Introduction to Data Structures and Algorithms in Java: Dive into the heart of programming efficiency! This isn’t your grandma’s coding class; we’re talking about the fundamental building blocks that power everything from your favorite apps to complex AI systems. We’ll unpack the mysteries of arrays, linked lists, trees, and graphs, then unleash the power of algorithms to solve problems with speed and elegance. Get ready to level up your coding game.

This guide will walk you through the essential data structures used in Java, explaining their strengths and weaknesses. We’ll cover algorithms like searching and sorting, exploring their time complexities and real-world applications. From the basics of array manipulation to the intricacies of graph traversal, we’ll make sure you understand not just *how* things work, but *why* they’re designed the way they are. Prepare for a journey into the elegant world of efficient programming.

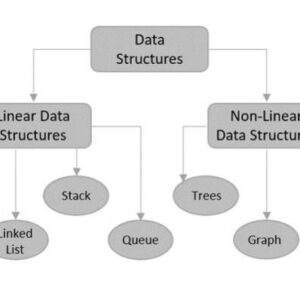

Introduction to Data Structures

Data structures are fundamental to computer science, providing efficient ways to organize and manage data. Choosing the right data structure significantly impacts the performance and scalability of your applications. Understanding their properties is crucial for any programmer, especially when working with Java.

Fundamental Concepts of Data Structures

Data structures are essentially ways of storing and organizing data in a computer so that it can be used efficiently. They define not only what kind of data is stored but also how that data is accessed, manipulated, and related. Key aspects include the relationships between data elements (linear, hierarchical, or network), the operations allowed on the data (insertion, deletion, search, etc.), and the memory usage characteristics. The choice of data structure depends heavily on the specific needs of the application, such as the frequency of various operations and the size of the dataset.

Common Data Structures in Java

Java provides built-in support and rich libraries for a variety of data structures. Let’s examine some of the most common ones.

Arrays

Arrays are the simplest data structure in Java. They store a fixed-size sequence of elements of the same type. Access to elements is direct and fast using their index (position).

Advantages: Simple to implement, fast access using index.

Disadvantages: Fixed size, inefficient insertion/deletion in the middle.

Example: `int[] numbers = new int[10];`

Linked Lists

Linked lists consist of nodes, each containing data and a reference to the next node. This lets the list grow and shrink dynamically, and a node can be inserted or removed in constant time once its position has been reached.

Advantages: Dynamic size, efficient insertion/deletion.

Disadvantages: Slower access to elements (requires traversal).

Example: A singly linked list is implemented by defining a Node class with data and a pointer to the next Node.

Stacks

Stacks follow the Last-In, First-Out (LIFO) principle. Think of a stack of plates; you can only add or remove plates from the top.

Advantages: Simple to implement, efficient for tracking function calls or undo/redo operations.

Disadvantages: Limited access to elements (only top element).

Example: The `java.util.Stack` class provides a stack implementation.

Queues

Queues follow the First-In, First-Out (FIFO) principle. Like a queue at a store, the first element added is the first to be removed.

Advantages: Efficient for managing tasks or requests in order of arrival.

Disadvantages: Limited access to elements (only front and rear).

Example: The `java.util.Queue` interface and its implementations (like `LinkedList`) provide queue functionality.
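To make the LIFO and FIFO orderings concrete, here is a minimal sketch using `java.util.ArrayDeque`, which the JDK documentation suggests in preference to `java.util.Stack` for stack use. The class name `StackQueueDemo` and its helper methods are made up for this illustration.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.Queue;

class StackQueueDemo {
    // Pushes the values in order, then pops them all: last in, first out.
    static List<Integer> stackOrder(int... values) {
        Deque<Integer> stack = new ArrayDeque<>();
        for (int v : values) {
            stack.push(v);
        }
        List<Integer> out = new ArrayList<>();
        while (!stack.isEmpty()) {
            out.add(stack.pop());
        }
        return out;
    }

    // Enqueues the values in order, then dequeues them all: first in, first out.
    static List<Integer> queueOrder(int... values) {
        Queue<Integer> queue = new ArrayDeque<>();
        for (int v : values) {
            queue.offer(v);
        }
        List<Integer> out = new ArrayList<>();
        while (!queue.isEmpty()) {
            out.add(queue.poll());
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(stackOrder(1, 2, 3)); // [3, 2, 1]
        System.out.println(queueOrder(1, 2, 3)); // [1, 2, 3]
    }
}
```

The same input comes back reversed from the stack and unchanged from the queue, which is the whole difference between the two structures.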

Trees

Trees are hierarchical data structures with a root node and branches. Different types of trees (binary trees, binary search trees, etc.) offer various advantages.

Advantages: Efficient search, insertion, and deletion in balanced trees.

Disadvantages: Can be complex to implement and maintain balance.

Example: Binary Search Trees (BSTs) allow for efficient searching (O(log n) on average).

Graphs

Graphs consist of nodes (vertices) and edges connecting them. They represent relationships between data elements.

Advantages: Represent complex relationships between data.

Disadvantages: Can be computationally expensive for certain operations.

Example: Social networks are often modeled as graphs, where nodes represent users and edges represent connections.

Comparison of Data Structures

The following table summarizes the time complexity of common operations for different data structures. Note that these are average-case complexities; worst-case scenarios may differ.

| Data Structure | Insertion | Deletion | Search |
|---|---|---|---|
| Array | O(1) (at end), O(n) (in middle) | O(1) (at end), O(n) (in middle) | O(n) |
| Linked List | O(1) (at beginning/end), O(n) (in middle) | O(1) (at beginning/end), O(n) (in middle) | O(n) |
| Stack | O(1) | O(1) | O(n) |
| Queue | O(1) | O(1) | O(n) |
| Binary Search Tree | O(log n) | O(log n) | O(log n) |
| Graph (depends on implementation) | Varies | Varies | Varies |

Introduction to Algorithms

Algorithms are the secret sauce of computer science. Think of them as precise, step-by-step instructions a computer follows to solve a specific problem. Without algorithms, our computers would be glorified calculators, incapable of the complex tasks we rely on daily. From sorting your Instagram feed to recommending your next Netflix binge, algorithms are everywhere, quietly making our digital lives run smoothly.

Algorithms are crucial for efficient problem-solving because they provide a structured and repeatable approach. A well-designed algorithm ensures that a problem is solved correctly and consistently, regardless of the input data. This predictability is vital in software development, where reliability and consistency are paramount. Poorly designed algorithms, on the other hand, can lead to slow, inefficient, or even incorrect results.

Algorithm Efficiency and Time Complexity

The efficiency of an algorithm is measured by how its resource consumption (primarily time and memory) scales with the size of the input data. Time complexity, often expressed using Big O notation, provides a concise way to describe this scaling behavior. Big O notation focuses on the dominant terms as the input size grows large, ignoring constant factors. For example, an algorithm with O(n) time complexity means its execution time grows linearly with the input size (n). O(n²) indicates a quadratic growth, while O(log n) signifies a logarithmic growth, which is highly efficient for large datasets. Understanding Big O notation is essential for choosing the right algorithm for a given task, especially when dealing with large amounts of data.

Common Algorithm Types

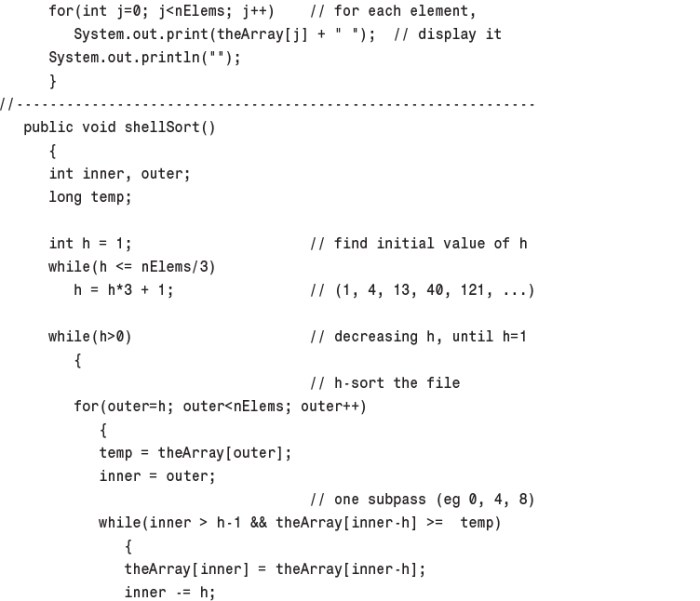

Several categories of algorithms address common computational problems. Searching algorithms find specific elements within a data structure. Linear search checks each element sequentially, resulting in O(n) time complexity. Binary search, applicable to sorted data, repeatedly divides the search interval in half, achieving a much faster O(log n) complexity. Sorting algorithms arrange elements in a specific order. Bubble sort, though simple, is inefficient with O(n²) complexity. Merge sort, on the other hand, employs a divide-and-conquer strategy, achieving O(n log n) complexity, making it significantly faster for larger datasets. Graph traversal algorithms explore connections within a graph data structure. Breadth-first search (BFS) explores nodes level by level, while depth-first search (DFS) explores as far as possible along each branch before backtracking. The choice between BFS and DFS depends on the specific problem and the desired outcome.
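The two searching strategies described above can be sketched side by side. This is an illustrative version only; the class name `SearchDemo` is invented here, and both methods return -1 when the target is absent.

```java
class SearchDemo {
    // Linear search: checks each element in turn, O(n).
    static int linearSearch(int[] data, int target) {
        for (int i = 0; i < data.length; i++) {
            if (data[i] == target) {
                return i;
            }
        }
        return -1;
    }

    // Binary search on a sorted array: halves the interval each step, O(log n).
    static int binarySearch(int[] sorted, int target) {
        int low = 0;
        int high = sorted.length - 1;
        while (low <= high) {
            int mid = low + (high - low) / 2; // avoids int overflow of (low + high)
            if (sorted[mid] == target) {
                return mid;
            } else if (sorted[mid] < target) {
                low = mid + 1;
            } else {
                high = mid - 1;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        int[] sorted = {1, 3, 5, 7, 9, 11};
        System.out.println(linearSearch(sorted, 7)); // 3
        System.out.println(binarySearch(sorted, 7)); // 3
        System.out.println(binarySearch(sorted, 4)); // -1
    }
}
```

Binary search only gives correct answers when the array is already sorted; on unsorted data, linear search is the safe choice.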

Algorithm Trade-offs

Choosing the optimal algorithm often involves considering trade-offs between different approaches. For example, a simpler algorithm might be easier to implement but less efficient for large datasets. A more complex algorithm might offer superior performance but require more development time and resources. The ideal algorithm depends on the specific problem constraints, including the size of the input data, available resources, and the acceptable level of performance. Sometimes, a less-efficient algorithm might be preferable if it’s easier to understand, maintain, or debug.

Merge Sort in Java

Merge sort is a classic example of a divide-and-conquer algorithm. It recursively divides the input array into smaller subarrays until each subarray contains only one element (which is inherently sorted). Then, it repeatedly merges the subarrays to produce new sorted subarrays until there is only one sorted array remaining.

```java
public class MergeSort {

    // Recursively splits the array in half, sorts each half, then merges them.
    public static void mergeSort(int[] arr) {
        if (arr.length <= 1) {
            return; // zero or one element is already sorted
        }
        int mid = arr.length / 2;
        int[] left = new int[mid];
        int[] right = new int[arr.length - mid];
        System.arraycopy(arr, 0, left, 0, mid);
        System.arraycopy(arr, mid, right, 0, arr.length - mid);
        mergeSort(left);
        mergeSort(right);
        merge(arr, left, right);
    }

    // Merges two sorted arrays back into arr.
    public static void merge(int[] arr, int[] left, int[] right) {
        int i = 0, j = 0, k = 0;
        while (i < left.length && j < right.length) {
            if (left[i] <= right[j]) {
                arr[k++] = left[i++];
            } else {
                arr[k++] = right[j++];
            }
        }
        // Copy whatever remains in either half.
        while (i < left.length) {
            arr[k++] = left[i++];
        }
        while (j < right.length) {
            arr[k++] = right[j++];
        }
    }

    public static void main(String[] args) {
        int[] arr = {12, 11, 13, 5, 6, 7};
        mergeSort(arr);
        System.out.println("Sorted array:");
        for (int num : arr) {
            System.out.print(num + " ");
        }
    }
}
```

The `mergeSort` method recursively divides the array, and the `merge` method combines the sorted subarrays. The `main` method demonstrates its usage. Merge sort runs in O(n log n) time in the best, average, and worst case, at the cost of O(n) extra memory for the temporary `left` and `right` arrays.

Arrays in Java


Arrays are fundamental data structures in Java, providing a way to store a collection of elements of the same data type in contiguous memory locations. Understanding arrays is crucial for efficient data manipulation and forms the basis for many advanced algorithms. This section delves into the declaration, initialization, manipulation, and applications of arrays in Java, including a look at multi-dimensional arrays and a simple stack implementation.

Array Declaration and Initialization

Declaring an array involves specifying the data type and the number of elements it will hold. For example, to declare an integer array named `numbers` capable of holding 10 integers, you would write: `int[] numbers = new int[10];`. This allocates space for 10 integers, and each element is automatically initialized to its default value (0 for integers). Alternatively, you can initialize an array directly upon declaration with an array initializer in braces: `int[] numbers = {1, 2, 3, 4, 5, 6, 7, 8, 9, 10};`. This creates an array with the specified values. The length of an array is fixed at the time of creation and cannot be changed later.

Array Traversal Techniques

Accessing and processing each element of an array is called traversal. This is commonly done using a `for` loop. For example, to print each element of the `numbers` array:

```java
for (int i = 0; i < numbers.length; i++) {
    System.out.println(numbers[i]);
}
```

This loop iterates through each index from 0 to `numbers.length - 1`, printing the value at each index. Enhanced `for` loops (also known as for-each loops) provide a more concise way to iterate:

```java
for (int number : numbers) {
    System.out.println(number);
}
```

This loop iterates through each element in the array without explicitly managing the index.

Finding Maximum and Minimum Elements

To find the maximum and minimum elements in an array, you can iterate through the array, keeping track of the largest and smallest values encountered. Consider this example:

```java
int[] numbers = {5, 2, 9, 1, 5, 6};
int max = numbers[0];
int min = numbers[0];
for (int i = 1; i < numbers.length; i++) {
    if (numbers[i] > max) {
        max = numbers[i];
    }
    if (numbers[i] < min) {
        min = numbers[i];
    }
}
System.out.println("Maximum: " + max);
System.out.println("Minimum: " + min);
```

This code initializes `max` and `min` to the first element and then iterates, updating them whenever a larger or smaller element is found.

Multi-Dimensional Arrays

Multi-dimensional arrays represent arrays of arrays. A two-dimensional array can be visualized as a table or matrix. For example, a 3×4 integer array can be declared as: `int[][] matrix = new int[3][4];`. Each element is accessed using two indices: `matrix[row][column]`. Multi-dimensional arrays are useful for representing data with multiple dimensions, such as images (pixels arranged in rows and columns) or game boards.
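As a small sketch of row and column indexing, the example below fills one cell of a 3×4 matrix and sums a 2D array with nested loops. The class name `MatrixDemo` and the `sum` helper are invented for this illustration.

```java
class MatrixDemo {
    // Sums every element of a 2D array by visiting each row, then each column.
    static int sum(int[][] matrix) {
        int total = 0;
        for (int row = 0; row < matrix.length; row++) {
            for (int col = 0; col < matrix[row].length; col++) {
                total += matrix[row][col];
            }
        }
        return total;
    }

    public static void main(String[] args) {
        int[][] matrix = new int[3][4]; // 3 rows, 4 columns, all zeros
        matrix[1][2] = 7;               // row 1, column 2
        int[][] board = {
            {1, 2, 3},
            {4, 5, 6}
        };
        System.out.println(matrix.length);    // 3 (rows)
        System.out.println(matrix[0].length); // 4 (columns)
        System.out.println(sum(matrix));      // 7
        System.out.println(sum(board));       // 21
    }
}
```

Because a Java 2D array is an array of arrays, `matrix.length` gives the number of rows and `matrix[row].length` gives the length of one particular row.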

Array-Based Stack Implementation

A stack is a Last-In-First-Out (LIFO) data structure. It can be implemented with an array that stores the elements and an integer that tracks the index of the top element. The `push` operation adds an element to the top, and the `pop` operation removes and returns the top element. An example implementation might look like this:

```java
class ArrayStack {
    private int[] stack;
    private int top;
    private int capacity;

    public ArrayStack(int capacity) {
        this.capacity = capacity;
        this.stack = new int[capacity];
        this.top = -1; // Initially empty
    }

    public void push(int value) {
        if (top == capacity - 1) {
            System.out.println("Stack Overflow");
            return;
        }
        stack[++top] = value;
    }

    public int pop() {
        if (top == -1) {
            System.out.println("Stack Underflow");
            return -1; // Or throw an exception
        }
        return stack[top--];
    }

    // … other methods (peek, isEmpty, isFull, etc.) …
}
```

This code demonstrates a basic stack implementation using an array. Error handling is included for stack overflow and underflow conditions.

Linked Lists in Java

Linked lists offer a dynamic alternative to arrays, providing flexibility in memory management and element insertion/deletion. Unlike arrays with their fixed size, linked lists can grow or shrink as needed, making them ideal for situations where the number of elements is unpredictable. This flexibility comes at the cost of slower access to individual elements: reaching the k-th element takes O(k) steps instead of the O(1) index lookup an array provides. Whether that trade-off pays off depends on how often the application inserts and deletes compared with how often it reads by position.

Linked lists are fundamental data structures consisting of nodes, each holding a data element and a pointer (reference) to the next node in the sequence. Different types of linked lists—singly, doubly, and circular—vary in how these pointers are used, leading to distinct performance characteristics and application scenarios.


Singly Linked Lists

A singly linked list is the simplest type. Each node contains data and a pointer to the next node in the sequence. The last node’s pointer points to null, signifying the end of the list. This structure makes traversing the list efficient in one direction (forward), but going backward requires traversing the entire list from the beginning.

Consider a singly linked list representing a queue of tasks: Each node could store a task description and a pointer to the next task in the queue. Adding a new task involves creating a new node and updating the pointer of the last node. Removing the task at the front only requires moving the head reference to the next node, while removing a task from the middle requires updating the pointer of the preceding node.

Doubly Linked Lists

Doubly linked lists enhance the singly linked list by adding a second pointer in each node: one pointing to the next node and another pointing to the previous node. This bidirectional linking allows for efficient traversal in both directions.

Imagine a music playlist: A doubly linked list would allow for easy navigation—moving forward and backward through songs. Insertion and deletion operations become more complex due to the need to update two pointers per node, but the ability to move efficiently in both directions makes it advantageous in certain scenarios.
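A minimal sketch of the idea follows, assuming a list of `int` values with both `head` and `tail` references. The class `DoublyLinkedList` and its method names are invented for this example and are not a standard API.

```java
import java.util.NoSuchElementException;

class DoublyLinkedList {
    // Each node keeps a reference in both directions.
    private static class Node {
        int data;
        Node prev, next;

        Node(int data) {
            this.data = data;
        }
    }

    private Node head, tail;

    // Appends in O(1) by linking the new node after the current tail.
    void addLast(int value) {
        Node node = new Node(value);
        if (tail == null) {
            head = tail = node;
        } else {
            node.prev = tail;
            tail.next = node;
            tail = node;
        }
    }

    // Removes and returns the last value in O(1) using the prev reference.
    int removeLast() {
        if (tail == null) {
            throw new NoSuchElementException("list is empty");
        }
        int value = tail.data;
        tail = tail.prev;
        if (tail == null) {
            head = null; // the list is now empty
        } else {
            tail.next = null;
        }
        return value;
    }

    // Walks head to tail via next references.
    String forward() {
        StringBuilder sb = new StringBuilder();
        for (Node n = head; n != null; n = n.next) {
            sb.append(n.data).append(' ');
        }
        return sb.toString().trim();
    }

    // Walks tail to head via prev references.
    String backward() {
        StringBuilder sb = new StringBuilder();
        for (Node n = tail; n != null; n = n.prev) {
            sb.append(n.data).append(' ');
        }
        return sb.toString().trim();
    }
}
```

After adding 10, 20, and 30, `forward()` yields `10 20 30` and `backward()` yields `30 20 10`. The extra `prev` bookkeeping in `addLast` and `removeLast` is the added complexity the paragraph above refers to.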

Circular Linked Lists

In a circular linked list, the last node’s pointer doesn’t point to null but instead points back to the first node, creating a closed loop. This structure is particularly useful for implementing circular buffers or scenarios where continuous looping is required.

A classic example is a carousel: Imagine each node representing a carousel horse. The last horse points back to the first, creating a continuous loop. Traversing the list would seamlessly cycle through all horses.

Performance Comparison of Linked Lists

| Operation | Singly Linked List | Doubly Linked List | Circular Linked List |
|---|---|---|---|
| Insertion at beginning | O(1) | O(1) | O(1) – if tail pointer is maintained |
| Insertion at end | O(n) – O(1) if tail pointer is maintained | O(1) – if tail pointer is maintained | O(1) – if tail pointer is maintained |
| Deletion at beginning | O(1) | O(1) | O(1) – if tail pointer is maintained |
| Deletion at end | O(n) | O(1) – if tail pointer is maintained | O(n) if singly linked; O(1) if doubly linked |
| Access by index | O(n) | O(n) | O(n) |

The table highlights the time complexity of common operations. Maintaining a tail pointer makes insertion at the end O(1) for every variant. Deleting the last node in O(1) also requires a reference to the previous node, which is why a singly linked list still needs an O(n) traversal to find the new tail.

Implementing a Simple Singly Linked List in Java

This example demonstrates a basic singly linked list implementation with insertion and deletion operations. Note that error handling (e.g., for attempting to delete from an empty list) is omitted for brevity.

```java
class Node {
    int data;
    Node next;

    Node(int d) {
        data = d;
        next = null;
    }
}

public class SinglyLinkedList {
    Node head;

    // Inserts a new node before the current head: O(1).
    void insertAtBeginning(int newData) {
        Node newNode = new Node(newData);
        newNode.next = head;
        head = newNode;
    }

    // Walks to the last node and links the new node after it: O(n).
    void insertAtEnd(int newData) {
        Node newNode = new Node(newData);
        if (head == null) {
            head = newNode;
            return;
        }
        Node last = head;
        while (last.next != null) {
            last = last.next;
        }
        last.next = newNode;
    }

    // Removes the first node whose data equals key, if any.
    void deleteNode(int key) {
        Node temp = head, prev = null;
        if (temp != null && temp.data == key) {
            head = temp.next;
            return;
        }
        while (temp != null && temp.data != key) {
            prev = temp;
            temp = temp.next;
        }
        if (temp == null) return; // key not found
        prev.next = temp.next;
    }

    void printList() {
        Node tnode = head;
        while (tnode != null) {
            System.out.print(tnode.data + " ");
            tnode = tnode.next;
        }
    }

    public static void main(String[] args) {
        SinglyLinkedList llist = new SinglyLinkedList();
        llist.insertAtEnd(1);
        llist.insertAtBeginning(2);
        llist.insertAtEnd(3);
        llist.insertAtBeginning(4);
        System.out.println("Created Linked list is: ");
        llist.printList();
        System.out.println("");
        llist.deleteNode(3);
        System.out.println("Linked list after Deletion of 3: ");
        llist.printList();
    }
}
```

Illustration of a Singly Linked List

Imagine a chain of boxes. Each box represents a node. Inside each box is a number (the data). Each box also has an arrow pointing to the next box in the sequence. The last box’s arrow points to nothing (null). For example, a list containing 10, 20, 30 would have three boxes. The first box contains 10 and its arrow points to the second box (containing 20). The second box’s arrow points to the third box (containing 30). The third box’s arrow points to nothing. This visual representation clearly shows the sequential nature of a singly linked list and how data and pointers work together.

Trees and Graphs in Java

So far, we’ve tackled the linear world of arrays and linked lists. Now, let’s dive into the more complex, and frankly, more exciting realm of non-linear data structures: trees and graphs. These structures are crucial for representing hierarchical relationships and interconnected networks, forming the backbone of many applications, from file systems to social networks.

Trees and graphs, while distinct, share a common thread: they’re composed of nodes (data elements) and edges (connections between nodes). The key difference lies in the structure of these connections. Trees have a hierarchical structure with a single root node and no cycles, whereas graphs have no designated root and may contain cycles. This fundamental difference dictates their applications and the algorithms used to manipulate them.

Tree Data Structures

Trees are hierarchical data structures, resembling an upside-down tree. Each node can have zero or more child nodes; a node with no children is called a leaf. The topmost node is called the root. Different types of trees are characterized by the number of children each node can have and the relationships between nodes.

Binary Trees

A binary tree is a tree where each node has at most two children, referred to as the left child and the right child. Binary trees are fundamental building blocks for more complex tree structures. Their simplicity makes them easy to implement and understand, while their flexibility allows them to be used in various applications, such as expression evaluation and Huffman coding.

Binary Search Trees (BSTs)

Binary Search Trees are a special type of binary tree where the value of each node is greater than all values in its left subtree and less than all values in its right subtree. This property allows for efficient searching, insertion, and deletion of elements. A well-balanced BST provides logarithmic time complexity for these operations, making it a highly efficient data structure for searching and sorting. An unbalanced BST, however, can degenerate into a linked list, resulting in linear time complexity.

AVL Trees

AVL trees are self-balancing binary search trees. They maintain a balance factor for each node (the difference in height between its left and right subtrees), ensuring that the tree remains relatively balanced even after insertions and deletions. This self-balancing property guarantees logarithmic time complexity for all operations, regardless of the insertion order, making them a robust choice when performance is critical. The balancing is achieved through rotations, which restructure parts of the tree to maintain the balance factor.

Graph Representations

Graphs, unlike trees, can contain cycles and have no designated root, which lets them represent complex relationships between data points. Two primary ways to represent graphs in Java are the adjacency matrix and the adjacency list.

Adjacency Matrix

An adjacency matrix is a two-dimensional array where each element (i, j) represents the connection between node i and node j. A value of 1 indicates a connection, and 0 indicates no connection. This representation is simple to implement but can be inefficient for sparse graphs (graphs with relatively few edges) as it uses a lot of memory to store zeros.

Adjacency List

An adjacency list represents a graph using an array of linked lists. Each element in the array represents a node, and its corresponding linked list contains the nodes it is connected to. This representation is efficient for sparse graphs, as it only stores the existing connections, saving memory compared to an adjacency matrix.
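The two representations can be compared in a short sketch. It assumes an undirected graph whose vertices are numbered from 0, and it uses a `List<List<Integer>>` in place of an array of linked lists. The class `AdjacencyListGraph` and its methods are illustrative names, not a library API.

```java
import java.util.ArrayList;
import java.util.List;

class AdjacencyListGraph {
    // neighbors.get(u) lists the vertices adjacent to u.
    private final List<List<Integer>> neighbors = new ArrayList<>();

    AdjacencyListGraph(int vertexCount) {
        for (int i = 0; i < vertexCount; i++) {
            neighbors.add(new ArrayList<>());
        }
    }

    // Adds an undirected edge by recording each endpoint in the other's list.
    void addEdge(int u, int v) {
        neighbors.get(u).add(v);
        neighbors.get(v).add(u);
    }

    List<Integer> neighborsOf(int u) {
        return neighbors.get(u);
    }

    // Builds the equivalent adjacency matrix: 1 where an edge exists, else 0.
    int[][] toMatrix() {
        int n = neighbors.size();
        int[][] matrix = new int[n][n];
        for (int u = 0; u < n; u++) {
            for (int v : neighbors.get(u)) {
                matrix[u][v] = 1;
            }
        }
        return matrix;
    }
}
```

For a graph with V vertices and E edges, the list form stores about V + 2E entries, while the matrix always stores V × V cells, which is where the memory difference for sparse graphs comes from.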

Binary Search Tree Implementation in Java

```java
class Node {
    int data;
    Node left, right;

    Node(int d) {
        data = d;
        left = right = null;
    }
}

class BinarySearchTree {
    Node root;

    BinarySearchTree() {
        root = null;
    }

    void insert(int data) {
        root = insertRec(root, data);
    }

    // Returns the (possibly new) root of the subtree after inserting data.
    Node insertRec(Node root, int data) {
        if (root == null) {
            root = new Node(data);
            return root;
        }
        if (data < root.data) {
            root.left = insertRec(root.left, data);
        } else if (data > root.data) {
            root.right = insertRec(root.right, data);
        }
        // Equal keys fall through, so duplicates are ignored.
        return root;
    }

    void inorder() {
        inorderRec(root);
    }

    // Visits left subtree, node, then right subtree.
    void inorderRec(Node root) {
        if (root != null) {
            inorderRec(root.left);
            System.out.print(root.data + " ");
            inorderRec(root.right);
        }
    }
}

public class Main {
    public static void main(String[] args) {
        BinarySearchTree tree = new BinarySearchTree();
        tree.insert(50);
        tree.insert(30);
        tree.insert(20);
        tree.insert(40);
        tree.insert(70);
        tree.insert(60);
        tree.insert(80);
        System.out.println("Inorder traversal of the given tree");
        tree.inorder();
    }
}
```

This Java code implements a basic binary search tree with methods for insertion and inorder traversal. The `inorder` traversal visits nodes in ascending order of their values.

Graph Traversal Algorithms

Exploring all nodes in a graph is done using traversal algorithms. Two common methods are Breadth-First Search (BFS) and Depth-First Search (DFS).

Breadth-First Search (BFS)

BFS explores a graph level by level. It starts at a root node and visits all its neighbors before moving to the next level. This is typically implemented using a queue data structure. BFS is useful for finding the shortest path in unweighted graphs. Imagine searching for someone in a large social network; BFS would explore their immediate connections before moving to their friends’ friends.
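A minimal BFS sketch over an adjacency list follows. It assumes vertices are numbered from 0 and that `adj.get(u)` lists the neighbors of `u`. The class name `BfsDemo` is invented for this example, and `List.of` requires Java 9 or later.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

class BfsDemo {
    // Returns vertices in the order BFS visits them, starting from start.
    static List<Integer> bfs(List<List<Integer>> adj, int start) {
        boolean[] visited = new boolean[adj.size()];
        List<Integer> order = new ArrayList<>();
        Queue<Integer> queue = new ArrayDeque<>();
        visited[start] = true;
        queue.offer(start);
        while (!queue.isEmpty()) {
            int u = queue.poll();
            order.add(u);
            for (int v : adj.get(u)) {
                if (!visited[v]) {
                    visited[v] = true; // mark on enqueue so each vertex is queued once
                    queue.offer(v);
                }
            }
        }
        return order;
    }

    public static void main(String[] args) {
        // Undirected edges: 0-1, 0-2, 1-3, 2-3, 3-4
        List<List<Integer>> adj = List.of(
            List.of(1, 2), List.of(0, 3), List.of(0, 3), List.of(1, 2, 4), List.of(3));
        System.out.println(bfs(adj, 0)); // [0, 1, 2, 3, 4]
    }
}
```

Vertices 1 and 2 (one edge from the start) are visited before vertex 3 (two edges away), which in turn comes before vertex 4. That level-by-level order is why BFS finds shortest paths in unweighted graphs.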

Depth-First Search (DFS)

DFS explores a graph by going as deep as possible along each branch before backtracking. It uses a stack (implicitly through recursion or explicitly using a stack data structure). DFS is useful for tasks like topological sorting and finding connected components in a graph. Think of exploring a maze: DFS would try one path completely before trying another.
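A recursive DFS sketch over an adjacency list follows, where the call stack plays the role of the stack. It assumes vertices are numbered from 0 and that `adj.get(u)` lists the neighbors of `u`. The class name `DfsDemo` is invented for this example, and `List.of` requires Java 9 or later.

```java
import java.util.ArrayList;
import java.util.List;

class DfsDemo {
    // Returns vertices in the order DFS first reaches them, starting from start.
    static List<Integer> dfs(List<List<Integer>> adj, int start) {
        List<Integer> order = new ArrayList<>();
        visit(adj, start, new boolean[adj.size()], order);
        return order;
    }

    // Visits u, then recurses into each unvisited neighbor before returning.
    private static void visit(List<List<Integer>> adj, int u,
                              boolean[] visited, List<Integer> order) {
        visited[u] = true;
        order.add(u);
        for (int v : adj.get(u)) {
            if (!visited[v]) {
                visit(adj, v, visited, order);
            }
        }
    }

    public static void main(String[] args) {
        // Undirected edges: 0-1, 0-2, 1-3, 2-3, 3-4
        List<List<Integer>> adj = List.of(
            List.of(1, 2), List.of(0, 3), List.of(0, 3), List.of(1, 2, 4), List.of(3));
        System.out.println(dfs(adj, 0)); // [0, 1, 3, 2, 4]
    }
}
```

Starting from 0, the search follows 0, 1, 3 and reaches 2 through 3 before it ever returns to 0's second neighbor. For very deep graphs, an explicit stack avoids the risk of overflowing the call stack.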

Algorithm Design Techniques

Crafting efficient algorithms is the cornerstone of any successful software project. Choosing the right approach can drastically impact performance, especially when dealing with large datasets. This section explores several powerful algorithm design paradigms, highlighting their strengths and weaknesses with practical examples.

Algorithm design isn’t a one-size-fits-all affair. Different problems lend themselves to different techniques. Understanding these paradigms allows you to select the most appropriate strategy, leading to optimized solutions. We’ll delve into three key paradigms: Divide and Conquer, Dynamic Programming, and Greedy Algorithms.

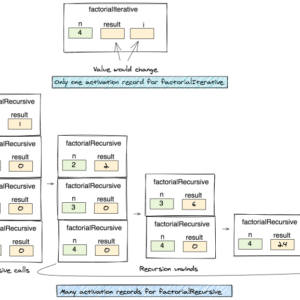

Divide and Conquer

Divide and conquer algorithms work by recursively breaking down a problem into smaller, self-similar subproblems until they become simple enough to solve directly. The solutions to these subproblems are then combined to obtain the solution to the original problem. This approach is particularly effective when the problem’s complexity can be significantly reduced by dividing it.

A classic example is merge sort. It recursively divides an unsorted list into smaller sublists until each sublist contains only one element (which is inherently sorted). Then, it repeatedly merges the sublists to produce new sorted sublists until there is only one sorted list remaining – the solution.

Another example is quicksort, which partitions the input array around a pivot element, recursively sorting the sub-arrays on either side of the pivot. While both merge sort and quicksort achieve O(n log n) time complexity on average, quicksort can have O(n²) worst-case time complexity if the pivot selection is consistently poor, whereas merge sort consistently maintains O(n log n).
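One way to sketch quicksort is with the Lomuto partition scheme, which uses the last element as the pivot. That pivot choice is an assumption made here for brevity, and it is exactly the kind of choice that degrades to quadratic time on already sorted input. The class name `QuickSortDemo` is invented for this example.

```java
import java.util.Arrays;

class QuickSortDemo {
    static void quickSort(int[] arr) {
        quickSort(arr, 0, arr.length - 1);
    }

    // Sorts arr[low..high] in place.
    private static void quickSort(int[] arr, int low, int high) {
        if (low >= high) {
            return; // zero or one element
        }
        int p = partition(arr, low, high);
        quickSort(arr, low, p - 1);
        quickSort(arr, p + 1, high);
    }

    // Lomuto partition: uses the last element as pivot and returns its final index.
    private static int partition(int[] arr, int low, int high) {
        int pivot = arr[high];
        int i = low; // next slot for an element smaller than the pivot
        for (int j = low; j < high; j++) {
            if (arr[j] < pivot) {
                swap(arr, i, j);
                i++;
            }
        }
        swap(arr, i, high);
        return i;
    }

    private static void swap(int[] arr, int a, int b) {
        int tmp = arr[a];
        arr[a] = arr[b];
        arr[b] = tmp;
    }

    public static void main(String[] args) {
        int[] arr = {12, 11, 13, 5, 6, 7};
        quickSort(arr);
        System.out.println(Arrays.toString(arr)); // [5, 6, 7, 11, 12, 13]
    }
}
```

Unlike merge sort, this version sorts in place and needs no temporary arrays. Choosing a random or median-of-three pivot is a common way to make the worst case unlikely.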

Dynamic Programming

Dynamic programming tackles problems by breaking them down into overlapping subproblems, solving each subproblem only once, and storing their solutions to avoid redundant computations. This approach is ideal for optimization problems where the optimal solution can be constructed from optimal solutions to its subproblems.

The Fibonacci sequence calculation is a prime example. A naive recursive approach recalculates the same Fibonacci numbers repeatedly, leading to exponential time complexity. Dynamic programming, however, solves each Fibonacci number only once and stores the results, resulting in linear time complexity. This is achieved either through memoization (storing results during recursion) or tabulation (building a table of solutions bottom-up).
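The three approaches can be sketched as follows, taking fib(0) = 0 and fib(1) = 1. The class and method names are invented for this example, and `long` is assumed to be wide enough for the inputs shown.

```java
import java.util.HashMap;
import java.util.Map;

class FibonacciDemo {
    // Naive recursion: recomputes the same subproblems, exponential time.
    static long fibNaive(int n) {
        return n < 2 ? n : fibNaive(n - 1) + fibNaive(n - 2);
    }

    // Memoization (top-down): caches each result the first time it is computed.
    static long fibMemo(int n, Map<Integer, Long> memo) {
        if (n < 2) {
            return n;
        }
        Long cached = memo.get(n);
        if (cached != null) {
            return cached;
        }
        long result = fibMemo(n - 1, memo) + fibMemo(n - 2, memo);
        memo.put(n, result);
        return result;
    }

    // Tabulation (bottom-up): fills a table from the base cases upward.
    static long fibTab(int n) {
        if (n < 2) {
            return n;
        }
        long[] table = new long[n + 1];
        table[1] = 1;
        for (int i = 2; i <= n; i++) {
            table[i] = table[i - 1] + table[i - 2];
        }
        return table[n];
    }

    public static void main(String[] args) {
        System.out.println(fibNaive(20));                // 6765
        System.out.println(fibMemo(50, new HashMap<>())); // 12586269025
        System.out.println(fibTab(50));                  // 12586269025
    }
}
```

Both dynamic programming versions do O(n) work. The tabulated version could keep only the last two values, which would reduce its memory use from O(n) to O(1).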

Greedy Algorithms

Greedy algorithms make locally optimal choices at each step, hoping to find a global optimum. They are simpler to implement than dynamic programming but don’t always guarantee the best solution. Their effectiveness depends heavily on the problem’s structure.

A classic example is Dijkstra’s algorithm for finding the shortest path in a graph. At each step, it selects the node with the shortest distance from the source node and adds it to the shortest path tree. While efficient, it only works correctly for graphs with non-negative edge weights.
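The greedy selection step can be sketched with `java.util.PriorityQueue`. This version assumes a directed graph stored as an adjacency list of `{neighbor, weight}` pairs with non-negative weights, and it skips outdated queue entries instead of updating them in place. The class name `DijkstraDemo` and the graph encoding are choices made for this example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.PriorityQueue;

class DijkstraDemo {
    // graph.get(u) holds {v, weight} pairs for each edge u -> v.
    // Unreachable vertices keep the distance Integer.MAX_VALUE.
    static int[] shortestDistances(List<List<int[]>> graph, int source) {
        int[] dist = new int[graph.size()];
        Arrays.fill(dist, Integer.MAX_VALUE);
        dist[source] = 0;
        // Queue entries are {vertex, distance}, ordered by distance.
        PriorityQueue<int[]> pq =
            new PriorityQueue<>((a, b) -> Integer.compare(a[1], b[1]));
        pq.offer(new int[] {source, 0});
        while (!pq.isEmpty()) {
            int[] top = pq.poll(); // greedy step: closest unsettled vertex
            int u = top[0];
            if (top[1] > dist[u]) {
                continue; // stale entry, a shorter path was already found
            }
            for (int[] edge : graph.get(u)) {
                int v = edge[0];
                int candidate = dist[u] + edge[1];
                if (candidate < dist[v]) {
                    dist[v] = candidate;
                    pq.offer(new int[] {v, candidate});
                }
            }
        }
        return dist;
    }

    public static void main(String[] args) {
        List<List<int[]>> graph = new ArrayList<>();
        for (int i = 0; i < 4; i++) {
            graph.add(new ArrayList<>());
        }
        graph.get(0).add(new int[] {1, 4});
        graph.get(0).add(new int[] {2, 1});
        graph.get(2).add(new int[] {1, 2});
        graph.get(1).add(new int[] {3, 1});
        graph.get(2).add(new int[] {3, 5});
        System.out.println(Arrays.toString(shortestDistances(graph, 0))); // [0, 3, 1, 4]
    }
}
```

Vertex 1 is reached more cheaply through vertex 2 (cost 3) than through the direct edge (cost 4). With a negative edge weight, a vertex could be settled too early, which is why the non-negative restriction matters.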

Another example is Huffman coding, which builds an optimal prefix code by repeatedly combining the two least frequent symbols. This greedy approach results in a code with minimal expected length, efficiently compressing data.

Knapsack Problem using Dynamic Programming

The 0/1 knapsack problem involves selecting a subset of items with maximum total value, given a weight constraint. Dynamic programming offers an efficient solution.

Consider a knapsack with a weight capacity of $W$ and a set of $n$ items, each with a weight $w_i$ and a value $v_i$. We can use a 2D array `dp[i][w]` to store the maximum value achievable using the first $i$ items and a maximum weight of $w$. The recurrence relation is:

$$
dp[i][w] =
\begin{cases}
\max\bigl(dp[i-1][w],\; dp[i-1][w - w_i] + v_i\bigr) & \text{if } w \ge w_i \\
dp[i-1][w] & \text{otherwise}
\end{cases}
$$

The base case is $dp[0][w] = 0$ for all $w$. The solution is found in $dp[n][W]$. A Java implementation would involve creating and populating this 2D array according to the recurrence relation.
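A direct translation of the recurrence above might look like the sketch below. It assumes integer weights and values, and because Java arrays are zero-based, item i of the recurrence is stored at index `i - 1`. The class name `KnapsackDemo` and the sample data are invented for this example.

```java
class KnapsackDemo {
    // Returns the maximum total value of items whose total weight fits in capacity.
    static int knapsack(int[] weights, int[] values, int capacity) {
        int n = weights.length;
        // dp[i][w]: best value using the first i items with weight limit w.
        // Row 0 stays all zeros, which is the base case.
        int[][] dp = new int[n + 1][capacity + 1];
        for (int i = 1; i <= n; i++) {
            for (int w = 0; w <= capacity; w++) {
                dp[i][w] = dp[i - 1][w]; // option 1: skip item i
                if (w >= weights[i - 1]) {
                    // option 2: take item i if it fits
                    dp[i][w] = Math.max(dp[i][w],
                        dp[i - 1][w - weights[i - 1]] + values[i - 1]);
                }
            }
        }
        return dp[n][capacity];
    }

    public static void main(String[] args) {
        int[] weights = {1, 3, 4, 5};
        int[] values = {1, 4, 5, 7};
        System.out.println(knapsack(weights, values, 7)); // 9
    }
}
```

In the sample run, the best choice is the items of weight 3 and 4, for a total value of 9. The table has (n + 1) × (W + 1) cells, so both time and memory are O(nW).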

End of Discussion

Mastering data structures and algorithms is the key to unlocking true programming prowess. This journey through the Java landscape has equipped you with the fundamental tools to build efficient and scalable applications. Remember, the beauty lies not just in writing code that works, but in crafting solutions that are both elegant and performant. So go forth, and build something amazing!