The Importance of Algorithms in Modern Software Development: Think software is just lines of code? Think again. It’s the invisible engine, the secret sauce, powered by algorithms that dictate how everything works – from the smooth scrolling of your favorite app to the complex calculations behind AI. This isn’t just about tech jargon; it’s about understanding the fundamental building blocks of the digital world we inhabit. We’ll dive deep into how algorithms shape modern software, exploring their efficiency, impact, and the exciting future they hold.

From sorting your Instagram feed to recommending your next Netflix binge, algorithms are everywhere. This exploration will unravel the mysteries behind their design, implementation, and the crucial role they play in creating reliable, scalable, and user-friendly software. We’ll dissect different types, compare their efficiency, and even peek into the future of algorithmic development, including the potential game-changers like quantum computing.

Defining Algorithms in Software Development

Algorithms are the unsung heroes of the digital world, the secret sauce that makes software tick. They’re essentially a set of step-by-step instructions that a computer follows to solve a specific problem. Think of them as recipes for your computer, meticulously detailing how to transform input data into a desired output. Without algorithms, our software would be nothing more than inert code, incapable of performing even the simplest tasks.

Algorithms are the backbone of any software, dictating how data is processed, problems are solved, and information is managed. They are the bridge connecting human logic and machine execution, allowing us to translate our intentions into actions performed by a computer. Understanding algorithms is crucial for anyone serious about software development, regardless of their chosen programming language.

Types of Algorithms

Different problems require different algorithmic approaches. The choice of algorithm depends heavily on factors like the size of the input data, the desired efficiency, and the specific constraints of the problem. A poorly chosen algorithm can lead to slow, inefficient software, while a well-chosen one can drastically improve performance.

- Search Algorithms: These algorithms are used to find specific data within a larger dataset. Linear search, for example, checks each element sequentially until it finds the target. Binary search, on the other hand, is much more efficient for sorted data, repeatedly dividing the search interval in half.

- Sorting Algorithms: Sorting algorithms arrange data in a specific order (e.g., ascending or descending). Bubble sort is a simple but inefficient algorithm, while merge sort and quicksort are much more efficient for larger datasets. Imagine sorting a deck of cards – different sorting methods would take different amounts of time.

- Graph Algorithms: Used to navigate and analyze networks represented as graphs. Dijkstra’s algorithm, for instance, finds the shortest path between two nodes in a graph whose edge weights are non-negative, which is crucial for applications like GPS navigation. Imagine mapping the most efficient route for a delivery service.

- Dynamic Programming Algorithms: These break down complex problems into smaller, overlapping subproblems, solving each subproblem only once and storing the results to avoid redundant computations. This is particularly useful for optimization problems, like finding the optimal path in a game or scheduling tasks efficiently.
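To make the dynamic-programming idea concrete, consider a hypothetical game board where each cell has a movement cost and the player may only move right or down. This minimal Python sketch (the board and the movement rule are assumptions for illustration) stores the cheapest cost to reach every cell in a table, so each overlapping subproblem is solved exactly once. That gives O(rows * cols) time instead of the exponential cost of trying every path.

```python
from typing import List


def min_path_cost(grid: List[List[int]]) -> int:
    """Cheapest top-left to bottom-right path moving only right or down.

    best[r][c] holds the cheapest cost to reach cell (r, c); each entry is
    computed once from two smaller subproblems and then reused.
    """
    rows, cols = len(grid), len(grid[0])
    best = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if r == 0 and c == 0:
                best[r][c] = grid[0][0]
            elif r == 0:
                best[r][c] = best[r][c - 1] + grid[r][c]  # reachable only from the left
            elif c == 0:
                best[r][c] = best[r - 1][c] + grid[r][c]  # reachable only from above
            else:
                # Reuse the stored answers for the two neighboring subproblems.
                best[r][c] = min(best[r - 1][c], best[r][c - 1]) + grid[r][c]
    return best[rows - 1][cols - 1]


board = [
    [1, 3, 1],
    [1, 5, 1],
    [4, 2, 1],
]
print(min_path_cost(board))  # 7
```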

Translating Human Logic into Machine-Executable Code

The process of translating human logic into machine-executable code involves breaking down a problem into smaller, manageable steps that a computer can understand. This typically involves using a programming language to express the algorithm’s logic. Each step in the algorithm is translated into a series of instructions that the computer’s processor can execute. This involves defining variables, using conditional statements (if-then-else), loops (for, while), and functions to organize and modularize the code. For example, a simple algorithm to calculate the area of a rectangle would involve defining variables for length and width, multiplying them, and storing the result in a new variable.
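Here is the rectangle example written out as a minimal Python sketch. Variables hold the inputs, a function packages the steps for reuse, and a loop applies them to several rectangles; the conditional that rejects negative side lengths is an assumption added for illustration, not part of the description above.

```python
def rectangle_area(length: float, width: float) -> float:
    """Return the area of a rectangle with the given side lengths."""
    # Conditional step: reject inputs that cannot describe a real rectangle.
    if length < 0 or width < 0:
        raise ValueError("length and width must be non-negative")
    area = length * width  # multiply and store the result in a new variable
    return area


print(rectangle_area(4.0, 2.5))  # 10.0

# Loop step: apply the same function to several rectangles.
for length, width in [(3, 4), (5, 5)]:
    print(length, width, rectangle_area(length, width))
```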

A Simple Sorting Algorithm: Bubble Sort

Let’s illustrate this with a simple example: sorting a list of numbers using the bubble sort algorithm. Bubble sort is a straightforward, albeit inefficient, algorithm that repeatedly steps through the list, compares adjacent elements, and swaps them if they are in the wrong order. The pass through the list is repeated until no swaps are needed, indicating that the list is sorted.

The algorithm can be described as follows:

- Compare the first two elements. If they are in the wrong order, swap them.

- Compare the second and third elements. If they are in the wrong order, swap them.

- Continue this process until the end of the list is reached.

- Repeat these passes until a full pass makes no swaps.
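The steps above translate almost line for line into code. This Python sketch follows them, with one common refinement that the description does not mention: after each pass the largest remaining value has already moved to the end, so the next pass can stop one position earlier. The early exit when a pass makes no swaps is what gives bubble sort its O(n) best case on input that is already sorted.

```python
from typing import List


def bubble_sort(values: List[int]) -> List[int]:
    """Return a sorted copy of `values` using bubble sort with an early exit."""
    items = list(values)  # copy so the caller's list is not modified
    n = len(items)
    while True:
        swapped = False
        # One pass: compare each adjacent pair and swap if out of order.
        for i in range(n - 1):
            if items[i] > items[i + 1]:
                items[i], items[i + 1] = items[i + 1], items[i]
                swapped = True
        # The largest remaining value is now at position n - 1,
        # so the next pass can stop one position earlier.
        n -= 1
        if not swapped:  # a pass with no swaps means the list is sorted
            return items


print(bubble_sort([5, 1, 4, 2, 8]))  # [1, 2, 4, 5, 8]
```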

This simple example demonstrates how a clear, step-by-step approach—the essence of an algorithm—can be translated into code to solve a common programming problem. While bubble sort isn’t the most efficient sorting algorithm for large datasets, its simplicity makes it a great introductory example to the fundamental concepts of algorithmic thinking.

Algorithms and Efficiency

In the world of software development, speed and resource efficiency are king. An algorithm isn’t just a set of instructions; it’s the engine that drives your application’s performance. Choosing the right algorithm can mean the difference between a snappy, responsive app and one that grinds to a halt. This section dives into the crucial relationship between algorithms and efficiency, exploring how we measure and optimize their performance.

Algorithm efficiency is paramount, impacting both the time it takes to execute (time complexity) and the amount of memory it consumes (space complexity). A poorly designed algorithm can lead to unacceptable wait times for users or even system crashes due to memory exhaustion. Conversely, a well-optimized algorithm ensures a smooth, efficient user experience, even with large datasets.

Time and Space Complexity

Time complexity describes how the runtime of an algorithm scales with the input size; space complexity describes how its memory usage scales. Both are typically expressed in Big O notation. For instance, O(n) time complexity means the runtime grows linearly with the input size (n), while O(n²) indicates quadratic growth: doubling the input roughly quadruples the runtime. Efficient algorithm design aims to keep both low, although the two often trade off against each other, as when caching intermediate results saves time at the cost of memory. Consider searching a phone book: a linear search (checking each entry one by one) is O(n), while a binary search (opening the book in the middle and discarding the half that cannot contain the name) is O(log n). Binary search depends on the entries being sorted, and it is dramatically faster for large phone books.
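The phone-book comparison can be checked by counting how many entries each strategy inspects. In this Python sketch the sorted phone book is modeled as `range(1_000_000)` purely for illustration, and the helper names are hypothetical. Searching for an entry that is not there forces both strategies into their worst case.

```python
from typing import Sequence


def linear_probes(items: Sequence[int], target: int) -> int:
    """Count how many entries a linear scan inspects before it stops."""
    probes = 0
    for value in items:
        probes += 1
        if value == target:
            break
    return probes


def binary_probes(items: Sequence[int], target: int) -> int:
    """Count how many entries binary search inspects (items must be sorted)."""
    probes = 0
    low, high = 0, len(items) - 1
    while low <= high:
        mid = (low + high) // 2
        probes += 1
        if items[mid] == target:
            break
        if items[mid] < target:
            low = mid + 1   # target can only be in the upper half
        else:
            high = mid - 1  # target can only be in the lower half
    return probes


phone_book = range(1_000_000)  # stand-in for one million sorted entries
missing = 1_000_000            # larger than every entry, so never found
print(linear_probes(phone_book, missing))  # 1000000
print(binary_probes(phone_book, missing))  # 20
```

Twenty probes instead of a million is the practical meaning of O(log n) versus O(n): each binary-search step halves the remaining range, and 2^20 is just over one million.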

Comparison of Sorting Algorithms

Different algorithms can solve the same problem, but with vastly different efficiencies. Let’s compare three common sorting algorithms: Bubble Sort, Merge Sort, and Quick Sort. These algorithms all achieve the same outcome – a sorted list – but their approaches and performance characteristics differ significantly. Bubble Sort, while simple to understand, is notoriously inefficient for large datasets. Merge Sort and Quick Sort, on the other hand, offer significantly better performance, making them preferred choices for larger-scale sorting tasks.

Big O Notation

Big O notation provides a standardized way to describe the upper bound of an algorithm’s runtime or space usage as the input size approaches infinity. It focuses on the dominant factors affecting performance, ignoring constant factors and smaller terms. For example, an algorithm with a runtime of 5n² + 10n + 5 is described as O(n²) because the n² term dominates as n grows large. Understanding Big O notation is crucial for comparing and evaluating the efficiency of different algorithms. It allows developers to make informed decisions about which algorithm to use based on the expected input size and performance requirements.
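Formally, f(n) = O(g(n)) if there are constants c > 0 and n₀ such that f(n) ≤ c · g(n) for every n ≥ n₀. For the runtime in the example, one valid choice of constants (others work too) follows from bounding each lower-order term by a multiple of n²:

```latex
\begin{aligned}
f(n) &= 5n^2 + 10n + 5 \\
     &\le 5n^2 + 10n^2 + 5n^2 \qquad (\text{since } n \le n^2 \text{ and } 1 \le n^2 \text{ for } n \ge 1) \\
     &= 20n^2,
\end{aligned}
\qquad \text{so } f(n) = O(n^2) \text{ with } c = 20,\ n_0 = 1.
```

At n = 1000, f(n) = 5,010,005, of which the 5n² term alone contributes 5,000,000; the lower-order terms add only about 0.2%, which is why Big O discards them.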

Time and Space Complexity of Sorting Algorithms

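The table below summarizes the time complexity and auxiliary (extra) space of Bubble Sort, Merge Sort, and Quick Sort. Best-, average-, and worst-case figures differ for some algorithms: Bubble Sort reaches O(n) only on already-sorted input and only if it stops after a pass with no swaps, and Quick Sort degrades to O(n²) when its pivots repeatedly split the data unevenly. Quick Sort’s O(log n) space assumes the implementation recurses into the smaller partition first; a naive version can need O(n) stack space in the worst case.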

| Algorithm | Best-Case Time Complexity | Average-Case Time Complexity | Worst-Case Time Complexity | Space Complexity (auxiliary) |
|---|---|---|---|---|
| Bubble Sort | O(n) | O(n²) | O(n²) | O(1) |
| Merge Sort | O(n log n) | O(n log n) | O(n log n) | O(n) |
| Quick Sort | O(n log n) | O(n log n) | O(n²) | O(log n) |
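The Quick Sort row depends on implementation details. The Python sketch below is one common variant, not the only one: it picks a random pivot, which makes the O(n²) worst case unlikely though still possible, and it recurses only into the smaller partition while looping over the larger one, which keeps the extra stack space at O(log n). The helper names are illustrative.

```python
import random
from typing import List


def _partition(items: List[int], low: int, high: int) -> int:
    """Lomuto partition around a random pivot; returns the pivot's final index."""
    pick = random.randint(low, high)  # random pivot guards against sorted input
    items[pick], items[high] = items[high], items[pick]
    pivot = items[high]
    boundary = low  # invariant: items[low:boundary] are all < pivot
    for i in range(low, high):
        if items[i] < pivot:
            items[i], items[boundary] = items[boundary], items[i]
            boundary += 1
    items[boundary], items[high] = items[high], items[boundary]
    return boundary


def _sort_range(items: List[int], low: int, high: int) -> None:
    while low < high:
        p = _partition(items, low, high)
        # Recurse into the smaller side and loop on the larger one, so the
        # recursion depth stays O(log n) even with unlucky pivots.
        if p - low < high - p:
            _sort_range(items, low, p - 1)
            low = p + 1
        else:
            _sort_range(items, p + 1, high)
            high = p - 1


def quick_sort(items: List[int]) -> None:
    """Sort `items` in place."""
    _sort_range(items, 0, len(items) - 1)


numbers = [33, 10, 55, 71, 29, 3, 18, 42]
quick_sort(numbers)
print(numbers)  # [3, 10, 18, 29, 33, 42, 55, 71]
```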

Algorithms in Specific Software Domains

Algorithms aren’t just abstract concepts; they’re the invisible engines driving the software we use every day. Their impact spans various software domains, shaping everything from how quickly your database searches to the recommendations you see on your favorite streaming service. Let’s dive into some key areas where algorithms play a crucial role.

Database Management System Algorithms

Database management systems (DBMS) rely heavily on efficient algorithms to handle massive amounts of data. These algorithms ensure quick retrieval, insertion, and deletion of information. Common examples include B-tree indexing for fast lookups and range scans, hash tables for exact-match retrieval based on key values, and sorting algorithms such as external merge sort for ordering result sets too large to fit in memory. For example, a B-tree index allows a DBMS to locate specific records within a large table by walking a shallow, balanced tree from root to leaf, significantly reducing search time compared to a linear scan. Without these efficient algorithms, even moderately sized databases would become impractically slow.
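As a rough illustration only, the sketch below contrasts a full table scan with two in-memory stand-ins for an index. Real database engines store B-trees in disk pages, not Python lists. Here a dict plays the role of a hash index for exact matches, and a sorted key list searched with the standard `bisect` module supplies the ordered range access a B-tree provides. The table, column, and function names are hypothetical.

```python
import bisect
from typing import Dict, List, Tuple

# Hypothetical "users" table: (user_id, name) rows in arbitrary order.
rows: List[Tuple[int, str]] = [(42, "Ada"), (7, "Linus"), (19, "Grace"), (3, "Alan")]


def full_scan(user_id: int) -> List[Tuple[int, str]]:
    """No index: inspect every row, O(n)."""
    return [row for row in rows if row[0] == user_id]


# Hash index: key -> row position (assumes user_id is unique), O(1) on average.
hash_index: Dict[int, int] = {row[0]: pos for pos, row in enumerate(rows)}

# Ordered index: sorted (key, position) pairs, O(log n) to find where a range starts.
ordered_index: List[Tuple[int, int]] = sorted((row[0], pos) for pos, row in enumerate(rows))


def range_lookup(low: int, high: int) -> List[Tuple[int, str]]:
    """Return rows with low <= user_id <= high using the ordered index."""
    start = bisect.bisect_left(ordered_index, (low, -1))  # -1 sorts before any position
    result: List[Tuple[int, str]] = []
    for key, pos in ordered_index[start:]:
        if key > high:
            break  # keys are sorted, so nothing later can match
        result.append(rows[pos])
    return result


print(full_scan(19))         # [(19, 'Grace')]
print(rows[hash_index[19]])  # (19, 'Grace')
print(range_lookup(5, 20))   # [(7, 'Linus'), (19, 'Grace')]
```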

Algorithms in Machine Learning and Artificial Intelligence

Machine learning and AI are fundamentally powered by algorithms. These algorithms allow systems to learn from data, make predictions, and improve their performance over time. Common algorithms include linear regression for predicting continuous values, logistic regression for classification tasks, decision trees for creating hierarchical models, and support vector machines for finding optimal separating hyperplanes between classes of data points. For instance, the recommendation system on Netflix uses sophisticated machine learning algorithms to analyze user viewing history and preferences, predicting which movies or shows they might enjoy. These algorithms constantly adapt and refine their predictions based on new data, providing increasingly personalized recommendations.
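Of the techniques listed, linear regression is the simplest to show end to end. This dependency-free Python sketch fits a single input variable by ordinary least squares; production code would normally use a library such as NumPy or scikit-learn, and the data below is made up so that the answer is easy to check.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept (one feature)."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = sum of co-deviations / sum of squared x deviations
    # (equivalently covariance(x, y) / variance(x)).
    co_dev = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sq_dev = sum((x - mean_x) ** 2 for x in xs)
    if sq_dev == 0:
        raise ValueError("all x values are identical; slope is undefined")
    slope = co_dev / sq_dev
    intercept = mean_y - slope * mean_x  # the fitted line passes through the means
    return slope, intercept


# Hypothetical data lying exactly on y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)  # 2.0 1.0
```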

Algorithms in Graphical User Interfaces

While less visible, algorithms are essential for creating smooth and responsive graphical user interfaces (GUIs). Algorithms determine how elements are rendered on the screen, how user input is processed, and how animations are handled. For example, event handling algorithms manage user interactions such as mouse clicks and keyboard presses, ensuring that the GUI responds appropriately. Layout algorithms arrange elements on the screen efficiently, preventing overlaps and ensuring readability. Some interfaces may also use pathfinding algorithms, for instance to route connecting lines or animated elements around obstacles, creating visually appealing and intuitive experiences.
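Layout is the easiest of these to sketch. Below is a minimal Python version of a "flow" layout, similar in spirit to wrapping rows in CSS flexbox or Java’s `FlowLayout`: boxes are placed left to right and wrap to a new row when the next one would not fit, so no two boxes overlap. The `Placement` type and `flow_layout` function are illustrative, not any toolkit’s API.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Placement:
    x: int
    y: int
    width: int
    height: int


def flow_layout(sizes: List[Tuple[int, int]], container_width: int, gap: int = 0) -> List[Placement]:
    """Place (width, height) boxes left to right, wrapping to a new row when full."""
    placements: List[Placement] = []
    x = y = row_height = 0
    for width, height in sizes:
        # Wrap if this box would overflow the row; a box wider than the
        # container still gets its own row rather than being dropped.
        if x > 0 and x + width > container_width:
            x = 0
            y += row_height + gap
            row_height = 0
        placements.append(Placement(x, y, width, height))
        x += width + gap
        row_height = max(row_height, height)  # tallest box sets the row height
    return placements


for p in flow_layout([(100, 30), (80, 40), (120, 30)], container_width=200, gap=10):
    print(p)
```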

Algorithms in Network Protocols and Communication Systems

Network protocols and communication systems rely on a diverse set of algorithms to manage data transmission, routing, and error handling. Routing algorithms determine efficient paths for data packets: link-state protocols such as OSPF compute shortest paths with Dijkstra’s algorithm, while distance-vector protocols such as RIP are based on the Bellman-Ford algorithm. Error-detection algorithms, such as checksums or CRC (Cyclic Redundancy Check), detect corruption introduced during transmission so that damaged data can be discarded and resent, while error-correcting codes such as Hamming or Reed-Solomon codes can repair a limited number of errors outright. For example, when you browse the internet, routing algorithms ensure your requests reach the correct server efficiently, while error detection and retransmission ensure the data received is accurate and complete. These algorithms are crucial for the reliable and efficient operation of the internet and other communication networks.
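The shortest-path computation at the heart of link-state routing can be sketched in a few lines with Python’s standard `heapq` priority queue. The router names and link costs below are made up, and real routers add a great deal of protocol machinery around this core; the sketch only shows Dijkstra’s algorithm itself.

```python
import heapq
from typing import Dict, List, Tuple

Graph = Dict[str, List[Tuple[str, int]]]


def dijkstra(graph: Graph, source: str) -> Dict[str, int]:
    """Shortest-path cost from `source` to every reachable node.

    Edge weights must be non-negative, as Dijkstra's algorithm requires.
    """
    dist: Dict[str, int] = {source: 0}
    queue: List[Tuple[int, str]] = [(0, source)]  # (cost so far, node)
    while queue:
        cost, node = heapq.heappop(queue)  # cheapest unsettled node first
        if cost > dist.get(node, float("inf")):
            continue  # stale entry: a cheaper route was already found
        for neighbor, weight in graph.get(node, []):
            new_cost = cost + weight
            if new_cost < dist.get(neighbor, float("inf")):
                dist[neighbor] = new_cost
                heapq.heappush(queue, (new_cost, neighbor))
    return dist


# Hypothetical topology: directed links with administrative costs.
links: Graph = {
    "A": [("B", 4), ("C", 1)],
    "C": [("B", 2), ("D", 5)],
    "B": [("D", 1)],
}
print(dijkstra(links, "A"))  # {'A': 0, 'B': 3, 'C': 1, 'D': 4}
```

Note that the direct A-B link (cost 4) loses to the detour A-C-B (cost 3), which is exactly the kind of non-obvious route the algorithm exists to find.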

Algorithm Design and Implementation


Crafting efficient algorithms is the backbone of high-performing software. It’s not just about writing code that works; it’s about writing code that works *well*, scaling gracefully with increasing data and user demands. This involves a strategic design process and a keen eye for optimization.

Efficient algorithm design and implementation requires a blend of theoretical understanding and practical experience. It’s an iterative process, often involving refinement and testing to achieve optimal performance. The choice of algorithm and its implementation heavily influence the software’s speed, resource consumption, and overall user experience.


Algorithm Design Paradigms

Algorithm design isn’t a random process. Several established paradigms provide structured approaches to tackling different problem types. These paradigms offer reusable strategies and help ensure efficiency.

Consider the classic “divide and conquer” strategy, where a complex problem is broken down into smaller, self-similar subproblems whose solutions are then combined. Merge sort, a highly efficient sorting algorithm, exemplifies this: it splits the array in half, sorts each half recursively, and then merges the two sorted halves into one sorted array. Dynamic programming, by contrast, tackles problems whose subproblems overlap, solving each subproblem only once and storing its solution to avoid redundant computation. This is particularly useful for optimization problems such as finding shortest paths in a graph; the Bellman-Ford and Floyd-Warshall algorithms are both dynamic-programming methods.
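The divide-and-conquer structure of merge sort is easy to see in code. In this Python sketch each call splits the list in half, sorts both halves recursively, and merges the results. The temporary lists built while merging are where merge sort’s O(n) auxiliary space comes from.

```python
from typing import List


def merge_sort(values: List[int]) -> List[int]:
    """Return a sorted copy of `values` using top-down merge sort."""
    if len(values) <= 1:
        return list(values)  # base case: zero or one element is already sorted
    mid = len(values) // 2
    left = merge_sort(values[:mid])    # divide: sort each half recursively
    right = merge_sort(values[mid:])
    # Combine: merge two sorted halves by repeatedly taking the smaller head.
    merged: List[int] = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:  # <= keeps equal elements in order (stable)
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])   # at most one of these two slices is non-empty
    merged.extend(right[j:])
    return merged


print(merge_sort([38, 27, 43, 3, 9, 82, 10]))  # [3, 9, 10, 27, 38, 43, 82]
```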

Step-by-Step Algorithm Debugging and Optimization

Even the most meticulously designed algorithms can contain bugs or inefficiencies. A systematic debugging and optimization process is crucial.

- Identify Bottlenecks: Use profiling tools to pinpoint sections of code consuming excessive time or resources. This often reveals areas ripe for optimization.

- Code Review: Peer review helps catch subtle errors and inefficiencies that might be missed during individual testing.

- Algorithmic Refinement: If profiling reveals an algorithmic limitation, consider alternative algorithms or optimize the existing one. This might involve changing data structures or employing different techniques.

- Data Structure Selection: Choosing the right data structure significantly impacts performance. For instance, using a hash table for fast lookups instead of a linear search can dramatically improve efficiency.

- Testing and Benchmarking: Rigorous testing with varied inputs is essential to validate improvements and ensure the optimized algorithm behaves correctly under diverse conditions.
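The data-structure and benchmarking steps can be combined in a small experiment. This Python sketch uses the standard `timeit` module to compare membership tests against a list (a linear scan) and a set (a hash table). The workload is made up and absolute timings vary by machine, so only the relative difference is meaningful. For finding hotspots in a real program, Python’s built-in `cProfile` module is a common starting point.

```python
import random
import timeit

# Hypothetical workload: check 500 IDs against a collection of 5,000 known IDs.
known_ids = list(range(0, 10_000, 2))  # 5,000 even numbers
queries = [random.randrange(10_000) for _ in range(500)]

known_list = known_ids       # `in` on a list scans elements: O(n) per lookup
known_set = set(known_ids)   # `in` on a set hashes the key: O(1) on average


def count_hits_list() -> int:
    return sum(1 for q in queries if q in known_list)


def count_hits_set() -> int:
    return sum(1 for q in queries if q in known_set)


# Test first: an optimization is only valid if the answers are unchanged.
assert count_hits_list() == count_hits_set()

# Benchmark second: timeit calls each function `number` times and returns total seconds.
list_time = timeit.timeit(count_hits_list, number=5)
set_time = timeit.timeit(count_hits_set, number=5)
print(f"list: {list_time:.4f}s  set: {set_time:.4f}s")
```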

Utilizing Appropriate Data Structures

The choice of data structure directly influences an algorithm’s efficiency. Selecting the right structure is as crucial as the algorithm itself.

For example, consider searching for a specific element within a large dataset. A linear search through an unsorted array has a time complexity of O(n), meaning the time increases linearly with the number of elements. A balanced binary search tree reduces this to O(log n), and a hash table to O(1) on average, significantly improving search speed for large datasets. Similarly, the container a graph traversal uses determines its order: a queue produces breadth-first search, while a stack produces depth-first search.
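The queue-versus-stack point is easiest to see side by side. The two traversals in this Python sketch differ only in whether discovered nodes wait in a FIFO queue (`collections.deque`) or a LIFO stack (a plain list), and that single choice changes the visiting order. Both also use a set for the visited check, another instance of picking a hash table for fast lookups. The graph is a hypothetical example.

```python
from collections import deque
from typing import Dict, List

Graph = Dict[str, List[str]]


def bfs_order(graph: Graph, start: str) -> List[str]:
    """Breadth-first: a FIFO queue visits nodes level by level."""
    seen = {start}
    order: List[str] = []
    queue = deque([start])
    while queue:
        node = queue.popleft()  # oldest discovered node first
        order.append(node)
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return order


def dfs_order(graph: Graph, start: str) -> List[str]:
    """Depth-first: a LIFO stack follows one branch as deep as possible."""
    seen = set()
    order: List[str] = []
    stack = [start]
    while stack:
        node = stack.pop()  # newest discovered node first
        if node in seen:
            continue
        seen.add(node)
        order.append(node)
        # Push neighbors in reverse so they are explored in listed order.
        for neighbor in reversed(graph.get(node, [])):
            if neighbor not in seen:
                stack.append(neighbor)
    return order


tree: Graph = {"A": ["B", "C"], "B": ["D", "E"], "C": ["F"]}
print(bfs_order(tree, "A"))  # ['A', 'B', 'C', 'D', 'E', 'F']
print(dfs_order(tree, "A"))  # ['A', 'B', 'D', 'E', 'C', 'F']
```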

The Impact of Algorithms on Software Quality

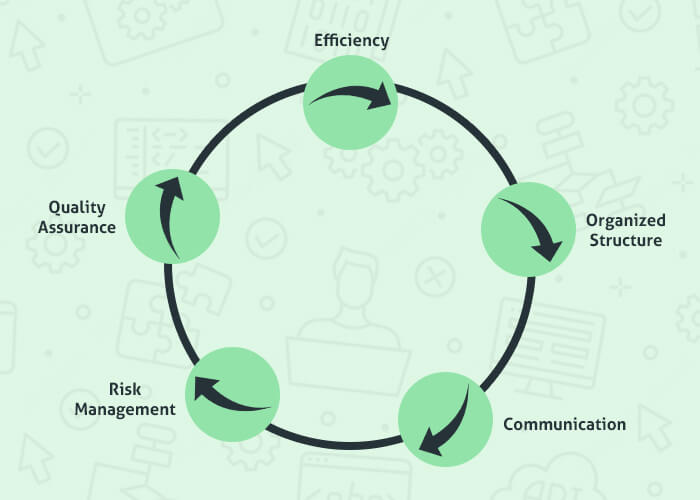

Source: prismetric.com

The choice of algorithm significantly impacts the overall quality of software, influencing everything from its maintainability and scalability to its reliability and security. A well-chosen algorithm can lead to efficient, robust, and secure software, while a poorly chosen one can result in a system plagued with bugs, performance issues, and security vulnerabilities. Understanding this crucial relationship is key to building high-quality software.

Algorithm choice directly affects software maintainability and scalability. A simple, elegant algorithm is generally easier to understand, modify, and extend over time. This translates to reduced maintenance costs and faster development cycles. Conversely, a complex or inefficient algorithm can become a nightmare to maintain, leading to increased development time and costs. Scalability is equally impacted; an algorithm that performs well with small datasets might struggle significantly when faced with larger ones, potentially leading to performance bottlenecks and system failures. Choosing algorithms designed for scalability from the outset is crucial for applications expected to handle large volumes of data or users.

Algorithm Correctness and Software Reliability

Algorithm correctness is intrinsically linked to software reliability. A correct algorithm produces the expected output for all valid inputs, contributing to a reliable and predictable software system. Bugs and unexpected behavior often stem from flaws in the algorithm’s logic or its failure to handle edge cases appropriately. Rigorous testing and verification are crucial for ensuring algorithm correctness and, consequently, improving software reliability. For instance, a banking application using an incorrect algorithm for calculating interest could lead to significant financial losses and erode user trust.
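Interest calculation is a classic place where an algorithm that looks right can be subtly wrong, for example because it stores money in binary floating-point numbers, which cannot represent values such as 0.1 exactly. The Python sketch below uses the standard `decimal` module instead. The function name, yearly compounding, rounding to the cent each year, and the banker’s-rounding mode are all assumptions for illustration; a real product defines these rules explicitly.

```python
from decimal import ROUND_HALF_EVEN, Decimal

CENT = Decimal("0.01")


def compound_balance(principal: str, annual_rate: str, years: int) -> Decimal:
    """Balance after `years` of annual compounding, rounded to the cent each year.

    Amounts are passed as strings so Decimal represents them exactly.
    """
    if years < 0:
        raise ValueError("years must be non-negative")
    balance = Decimal(principal)
    rate = Decimal(annual_rate)
    for _ in range(years):
        # Apply one year of interest, then round half-to-even to whole cents.
        balance = (balance * (1 + rate)).quantize(CENT, rounding=ROUND_HALF_EVEN)
    return balance


print(compound_balance("1000.00", "0.05", 2))  # 1102.50
```

Writing checks like the ones in the test below, with hand-computed expected balances, is the kind of verification the paragraph above calls for.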

Examples of Poorly Designed Algorithms and Their Consequences

Poorly designed algorithms can have devastating consequences. Consider a sorting algorithm with a time complexity of O(n²) used in a system processing millions of records: at one million records, a quadratic sort performs hundreds of billions of comparisons, making the system practically unusable. Security is another area. A flawed cryptographic algorithm, susceptible to attacks, could expose sensitive user data, leading to significant security breaches and legal repercussions. Implementation flaws can be just as damaging as design flaws: the infamous Heartbleed bug (2014) in the OpenSSL cryptographic library stemmed from a missing bounds check in its handling of TLS heartbeat messages. The code trusted a length field supplied by the sender, so an attacker could request more bytes than they had actually sent and receive up to 64 KB of server memory per request, potentially including private keys and passwords.
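A back-of-the-envelope count shows why quadratic growth hurts at this scale. With n = 10⁶ records, a quadratic sort such as Bubble Sort makes about n(n − 1)/2 comparisons in the worst case, while merge sort needs at most about n log₂ n:

```latex
\begin{aligned}
\text{Bubble Sort (worst case):}\quad & \frac{n(n-1)}{2} = \frac{10^6\,(10^6 - 1)}{2} = 499{,}999{,}500{,}000 \approx 5 \times 10^{11} \\
\text{Merge Sort (upper bound):}\quad & n \log_2 n \approx 10^6 \times 19.93 \approx 2 \times 10^{7}
\end{aligned}
```

That is a ratio of roughly 25,000 to 1. At an assumed rate of 10⁹ comparisons per second, it is the difference between about 500 seconds and about 0.02 seconds.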

Ensuring Algorithm Robustness and Handling Edge Cases

Robust algorithms are designed to handle unexpected inputs or situations gracefully. Techniques for ensuring robustness include input validation, error handling, and comprehensive testing. Input validation ensures that the algorithm only processes valid data, preventing unexpected behavior or crashes. Error handling involves implementing mechanisms to gracefully handle errors and exceptions, preventing the system from crashing. Comprehensive testing involves testing the algorithm with a wide range of inputs, including edge cases and boundary conditions, to identify and fix potential issues. This thorough approach minimizes the risk of unexpected failures and improves overall software quality. Furthermore, employing defensive programming techniques, such as assertions and exception handling, helps in building more robust and reliable software.
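Input validation is exactly what Heartbleed lacked. The Python sketch below parses a hypothetical length-prefixed message (a 2-byte big-endian length followed by the payload), loosely modeled on the pattern that bug mishandled but not the real TLS heartbeat format. It checks the sender’s declared length against the bytes that actually arrived, and it uses an exception so the caller can reject bad input gracefully instead of crashing.

```python
import struct


def parse_echo_request(message: bytes) -> bytes:
    """Return the payload of a hypothetical length-prefixed echo request.

    Format (illustrative only): a 2-byte big-endian length, then the payload.
    """
    if len(message) < 2:
        raise ValueError("message too short to contain a length field")
    (declared_length,) = struct.unpack(">H", message[:2])
    payload = message[2:]
    # Validate the sender's claim against what actually arrived. Copying
    # `declared_length` bytes without this check is the over-read pattern
    # behind Heartbleed.
    if declared_length != len(payload):
        raise ValueError(
            f"declared length {declared_length} does not match payload size {len(payload)}"
        )
    return payload


print(parse_echo_request(b"\x00\x04ping"))  # b'ping'

try:
    parse_echo_request(b"\xff\xffhi")  # claims 65,535 bytes but sends 2
except ValueError as err:
    print("rejected:", err)
```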

Future Trends in Algorithm Development


The world of algorithms is constantly evolving, driven by the insatiable need for faster, more efficient, and more intelligent software. New challenges in data processing, artificial intelligence, and quantum computing are pushing the boundaries of what’s possible, leading to exciting breakthroughs in algorithm design and implementation. This section explores some of the most significant trends shaping the future of this crucial field.

The rapid advancements in computing power and the explosion of big data are fueling innovation in algorithm design. We’re seeing a move towards more sophisticated techniques, including machine learning algorithms that can adapt and improve their performance over time, and distributed algorithms that can handle massive datasets across multiple processors. This shift isn’t just about speed; it’s about unlocking insights from data that were previously impossible to analyze.

Quantum Computing’s Impact on Algorithm Development

Quantum computing promises a revolutionary leap in computational power, potentially solving problems currently intractable for even the most powerful classical computers. Algorithms designed for quantum computers, known as quantum algorithms, leverage the principles of quantum mechanics (superposition and entanglement) to perform calculations in fundamentally different ways. For instance, Shor’s algorithm can factor large integers in polynomial time, far faster than any known classical method, so a sufficiently large, error-corrected quantum computer could break widely used public-key encryption such as RSA. This highlights both the potential and the challenges of the technology. Designing efficient quantum algorithms is a major area of research, with potential applications in drug discovery, materials science, and financial modeling. Robust, error-corrected quantum hardware is still in its early stages, but the potential impact on algorithm design is undeniable.

Algorithms and Big Data Analytics

The sheer volume, velocity, and variety of data generated today necessitate the development of advanced algorithms for efficient data processing and analysis. Big data analytics relies heavily on algorithms to extract meaningful insights from complex datasets. Algorithms are used for tasks such as data cleaning, feature extraction, model building, and prediction. For example, streaming algorithms, designed to process data in real-time, are crucial for applications like fraud detection and social media trend analysis. Similarly, graph algorithms are vital for analyzing social networks and identifying relationships within large datasets. The continued growth of big data will drive further innovation in algorithm design, focusing on scalability, efficiency, and the ability to handle diverse data types.
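A classic building block for streaming analysis is Welford’s online algorithm, which maintains a running mean and variance in one pass without storing past values. The sketch below pairs it with a simple z-score rule that flags unusually large transaction amounts as they arrive. The threshold, warm-up length, and data are hypothetical, and real fraud-detection systems use far richer models.

```python
import math
from typing import Iterable, Iterator


class RunningStats:
    """Welford's online algorithm: mean and variance in one pass, O(1) memory."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, value: float) -> None:
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (value - self.mean)  # uses the updated mean

    @property
    def std(self) -> float:
        """Sample standard deviation (0.0 until two values have been seen)."""
        return math.sqrt(self._m2 / (self.count - 1)) if self.count > 1 else 0.0


def flag_outliers(amounts: Iterable[float], threshold: float = 3.0, warmup: int = 5) -> Iterator[float]:
    """Yield amounts more than `threshold` standard deviations from the mean so far."""
    stats = RunningStats()
    for amount in amounts:
        if stats.count >= warmup and stats.std > 0:
            if abs(amount - stats.mean) / stats.std > threshold:
                yield amount
        stats.update(amount)  # each value is seen once and then discarded


stream = [20.0, 22.5, 19.0, 21.0, 23.5, 20.5, 950.0, 22.0]
print(list(flag_outliers(stream)))  # [950.0]
```

One design caveat: in this sketch a flagged value still updates the statistics, which inflates the running deviation afterward; a production detector might exclude or down-weight flagged values.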

Key Areas of Advancement in Algorithm Development (Next Five Years)

Several key areas are poised for significant advancement in algorithm development over the next five years. These advancements will shape the landscape of software development and impact numerous industries.

- Explainable AI (XAI): Developing algorithms that can provide transparent and understandable explanations for their decisions, increasing trust and accountability in AI systems. This is crucial for applications in healthcare, finance, and other high-stakes domains.

- Federated Learning: Enabling collaborative machine learning across decentralized datasets, improving model accuracy while preserving data privacy. This approach is particularly relevant in healthcare, where sharing sensitive patient data directly is often prohibited.

- Quantum Algorithm Optimization: Developing more efficient and robust quantum algorithms for specific applications, addressing challenges related to error correction and scalability. This will pave the way for practical applications of quantum computing.

- Graph Neural Networks (GNNs): Improving the capabilities of GNNs for handling large and complex graph data, enabling more effective analysis of social networks, knowledge graphs, and molecular structures. This could lead to breakthroughs in drug discovery and social science research.

- AutoML (Automated Machine Learning): Developing automated tools and techniques for designing, optimizing, and deploying machine learning models, making AI more accessible to a wider range of developers and users. This will accelerate the adoption of AI across various industries.

Outcome Summary

So, the next time you effortlessly navigate a website or marvel at the power of AI, remember the unsung heroes behind the scenes: algorithms. Their importance in modern software development can’t be overstated. Understanding their intricacies isn’t just for computer scientists; it’s key to appreciating the complex technology shaping our lives. From enhancing user experience to ensuring software reliability, algorithms are the backbone of the digital age, and their future looks brighter than ever.