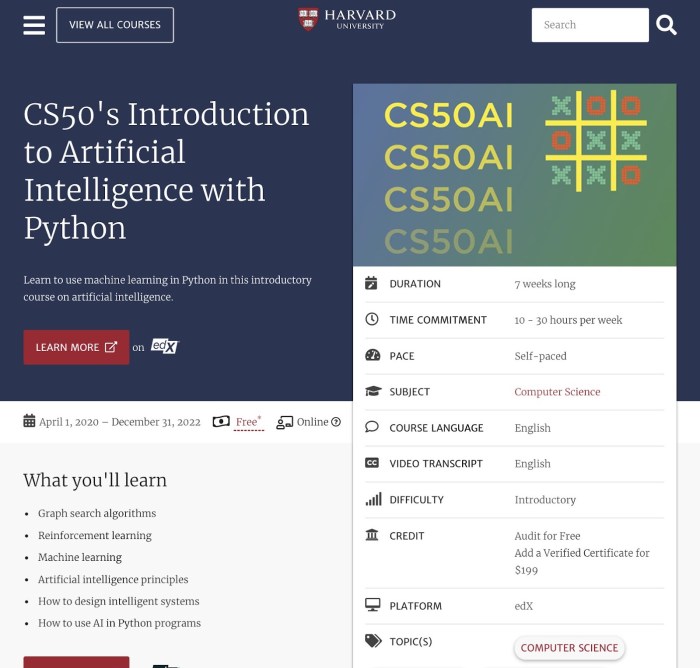

Introduction to Artificial Intelligence with Python: Dive into the fascinating world of AI! This course isn’t just about lines of code; it’s about unlocking the power to build intelligent systems. We’ll journey from setting up your Python environment to mastering machine learning algorithms, all while demystifying complex concepts with practical examples and real-world datasets. Get ready to build your AI future, one line of code at a time.

From understanding fundamental Python concepts crucial for AI programming to implementing machine learning algorithms using Scikit-learn, this course provides a comprehensive learning path. We’ll explore supervised, unsupervised, and reinforcement learning, tackle real-world datasets, and master data visualization techniques to interpret model performance effectively. Prepare to build practical projects and gain a solid foundation in this rapidly evolving field.

Course Overview

Ready to dive into the exciting world of Artificial Intelligence? This course provides a practical introduction to AI concepts and their implementation using Python, equipping you with the skills needed to navigate this rapidly evolving field. We’ll cover the fundamentals, build practical projects, and prepare you for a future where AI is increasingly integral to almost every industry.

This course is designed to be engaging and accessible, blending theoretical understanding with hands-on coding experience. Whether you’re a complete beginner or have some programming experience, you’ll find this course valuable in expanding your knowledge and building a strong foundation in AI.

Course Syllabus

The course is structured into several modules, each building upon the previous one. This progressive approach ensures a solid understanding of core concepts before tackling more advanced topics.

- Module 1: Introduction to AI and Python Fundamentals: This module sets the stage, introducing fundamental AI concepts and refreshing Python programming essentials. We’ll cover data types, control structures, and fundamental libraries like NumPy and Pandas, crucial for handling data in AI applications. Expect coding exercises to solidify your understanding.

- Module 2: Machine Learning Basics: We’ll delve into the core concepts of machine learning, exploring supervised, unsupervised, and reinforcement learning techniques. We’ll cover algorithms like linear regression, logistic regression, and k-means clustering, accompanied by practical implementations in Python.

- Module 3: Deep Learning Introduction: This module introduces the power of deep learning, focusing on neural networks. We’ll explore different neural network architectures, such as convolutional neural networks (CNNs) for image processing and recurrent neural networks (RNNs) for sequential data. Expect hands-on projects involving image classification and natural language processing.

- Module 4: AI Applications and Ethical Considerations: This module explores real-world applications of AI, examining case studies from various industries. We’ll also discuss the ethical implications of AI, fostering responsible and ethical development practices.

- Module 5: Capstone Project: You’ll apply your newly acquired skills to a comprehensive capstone project, allowing you to design and implement an AI solution to a real-world problem. This project will be a culmination of the knowledge and skills gained throughout the course.

Learning Objectives

Upon completion of this course, students will be able to:

- Understand fundamental AI concepts and their applications.

- Programmatically implement various machine learning and deep learning algorithms using Python.

- Analyze and interpret data for AI model development.

- Evaluate the performance of AI models and optimize their accuracy.

- Critically assess the ethical implications of AI technologies.

Prerequisites

Basic programming knowledge (preferably in Python) is recommended, but not strictly required. A willingness to learn and a curious mind are the most important prerequisites. We’ll provide supplemental resources to support those with limited prior programming experience.

Welcome Message

Welcome, future AI innovators! This course is your launchpad into the exciting world of artificial intelligence. You’ll gain practical skills, build a strong portfolio, and open doors to rewarding careers in data science, machine learning engineering, AI research, and many more emerging fields. The demand for AI professionals is skyrocketing, and this course will give you the edge you need to thrive in this dynamic landscape. Get ready to transform data into insights and build the future of AI!

Comparison of AI Approaches

This course will cover both classical machine learning and deep learning, two prominent approaches within AI. Machine learning involves algorithms that learn patterns from data rather than following hand-written rules, and classical methods often rely on feature engineering to represent the data effectively. Deep learning, a subfield of machine learning, uses artificial neural networks with multiple layers to learn complex patterns directly from data. Deep learning excels at large datasets and complex tasks such as image recognition and natural language processing, while classical machine learning is often more suitable for smaller datasets and simpler problems. This course will provide a comprehensive understanding of both approaches and their respective strengths, allowing you to choose the most appropriate technique for a given problem. For example, a spam filter might work well with a classical machine learning model, while a self-driving car relies heavily on deep learning for image recognition.

Setting up the Python Environment

So you’re ready to dive into the fascinating world of AI with Python? Awesome! Before we start building intelligent systems, we need to get our coding environment shipshape. This means installing Python itself, along with some essential libraries that will be our trusty sidekicks throughout this journey. Think of it as prepping your workshop before you start building your masterpiece.

Setting up your Python environment might seem daunting at first, but with a clear step-by-step guide, it’s surprisingly straightforward. We’ll cover installing Python, creating a virtual environment (super important!), and setting up Jupyter Notebook – your interactive coding playground.

Python Installation

Installing Python is usually a breeze. Head to the official Python website (python.org) and download the installer appropriate for your operating system (Windows, macOS, or Linux). During installation on Windows, make sure to check the box that adds Python to your system’s PATH. This allows you to run Python from your terminal or command prompt without navigating to its installation directory. After the installation completes, open your terminal or command prompt and type `python --version` to verify the installation (on macOS and Linux the command is often `python3 --version`). You should see the version number printed, confirming that Python is correctly installed.

Creating a Virtual Environment

Virtual environments are like isolated sandboxes for your projects. Why are they crucial? Because they prevent conflicts between different project dependencies. Imagine one project needing an older version of a library while another needs a newer one – virtual environments prevent these clashes. To create a virtual environment, use the `venv` module (built into Python 3.3+). Open your terminal, navigate to your project directory, and type `python -m venv .venv`. This creates a virtual environment named `.venv` in your current directory. To activate the environment, use the following commands depending on your operating system:

- Windows: `.venv\Scripts\activate`

- macOS/Linux: `source .venv/bin/activate`

Once activated, your terminal prompt will usually change to indicate that you’re working within the virtual environment. Now, any packages you install will only be installed within this environment, keeping your global Python installation clean and organized.

Installing Necessary Libraries

With your virtual environment activated, it’s time to install the essential libraries: NumPy, Pandas, and Scikit-learn. These are the power tools of the AI world. NumPy provides powerful numerical computation capabilities, Pandas excels at data manipulation and analysis, and Scikit-learn offers a wide array of machine learning algorithms. Use the `pip` package manager to install them:

`pip install numpy pandas scikit-learn`

After installation, you can verify by importing them in a Python interpreter: `import numpy; import pandas; import sklearn`. No errors? You’re good to go!
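A slightly more informative check is to print each library's version. This is a minimal sketch; the `versions` dictionary is just an illustrative name, and the numbers you see will depend on what `pip` installed.

```python
import numpy
import pandas
import sklearn

# Collect the installed version of each library. An ImportError above
# would mean the package is missing from the active environment.
versions = {
    "numpy": numpy.__version__,
    "pandas": pandas.__version__,
    "scikit-learn": sklearn.__version__,
}

for name, version in versions.items():
    print(f"{name}: {version}")
```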

Setting up Jupyter Notebook

Jupyter Notebook is an interactive coding environment that makes working with Python (and other languages) a joy. It allows you to combine code, text, and visualizations in a single document, making it ideal for exploring data, building models, and documenting your work. Install it using pip within your activated virtual environment:

`pip install jupyter`

To launch Jupyter Notebook, simply type `jupyter notebook` in your terminal. This will open a new tab in your web browser, displaying the Jupyter Notebook interface where you can create and manage your notebooks. You’ll be greeted by a clean interface, ready for your coding adventures.

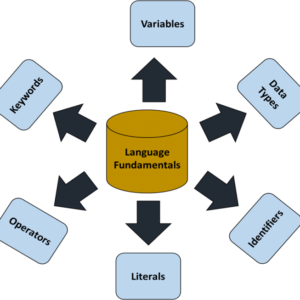

Fundamental Python for AI

Python’s versatility and readability make it a go-to language for artificial intelligence. Understanding its core concepts is crucial for building robust and efficient AI systems. This section dives into the essential Python elements you’ll need to master.

From the basics of data types and control flow to the power of object-oriented programming and the data manipulation capabilities of NumPy and Pandas, we’ll equip you with the fundamental building blocks for your AI journey. We’ll cover these elements with practical examples to solidify your understanding.

Data Types and Control Flow

Python offers a variety of built-in data types, including integers, floats, strings, booleans, and more complex structures like lists, tuples, and dictionaries. These data types are fundamental to representing and manipulating data within AI algorithms. Control flow mechanisms like conditional statements (if, elif, else) and loops (for, while) allow you to manage the execution of your code based on specific conditions and iterate over data structures. For example, a simple for loop can iterate through a list of numbers, performing calculations on each element. Similarly, conditional statements can direct the flow of execution based on whether a specific condition is true or false. This is essential for tasks such as data filtering or decision-making within AI models.
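As a small illustration of a loop combined with a conditional, the sketch below filters a list and computes an average. The `readings` values and the `threshold` are made up for the example.

```python
# Hypothetical sensor readings, used only for illustration
readings = [3, 12, 7, 25, 18]
threshold = 10

high_readings = []  # values that pass the filter
total = 0

for value in readings:
    total += value
    # Conditional statement: keep only values above the threshold
    if value > threshold:
        high_readings.append(value)

average = total / len(readings)

print(high_readings)
print(average)
```

The same filter-then-aggregate pattern shows up constantly in data preparation, although in practice it is usually expressed with NumPy or Pandas rather than explicit loops.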

Functions and Object-Oriented Programming

Functions are reusable blocks of code that perform specific tasks. They promote modularity and code reusability, which are vital for managing the complexity of AI projects. Object-oriented programming (OOP) provides a powerful framework for organizing code into objects, each with its own attributes (data) and methods (functions). OOP concepts like classes, inheritance, and polymorphism are essential for building complex and maintainable AI systems. For example, you could define a class to represent an image, with attributes like pixel data and methods for image processing.
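The image example can be sketched as follows. This is an illustrative toy, not a real imaging library: the `Image` class, its methods, and the `describe` function are all hypothetical names, and pixels are stored as plain nested lists of grayscale values.

```python
class Image:
    """A tiny grayscale image stored as a list of pixel rows (values 0-255)."""

    def __init__(self, pixels):
        self.pixels = pixels                      # attribute: the pixel data
        self.height = len(pixels)
        self.width = len(pixels[0]) if pixels else 0

    def invert(self):
        """Return a new Image with every pixel flipped to 255 - value."""
        return Image([[255 - p for p in row] for row in self.pixels])

    def mean_brightness(self):
        """Average pixel value across the whole image."""
        total = sum(sum(row) for row in self.pixels)
        return total / (self.width * self.height)


def describe(image):
    """A plain function that works with any Image instance."""
    return f"{image.width}x{image.height} image, mean brightness {image.mean_brightness()}"


img = Image([[0, 128], [255, 64]])
print(describe(img))
print(describe(img.invert()))
```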


NumPy for Array Manipulation

NumPy is a cornerstone library for numerical computing in Python. It provides powerful tools for creating and manipulating arrays, which are essential for representing data in many AI applications. NumPy’s functions allow for efficient element-wise operations, matrix manipulations, and linear algebra calculations – all critical components of many machine learning algorithms. For example, you can easily perform matrix multiplication or calculate the mean of an array using NumPy functions.
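Here is a brief sketch of the operations just mentioned, using two small made-up matrices. Note the difference between `*` (element-wise) and `@` (matrix multiplication).

```python
import numpy as np

a = np.array([[1, 2], [3, 4]])
b = np.array([[5, 6], [7, 8]])

elementwise = a * b          # multiplies matching entries: [[5, 12], [21, 32]]
product = a @ b              # matrix multiplication: [[19, 22], [43, 50]]
overall_mean = a.mean()      # mean of all entries: 2.5
column_means = a.mean(axis=0)  # mean of each column: [2.0, 3.0]

print(elementwise)
print(product)
print(overall_mean, column_means)
```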

Pandas for Data Cleaning and Analysis

Pandas is a crucial library for data manipulation and analysis. It provides data structures like DataFrames, which are essentially tables that allow for easy data organization, cleaning, and transformation. Pandas offers a rich set of functions for data cleaning, such as handling missing values, removing duplicates, and data type conversions. It also provides tools for data analysis, such as calculating summary statistics, grouping data, and merging datasets. This is particularly important for preparing data for machine learning models, ensuring data quality and consistency.

Data Manipulation Example with Pandas

Consider a dataset of customer information. We can use Pandas to clean and analyze this data. The table below shows a sample before and after cleaning and manipulation.

| CustomerID | Name | Age | PurchaseAmount |
|---|---|---|---|
| 1 | Alice | 30 | 100 |
| 2 | Bob | 25 | 50 |
| 3 | Charlie | 40 | 150 |
| 4 | David | 35 | NULL |

After cleaning (filling NULL values with the mean of PurchaseAmount and converting data types):

| CustomerID | Name | Age | PurchaseAmount |
|---|---|---|---|
| 1 | Alice | 30 | 100 |
| 2 | Bob | 25 | 50 |
| 3 | Charlie | 40 | 150 |
| 4 | David | 35 | 100 |
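One way to reproduce this cleaning step in Pandas is sketched below. It assumes the NULL entry is represented as `NaN` once the data is loaded, and the `customers` variable name is illustrative.

```python
import numpy as np
import pandas as pd

# The sample data, with the NULL purchase represented as NaN
customers = pd.DataFrame({
    "CustomerID": [1, 2, 3, 4],
    "Name": ["Alice", "Bob", "Charlie", "David"],
    "Age": [30, 25, 40, 35],
    "PurchaseAmount": [100, 50, 150, np.nan],
})

# mean() skips NaN by default, so this is (100 + 50 + 150) / 3 = 100.0
mean_purchase = customers["PurchaseAmount"].mean()

# Fill the gap with the mean, then convert the column back to integers
customers["PurchaseAmount"] = (
    customers["PurchaseAmount"].fillna(mean_purchase).astype(int)
)

print(customers)
```

Filling with the mean is only one option; the median or a model-based estimate may be more appropriate when the column contains outliers.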

Introduction to Machine Learning Concepts

Machine learning, a core component of AI, empowers computers to learn from data without explicit programming. Instead of relying on hard-coded rules, machine learning algorithms identify patterns, make predictions, and improve their performance over time based on the data they’re fed. This section explores the fundamental concepts of machine learning, focusing on its three primary types and key algorithm categories.

Supervised Learning

Supervised learning involves training a model on a labeled dataset – data where each instance is tagged with the correct answer. The model learns to map inputs to outputs based on these labeled examples. Think of it like teaching a child to identify animals: you show them pictures of cats and dogs, labeling each correctly. Eventually, the child learns to identify new cats and dogs based on the patterns they’ve learned. Examples include spam detection (email labeled as spam or not spam) and image classification (images labeled with the object they depict). The model learns to predict the correct label for new, unseen data.

Unsupervised Learning

Unlike supervised learning, unsupervised learning deals with unlabeled data. The algorithm’s task is to discover hidden patterns, structures, or groupings within the data without any prior knowledge of the correct answers. Imagine a social media algorithm clustering users with similar interests together based on their posts and interactions – it doesn’t know beforehand who belongs to which group, it simply identifies these groups based on shared characteristics. Other examples include anomaly detection (identifying unusual transactions in a financial dataset) and dimensionality reduction (reducing the number of variables in a dataset while retaining important information).
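To make the clustering idea concrete, here is a minimal sketch using Scikit-learn's `KMeans` on synthetic data. The two point clouds are generated at random purely for illustration; in a real application they would be features derived from user behavior.

```python
import numpy as np
from sklearn.cluster import KMeans

# Synthetic, unlabeled data: two well-separated clouds of 2-D points
rng = np.random.default_rng(0)
group_a = rng.normal(loc=[0, 0], scale=0.5, size=(20, 2))
group_b = rng.normal(loc=[5, 5], scale=0.5, size=(20, 2))
points = np.vstack([group_a, group_b])

# Ask k-means for two clusters; it never sees which cloud a point came from
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0)
labels = kmeans.fit_predict(points)

print(labels)
print(kmeans.cluster_centers_)
```

The cluster numbers themselves (0 or 1) are arbitrary; what matters is that points from the same cloud end up sharing a label.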

Reinforcement Learning

Reinforcement learning is all about learning through trial and error. An agent interacts with an environment, taking actions and receiving rewards or penalties based on its performance. The goal is to learn a policy – a strategy for selecting actions – that maximizes the cumulative reward over time. A classic example is a game-playing AI, such as AlphaGo, which learns to play Go by playing numerous games against itself and receiving rewards for winning and penalties for losing. The agent learns to improve its strategy over time based on the feedback it receives from the environment.
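The trial-and-error loop can be illustrated with a far simpler setting than Go: a three-armed bandit, where the agent repeatedly picks one of three slot-machine arms and learns which pays best. This is a swapped-in toy problem (no game states, no self-play), and the reward probabilities, `epsilon` value, and step count are all assumptions chosen for the sketch.

```python
import numpy as np

rng = np.random.default_rng(42)

# Environment: each arm pays a reward of 1 with a fixed probability
# that is hidden from the agent (values are made up for illustration)
true_reward_probs = np.array([0.2, 0.5, 0.8])
n_arms = len(true_reward_probs)

estimates = np.zeros(n_arms)         # agent's running estimate of each arm's value
pulls = np.zeros(n_arms, dtype=int)  # how often each arm has been tried
epsilon = 0.1                        # fraction of steps spent exploring at random

for step in range(5000):
    # Policy: usually exploit the best-looking arm, sometimes explore
    if rng.random() < epsilon:
        arm = int(rng.integers(n_arms))
    else:
        arm = int(np.argmax(estimates))

    # Environment feedback: reward of 1.0 or 0.0
    reward = float(rng.random() < true_reward_probs[arm])

    # Update the running average for the chosen arm
    pulls[arm] += 1
    estimates[arm] += (reward - estimates[arm]) / pulls[arm]

best_arm = int(np.argmax(estimates))
print(best_arm, estimates.round(2), pulls)
```

Over time the agent concentrates its pulls on the arm with the highest estimated reward, which mirrors the idea of learning a policy that maximizes cumulative reward.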

Classification vs. Regression

Classification and regression are two fundamental types of supervised learning problems. Classification involves predicting a categorical output, assigning data points to predefined classes. For example, classifying emails as spam or not spam is a classification task. In contrast, regression involves predicting a continuous output, such as predicting house prices based on size and location. The key difference lies in the nature of the output variable: categorical for classification and continuous for regression.

Machine Learning Algorithms

Several algorithms are used in machine learning, each with its strengths and weaknesses.

Linear Regression: This algorithm models the relationship between variables by fitting a linear equation to the data. It’s suitable for predicting continuous outputs where there’s a linear relationship between the input and output variables. For instance, predicting crop yield based on rainfall amount.

Logistic Regression: While the name suggests otherwise, logistic regression is a classification algorithm. It predicts the probability of a data point belonging to a particular class. It’s commonly used in binary classification problems, such as predicting whether a customer will click on an ad or not.

Decision Trees: Decision trees create a tree-like model to classify or regress data. They partition the data based on a series of decisions, creating branches that lead to different outcomes. They are easily interpretable, making them suitable for understanding the factors driving predictions. For example, a decision tree could predict customer churn based on factors like contract length, usage frequency, and customer service interactions.

Implementing Machine Learning Algorithms with Scikit-learn

Scikit-learn is a powerhouse Python library that simplifies the implementation of a wide range of machine learning algorithms. This section dives into practical applications, focusing on linear regression, logistic regression, and decision trees, demonstrating how to leverage Scikit-learn’s capabilities for real-world problem-solving. We’ll cover data preprocessing, model training, and evaluation, providing a solid foundation for your AI journey.

This section will cover the practical implementation of three key machine learning algorithms using Scikit-learn: linear regression for prediction, logistic regression for classification, and decision trees for both prediction and classification. We’ll walk through the process step-by-step, emphasizing the importance of data preparation and model evaluation.

Linear Regression with Scikit-learn

Linear regression is a fundamental supervised learning algorithm used for predicting a continuous target variable based on one or more predictor variables. Scikit-learn provides efficient tools for implementing and evaluating linear regression models. The process typically involves data preprocessing, model training using the `LinearRegression` class, and evaluating the model’s performance using metrics like Mean Squared Error (MSE) and R-squared.

For example, consider predicting house prices based on size (square footage). We would first load and clean our dataset, ensuring there are no missing values and that the data is appropriately scaled. Then, we would use `train_test_split` to divide the data into training and testing sets. The training set is used to fit a `LinearRegression` model, while the testing set is used to evaluate the model’s performance on unseen data. The model’s coefficients would reveal the relationship between house size and price. Finally, metrics like MSE and R-squared would quantify the accuracy of the predictions.
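The workflow just described might look like the sketch below. Because no real housing dataset is specified here, the data is synthetic: sizes are drawn at random and prices follow an assumed rule of 50,000 plus 150 per square foot, with noise. Scaling is skipped because there is only one feature.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import train_test_split

# Synthetic data (assumption): price = 50,000 + 150 * size + noise
rng = np.random.default_rng(0)
size_sqft = rng.uniform(500, 3500, size=200)
price = 50_000 + 150 * size_sqft + rng.normal(0, 20_000, size=200)

X = size_sqft.reshape(-1, 1)  # scikit-learn expects a 2-D feature array
X_train, X_test, y_train, y_test = train_test_split(
    X, price, test_size=0.2, random_state=42
)

model = LinearRegression()
model.fit(X_train, y_train)
y_pred = model.predict(X_test)

mse = mean_squared_error(y_test, y_pred)
r2 = r2_score(y_test, y_pred)

print(f"Price per extra square foot: {model.coef_[0]:.1f}")
print(f"Intercept: {model.intercept_:.0f}")
print(f"MSE: {mse:.0f}, R-squared: {r2:.3f}")
```

The fitted coefficient should land close to the 150 used to generate the data, which is a handy sanity check that is only possible with synthetic data.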

Logistic Regression with Scikit-learn

Logistic regression is a powerful classification algorithm used to predict the probability of a categorical dependent variable. Unlike linear regression, which predicts a continuous value, logistic regression predicts the probability of an event occurring. This probability is then used to classify the data point into one of the categories. Scikit-learn’s `LogisticRegression` class simplifies this process. The steps involved include data preprocessing, model training using the `LogisticRegression` class, and model evaluation using metrics like accuracy, precision, recall, and F1-score.

Imagine building a model to predict customer churn (whether a customer will cancel their subscription). We would collect data on customer usage, demographics, and past behavior. After preprocessing, we would train a `LogisticRegression` model on a training dataset, and then evaluate its performance on a separate testing dataset using metrics like accuracy and the area under the ROC curve (AUC). A high AUC indicates that the model can effectively distinguish between customers who will churn and those who will not.
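A minimal sketch of that churn workflow follows. The data is entirely synthetic: the two features (`monthly_usage_hours`, `support_tickets`) and the rule that generates churn labels are assumptions made up for illustration.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, roc_auc_score
from sklearn.model_selection import train_test_split

# Synthetic customers (assumption): heavy users churn less,
# customers with many support tickets churn more
rng = np.random.default_rng(1)
n = 500
monthly_usage_hours = rng.uniform(0, 40, size=n)
support_tickets = rng.poisson(2, size=n)
logit = 1.0 - 0.15 * monthly_usage_hours + 0.6 * support_tickets
churn_prob = 1 / (1 + np.exp(-logit))
churned = (rng.random(n) < churn_prob).astype(int)

X = np.column_stack([monthly_usage_hours, support_tickets])
X_train, X_test, y_train, y_test = train_test_split(
    X, churned, test_size=0.3, random_state=42, stratify=churned
)

clf = LogisticRegression(max_iter=1000)
clf.fit(X_train, y_train)

# predict_proba returns one column per class; column 1 is P(churn)
churn_scores = clf.predict_proba(X_test)[:, 1]
accuracy = accuracy_score(y_test, clf.predict(X_test))
auc = roc_auc_score(y_test, churn_scores)

print(f"Accuracy: {accuracy:.3f}")
print(f"AUC: {auc:.3f}")
```

Note that AUC is computed from the predicted probabilities, not from the hard 0/1 predictions, because it measures how well the model ranks churners above non-churners.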

Decision Tree for Real-World Problem Solving

Decision trees are versatile algorithms capable of both classification and regression tasks. They create a tree-like model of decisions and their possible consequences, making them easily interpretable. We will use Scikit-learn’s `DecisionTreeClassifier` or `DecisionTreeRegressor` (depending on the task) and Matplotlib for visualization.

Let’s design a project to predict customer satisfaction based on various factors like product quality, customer service, and price.

- Data Collection and Preprocessing: Gather data on customer satisfaction ratings and relevant factors. Clean and preprocess the data, handling missing values and transforming categorical variables as needed.

- Model Training: Train a `DecisionTreeClassifier` model using the preprocessed data. Experiment with different hyperparameters to optimize performance.

- Model Evaluation: Evaluate the model’s performance using metrics appropriate for classification, such as accuracy, precision, and recall.

- Visualization with Matplotlib: Use Matplotlib to visualize the decision tree. This visualization will show the decision path leading to different satisfaction levels, making the model’s logic transparent.

- Interpretation and Insights: Analyze the visualized tree to understand the key factors influencing customer satisfaction. This could reveal areas for improvement in product development or customer service.

A visual representation of the decision tree, created using Matplotlib, would display nodes representing decisions based on features (e.g., product quality rating above a certain threshold), branches representing the decision outcomes, and leaf nodes representing the predicted customer satisfaction level (e.g., high, medium, low). The visualization would clearly illustrate the model’s decision-making process.
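The project steps above can be sketched end to end as follows. Everything about the data is an assumption: ratings are random integers from 1 to 5, satisfaction is simplified to two classes instead of three, and the labels come from a made-up rule so the tree has something learnable.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend for scripts; omit in Jupyter
import matplotlib.pyplot as plt
import numpy as np
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier, plot_tree

# Synthetic survey data (assumption): three ratings on a 1-5 scale
rng = np.random.default_rng(7)
n = 300
quality = rng.integers(1, 6, size=n)
service = rng.integers(1, 6, size=n)
price = rng.integers(1, 6, size=n)

# Made-up labelling rule: satisfied if quality is high,
# or if service is high and the price rating is acceptable
satisfied = ((quality >= 4) | ((service >= 4) & (price >= 3))).astype(int)

feature_names = ["product_quality", "customer_service", "price"]
X = np.column_stack([quality, service, price])
X_train, X_test, y_train, y_test = train_test_split(
    X, satisfied, test_size=0.3, random_state=42
)

# A shallow tree keeps the diagram readable
tree = DecisionTreeClassifier(max_depth=3, random_state=0)
tree.fit(X_train, y_train)
accuracy = accuracy_score(y_test, tree.predict(X_test))
print(f"Accuracy: {accuracy:.3f}")

# Draw the fitted tree: each node shows its split rule and class counts
fig, ax = plt.subplots(figsize=(12, 6))
plot_tree(
    tree,
    feature_names=feature_names,
    class_names=["unsatisfied", "satisfied"],
    filled=True,
    ax=ax,
)
```

In a notebook the figure renders inline; in a script, `fig.savefig(...)` writes it to a file. `max_depth` is the hyperparameter most worth experimenting with first, since deeper trees fit the training data more closely but are harder to read.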

Data Visualization and Interpretation


Data visualization is the unsung hero of machine learning. While algorithms crunch numbers, visualizations bring those numbers to life, revealing patterns, trends, and anomalies that might otherwise remain hidden. Understanding your data visually is crucial for building effective models, identifying potential biases, and communicating your findings effectively. This section will explore key visualization techniques and how to interpret the resulting insights.

Visualizing Data with Matplotlib

Matplotlib is a powerful Python library for creating static, interactive, and animated visualizations. It offers a wide range of plot types, making it adaptable to various data analysis tasks. By visualizing your data before and after model training, you gain crucial insights into your dataset’s characteristics and your model’s performance.

Scatter plots are ideal for exploring relationships between two continuous variables. For instance, you might plot a scatter plot of housing prices (y-axis) against house size (x-axis) to see if a positive correlation exists. A histogram provides a visual representation of the distribution of a single continuous variable, showing the frequency of data points within specified ranges. Imagine visualizing the distribution of ages in a customer dataset using a histogram to understand the age demographics. Bar charts, on the other hand, are best for visualizing categorical data or comparing the means of different groups. For example, a bar chart could compare the average income of different customer segments.
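The three plot types can be drawn side by side with a few lines of Matplotlib. The sketch below uses randomly generated stand-in data; the segment names and income figures are invented for illustration.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend for scripts; omit in Jupyter
import matplotlib.pyplot as plt
import numpy as np

# Stand-in data (all values are made up)
rng = np.random.default_rng(3)
house_size = rng.uniform(500, 3500, 100)
house_price = 50_000 + 150 * house_size + rng.normal(0, 30_000, 100)
ages = rng.normal(40, 12, 300)
segments = ["Basic", "Plus", "Premium"]
avg_income = [42_000, 58_000, 91_000]

fig, axes = plt.subplots(1, 3, figsize=(15, 4))

# Scatter plot: relationship between two continuous variables
axes[0].scatter(house_size, house_price, alpha=0.6)
axes[0].set_xlabel("House size (sq ft)")
axes[0].set_ylabel("Price")
axes[0].set_title("Price vs. size")

# Histogram: distribution of one continuous variable
axes[1].hist(ages, bins=15)
axes[1].set_xlabel("Age")
axes[1].set_ylabel("Number of customers")
axes[1].set_title("Customer ages")

# Bar chart: one value per category
axes[2].bar(segments, avg_income)
axes[2].set_xlabel("Customer segment")
axes[2].set_ylabel("Average income")
axes[2].set_title("Income by segment")

fig.tight_layout()
```

In a notebook the figure appears automatically; in a script, call `fig.savefig(...)` to write it to disk.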

Interpreting Model Performance Metrics

Evaluating a machine learning model’s performance is critical. Several metrics provide different perspectives on how well your model is performing. Understanding these metrics and how to interpret them is essential for choosing the best model for a given task.

| Metric | Description | Interpretation |
|---|---|---|
| Accuracy | The ratio of correctly predicted instances to the total number of instances. | High accuracy suggests a good overall performance. However, it can be misleading in imbalanced datasets. |
| Precision | The ratio of true positives to the sum of true positives and false positives. | Measures the accuracy of positive predictions. A high precision indicates that when the model predicts a positive outcome, it’s likely to be correct. |
| Recall (Sensitivity) | The ratio of true positives to the sum of true positives and false negatives. | Measures the model’s ability to identify all positive instances. High recall means the model is good at finding all relevant cases. |
| F1-Score | The harmonic mean of precision and recall. | Provides a balanced measure considering both precision and recall. A high F1-score indicates a good balance between precision and recall. |

For example, consider a spam detection model. High precision means the model rarely classifies non-spam emails as spam (reducing false positives), while high recall means the model successfully identifies most spam emails (reducing false negatives). The F1-score balances these two considerations, providing a holistic view of the model’s performance. Choosing the most important metric depends on the specific application; a medical diagnosis model might prioritize recall to avoid missing any positive cases, whereas a spam filter might prioritize precision to minimize false positives.
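To see how these metrics come out of raw predictions, here is a small worked sketch with ten hypothetical emails (1 = spam, 0 = not spam). The labels are invented so that the counts are easy to verify by hand: 3 true positives, 1 false positive, 1 false negative, and 5 true negatives.

```python
from sklearn.metrics import (
    accuracy_score,
    confusion_matrix,
    f1_score,
    precision_score,
    recall_score,
)

# Hypothetical ground truth and model predictions for ten emails
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

# For binary labels, ravel() yields the counts in this order
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()

accuracy = accuracy_score(y_true, y_pred)    # (tp + tn) / total = 8 / 10
precision = precision_score(y_true, y_pred)  # tp / (tp + fp) = 3 / 4
recall = recall_score(y_true, y_pred)        # tp / (tp + fn) = 3 / 4
f1 = f1_score(y_true, y_pred)                # harmonic mean of the two

print(tn, fp, fn, tp)
print(accuracy, precision, recall, f1)
```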

Working with Real-World Datasets

Diving headfirst into the world of AI often means grappling with real-world datasets. These aren’t neat, perfectly organized spreadsheets; they’re messy, complex collections of information that mirror the real-world problems we’re trying to solve. This section will guide you through the process of working with a real dataset, from loading and cleaning to building a predictive model. We’ll use the Iris dataset, a classic in machine learning, to illustrate these key steps.

The Iris dataset is a fantastic starting point for beginners. It’s relatively small, easy to understand, and provides a solid foundation for learning data manipulation and model building techniques. It contains measurements of 150 iris flowers, with 50 flowers from each of three species: setosa, versicolor, and virginica. Each flower is described by four features: sepal length, sepal width, petal length, and petal width, all measured in centimeters. This simplicity makes it perfect for demonstrating core concepts without getting bogged down in complexities.

Dataset Loading and Preprocessing

This section details the process of loading the Iris dataset using Python’s popular scikit-learn library and preparing it for analysis. The dataset is readily available within scikit-learn, simplifying the loading process significantly. We’ll then handle any potential data cleaning needs, though the Iris dataset is relatively clean.

First, we import the necessary libraries: `pandas` for data manipulation and `sklearn.datasets` for accessing the Iris dataset. Then, we load the dataset using `load_iris()`. The function returns a dictionary-like object containing the data, target variable (species), and feature names. We place the measurements in a pandas DataFrame and add the target as a `species` column for easier manipulation and exploration. Note that `species` holds the integer codes 0, 1, and 2; the matching names are in `iris.target_names`. Finally, we can optionally check for missing values or outliers, although for Iris such steps are not typically necessary.

```python
import pandas as pd
from sklearn.datasets import load_iris

# Load the bundled Iris dataset (a dictionary-like object)
iris = load_iris()

# Put the four measurements into a DataFrame and append the species label
iris_df = pd.DataFrame(data=iris.data, columns=iris.feature_names)
iris_df['species'] = iris.target

# Check for missing values (optional for Iris, usually needed for other datasets):
# print(iris_df.isnull().sum())
```

Exploratory Data Analysis

Before building a model, it’s crucial to explore the dataset. This involves understanding the distribution of the data, identifying relationships between features, and gaining insights that can inform model selection. We can use descriptive statistics, visualizations, and correlation analysis to achieve this.

We can calculate summary statistics using the `describe()` method in pandas. This provides measures like mean, standard deviation, and percentiles for each feature. Histograms and scatter plots can visually represent the distribution of individual features and relationships between pairs of features. A correlation matrix shows the linear relationships between all pairs of features, helping identify potential redundancies or strong relationships.

```python
import matplotlib.pyplot as plt

# Summary statistics (iris_df comes from the loading step)
print(iris_df.describe())

# Histograms of every numeric column
iris_df.hist(figsize=(10, 8))
plt.show()

# Scatter plots and correlation matrix (requires additional libraries like matplotlib and seaborn)
# … (code for visualization would go here)
```
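One possible way to produce the correlation matrix and a scatter plot is sketched below, using only pandas and Matplotlib rather than seaborn. It reloads the dataset so that it runs on its own; the choice of the two petal features for the scatter plot is just an example.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend for scripts; omit in Jupyter
import matplotlib.pyplot as plt
import pandas as pd
from sklearn.datasets import load_iris

iris = load_iris()
iris_df = pd.DataFrame(data=iris.data, columns=iris.feature_names)
iris_df["species"] = iris.target

# Pairwise linear correlations between the four measurements
corr = iris_df[iris.feature_names].corr()
print(corr.round(2))

# Scatter plot of two features, colored by species code
fig, ax = plt.subplots(figsize=(6, 5))
ax.scatter(
    iris_df["petal length (cm)"],
    iris_df["petal width (cm)"],
    c=iris_df["species"],
)
ax.set_xlabel("petal length (cm)")
ax.set_ylabel("petal width (cm)")
ax.set_title("Petal measurements by species")
```

Petal length and petal width turn out to be very strongly correlated, which suggests the two features carry overlapping information.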

Model Training and Evaluation

With the data loaded and explored, we can now build a machine learning model to predict the species of iris based on its sepal and petal measurements. We’ll use a simple Support Vector Machine (SVM) classifier for this example.

First, we split the dataset into training and testing sets using `train_test_split` from scikit-learn. Then, we initialize an SVM classifier, train it on the training data using `fit()`, and make predictions on the test data using `predict()`. Finally, we evaluate the model’s performance using metrics like accuracy, precision, and recall, which are calculated using functions from `sklearn.metrics`.

```python
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC
from sklearn.metrics import accuracy_score, classification_report

# Features are the four measurements; the target is the species label
X = iris_df.drop('species', axis=1)
y = iris_df['species']

# Hold out 30% of the rows for testing
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Train the classifier and predict on the held-out rows
svm = SVC()
svm.fit(X_train, y_train)
y_pred = svm.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)
report = classification_report(y_test, y_pred)

print(f"Accuracy: {accuracy}")
print(f"Classification Report:\n{report}")
```

This process demonstrates a typical workflow for working with real-world datasets in machine learning. Remember, this is a simplified example, and real-world datasets often require more extensive preprocessing and model tuning.

Model Evaluation and Improvement

Building a machine learning model is only half the battle; understanding its performance and refining it for optimal results is equally crucial. This section delves into the essential techniques for evaluating your model’s accuracy and strategies to boost its predictive power. We’ll explore various methods, focusing on practical applications and avoiding overly theoretical discussions.

Model evaluation isn’t just about getting a high accuracy score; it’s about understanding how well your model generalizes to unseen data and identifying potential areas for improvement. Choosing the right evaluation metrics is key, and we’ll cover the most common ones and when to use them. Improving model performance involves a multi-faceted approach, ranging from refining the input data to tweaking the model’s internal parameters.

Cross-Validation Techniques

Cross-validation is a resampling procedure for estimating how well a model generalizes and for detecting overfitting. It splits the dataset into multiple subsets (folds), trains the model on some folds, and tests it on the remaining fold. The process repeats so that each fold serves as the test set once, and the results are averaged. In k-fold cross-validation, k is the number of folds; 5 and 10 are common choices because they balance computational cost against the reliability of the estimate. Because every observation is used for testing exactly once, the averaged score is a more reliable indication of performance on new, unseen data than the score from a single train-test split. For example, when training a model to predict customer churn, 5-fold cross-validation averages performance across five different test subsets, so the estimate depends less on which rows happened to land in the test set.
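As a rough illustration of the idea, the sketch below runs 5-fold cross-validation on the Iris data. It loads the data with scikit-learn's `load_iris` rather than the `iris_df` used earlier, and the choice of `StratifiedKFold` with shuffling and a fixed seed is an assumption made here for reproducibility, not a requirement of the technique.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.svm import SVC

# Load the Iris features and labels directly from scikit-learn
X, y = load_iris(return_X_y=True)

# Five stratified folds: each fold keeps roughly the same class proportions
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)

# A fresh SVC is trained on four folds and scored on the fifth, five times
scores = cross_val_score(SVC(), X, y, cv=cv, scoring="accuracy")

print(f"Fold accuracies: {scores}")
print(f"Mean accuracy: {scores.mean():.3f} (std {scores.std():.3f})")
```

The spread of the per-fold scores is worth reading alongside the mean: a large standard deviation suggests the estimate is sensitive to how the data is split.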

Feature Engineering Strategies

Feature engineering involves transforming or selecting relevant features from the raw data to improve model performance. This often means creating new features from existing ones, removing irrelevant features, or handling missing values effectively. For example, instead of using raw age data, you might create new features like “age group” (e.g., young adult, middle-aged, senior) or “age squared” to capture non-linear relationships. Similarly, one-hot encoding can transform categorical variables into numerical representations suitable for many algorithms. Consider a model predicting house prices. Instead of using only the size of the house, you could engineer ratio features like “size per bedroom” or “size per bathroom,” or derive “distance to the nearest school” from location data, to capture more nuanced relationships with price. Careful feature engineering can significantly affect a model’s accuracy and interpretability.
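The transformations described above can be sketched with pandas. The DataFrame, its column names, and the age bin boundaries below are all hypothetical, made up purely for illustration.

```python
import pandas as pd

# Hypothetical toy data; column names and values are invented for this example
houses = pd.DataFrame({
    "size_sqft": [1200, 2400, 900],
    "bedrooms": [2, 4, 1],
    "owner_age": [28, 47, 71],
    "neighborhood": ["north", "south", "north"],
})

# Ratio feature: size per bedroom
houses["size_per_bedroom"] = houses["size_sqft"] / houses["bedrooms"]

# Polynomial feature: lets a linear model capture a curved relationship
houses["owner_age_squared"] = houses["owner_age"] ** 2

# Binned feature: convert a numeric age into an age group (bin edges are assumptions)
houses["age_group"] = pd.cut(
    houses["owner_age"],
    bins=[0, 35, 60, 120],
    labels=["young adult", "middle-aged", "senior"],
)

# One-hot encode the categorical columns into indicator columns
encoded = pd.get_dummies(houses, columns=["neighborhood", "age_group"])

print(encoded.head())
```

Whether any of these features actually helps depends on the dataset, so each one is best checked against a validation score rather than assumed useful.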

Hyperparameter Tuning Methods

Hyperparameters are settings that control the learning process of a machine learning model. They are not learned from the data but are set before training. Grid search and random search are two common ways to choose them. Grid search tries every combination from a specified grid of candidate values, while random search samples a fixed number of combinations at random. For example, when training a support vector machine (SVM), hyperparameters like the C parameter (the inverse of regularization strength, so a smaller C means stronger regularization) and the kernel type need to be tuned. Either search method lets you explore combinations of these parameters and keep the one that performs best on a validation set or under cross-validation. Bayesian optimization is a more advanced technique that uses a probabilistic model of past results to decide which combination to try next, often finding good settings in fewer evaluations than grid or random search.
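A minimal grid search sketch for the SVM case might look like the following. It uses scikit-learn's `GridSearchCV` on the Iris data loaded via `load_iris`; the candidate values in `param_grid` are arbitrary choices for illustration, not recommended defaults.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Keep a test set aside so the tuned model is judged on data the search never saw
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

# Candidate values (assumed for illustration): 3 values of C x 2 kernels = 6 combinations
param_grid = {"C": [0.1, 1, 10], "kernel": ["linear", "rbf"]}

# Each combination is scored with 5-fold cross-validation on the training set
search = GridSearchCV(SVC(), param_grid, cv=5, scoring="accuracy")
search.fit(X_train, y_train)

print(f"Best parameters: {search.best_params_}")
print(f"Best cross-validated accuracy: {search.best_score_:.3f}")
print(f"Held-out test accuracy: {search.score(X_test, y_test):.3f}")
```

Swapping `GridSearchCV` for `RandomizedSearchCV` (with an `n_iter` budget) gives the random search variant with otherwise similar code.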

Selecting Appropriate Evaluation Metrics

The choice of evaluation metric depends heavily on the type of machine learning problem. For classification problems, common metrics include accuracy, precision, recall, F1-score, and the area under the ROC curve (AUC). For regression problems, common metrics include mean squared error (MSE), root mean squared error (RMSE), mean absolute error (MAE), and R-squared. Accuracy alone can mislead when classes are imbalanced: in a fraud detection system (a classification problem), a model that never flags fraud can still score high accuracy, so you might prioritize recall (minimizing false negatives) to ensure that most fraudulent transactions are identified, even at the cost of some false positives. In contrast, for predicting house prices (a regression problem), RMSE might be a suitable metric because it expresses the typical prediction error in the same units as the target variable while penalizing large errors more heavily than MAE does. Choosing the right metric is crucial for evaluating the model’s performance in the context of the specific problem.
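To make the metrics concrete, here is a small sketch that computes them with `sklearn.metrics` on hand-written labels and predictions. All the numbers are invented for illustration (1 stands for "fraud" in the classification part, and prices are in arbitrary units); they do not come from a real model.

```python
import numpy as np
from sklearn.metrics import (
    accuracy_score, precision_score, recall_score, f1_score,
    mean_absolute_error, mean_squared_error, r2_score,
)

# Classification: made-up true labels and predictions (1 = fraud, 0 = legitimate)
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 1, 1, 0, 0, 0]

accuracy = accuracy_score(y_true, y_pred)
precision = precision_score(y_true, y_pred)  # of predicted frauds, how many were real
recall = recall_score(y_true, y_pred)        # of real frauds, how many were caught
f1 = f1_score(y_true, y_pred)                # harmonic mean of precision and recall

print(f"Accuracy: {accuracy:.2f}, Precision: {precision:.2f}, "
      f"Recall: {recall:.2f}, F1: {f1:.2f}")

# Regression: made-up true and predicted house prices
price_true = [200, 300, 400]
price_pred = [210, 290, 430]

mae = mean_absolute_error(price_true, price_pred)
rmse = np.sqrt(mean_squared_error(price_true, price_pred))  # same units as the prices
r2 = r2_score(price_true, price_pred)

print(f"MAE: {mae:.2f}, RMSE: {rmse:.2f}, R-squared: {r2:.3f}")
```

In the classification part, accuracy looks respectable while recall shows that half of the fraud cases were missed, which is the kind of gap the choice of metric is meant to expose.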

Conclusion

So, you’ve conquered the basics of Python, delved into the intricacies of machine learning algorithms, and even wrestled with real-world datasets. You’ve built models, visualized results, and honed your skills in interpreting those crucial performance metrics. Now, armed with this newfound knowledge, you’re ready to tackle the ever-expanding landscape of AI. This isn’t just the end of a course; it’s the launchpad for your AI journey. Keep learning, keep building, and keep innovating!