How to Write Efficient Code: Best Practices for Developers – sounds boring, right? Wrong! This isn’t your grandpa’s coding manual. We’re diving deep into the nitty-gritty of crafting code that’s not just functional, but downright *sleek*. Think lightning-fast execution, minimal memory hogging, and code so clean, it’ll make your IDE sing. Get ready to level up your coding game and write code that’s as efficient as it is elegant.

From understanding the nuances of data structures and algorithms to mastering code optimization techniques and debugging like a pro, we’ll cover it all. We’ll explore practical examples, demystify complex concepts, and equip you with the best practices to write code that’s not only efficient but also maintainable and readable. Prepare for a journey into the heart of efficient coding – it’s going to be epic.

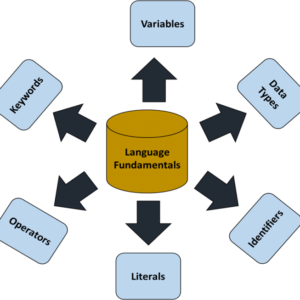

Understanding Code Efficiency

Writing efficient code isn’t just about making your programs run faster; it’s about crafting software that’s robust, maintainable, and resource-friendly. Efficiency encompasses several key aspects, all contributing to a better overall developer experience and a more performant application. Ignoring efficiency can lead to slow, resource-intensive applications that are difficult to scale and maintain.

Code efficiency boils down to three main pillars: speed, memory usage, and readability. Speed refers to how quickly your code executes. Memory usage focuses on how much RAM your program consumes during execution. Readability, often overlooked, is crucial for maintainability and collaboration. Clean, well-structured code is easier to understand, debug, and modify, saving time and frustration in the long run. A balance between these three aspects is key; prioritizing one excessively might negatively impact the others. For instance, code that is aggressively optimized for speed can become complex and hard to read.

Inefficient and Efficient Code Examples in Python

Let’s illustrate with Python examples. Consider a scenario where you need to find the sum of numbers in a list.

A less efficient approach uses an explicit loop that indexes into the list on every iteration:

```python
def inefficient_sum(numbers):
    total = 0
    for i in range(len(numbers)):
        total += numbers[i]
    return total
```

This version performs an index lookup on every iteration, and all of that work runs as interpreted Python bytecode. A more efficient solution leverages Python’s built-in `sum()` function:

```python
def efficient_sum(numbers):
    return sum(numbers)
```

Both versions are O(n), but `sum()` runs its loop in C rather than in interpreted bytecode, so it is typically several times faster, and the savings grow with the size of the list. This demonstrates how built-in functions can substantially improve code efficiency.
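To check the claim on your own machine, a quick benchmark with the standard-library `timeit` module is a reasonable sketch. The list size and repetition count below are arbitrary choices, and absolute timings will vary with hardware and Python version.

```python
import timeit


def inefficient_sum(numbers):
    total = 0
    for i in range(len(numbers)):
        total += numbers[i]
    return total


def efficient_sum(numbers):
    return sum(numbers)


numbers = list(range(100_000))  # arbitrary test size

# Both functions must agree before their speed is worth comparing.
assert inefficient_sum(numbers) == efficient_sum(numbers)

# Run each function 20 times and report the total wall-clock time.
loop_seconds = timeit.timeit(lambda: inefficient_sum(numbers), number=20)
builtin_seconds = timeit.timeit(lambda: efficient_sum(numbers), number=20)
print(f"index loop: {loop_seconds:.3f}s  sum(): {builtin_seconds:.3f}s")
```

Measuring correctness first and speed second is a habit worth keeping for every optimization.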

Best Practices for Concise and Maintainable Code

Writing concise and maintainable code is paramount for long-term project success. Several best practices contribute to this goal:

Employing meaningful variable and function names enhances readability. Avoid cryptic abbreviations or single-letter variables unless their purpose is immediately clear within a very limited scope. Well-structured code, using appropriate indentation and whitespace, improves readability significantly. Comments should clarify complex logic, not restate the obvious. Modularizing your code into smaller, reusable functions promotes organization and reduces redundancy. Regular code reviews with colleagues offer valuable feedback and identify potential inefficiencies.

Time Complexities of Common Algorithms

Understanding algorithmic time complexity is vital for writing efficient code. The table below shows the time complexities of common algorithms, expressed using Big O notation. Big O notation describes an upper bound on how an algorithm’s runtime grows as the input size grows; the table applies it separately to the best, average, and worst cases.

| Algorithm | Best Case | Average Case | Worst Case |
|---|---|---|---|
| Linear Search | O(1) | O(n) | O(n) |
| Binary Search | O(1) | O(log n) | O(log n) |
| Bubble Sort | O(n) (with an early-exit check) | O(n^2) | O(n^2) |
| Merge Sort | O(n log n) | O(n log n) | O(n log n) |

For example, a linear search has a worst-case time complexity of O(n), meaning the runtime grows linearly with the input size. In contrast, a binary search, applicable only to sorted data, boasts a much better time complexity of O(log n), making it significantly faster for large datasets. Choosing the right algorithm significantly impacts the overall efficiency of your code.
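The contrast between the two searches shows up clearly in code. The sketch below is a minimal, illustrative implementation of each: linear search checks every element in turn, while binary search halves the remaining range on each step, which is only valid because the input is sorted.

```python
def linear_search(items, target):
    """Return the index of target, or -1. Works on unsorted data; O(n)."""
    for index, value in enumerate(items):
        if value == target:
            return index
    return -1


def binary_search(sorted_items, target):
    """Return the index of target, or -1. Requires sorted input; O(log n)."""
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            low = mid + 1   # target can only be in the upper half
        else:
            high = mid - 1  # target can only be in the lower half
    return -1


even_numbers = list(range(0, 100, 2))
print(linear_search(even_numbers, 42), binary_search(even_numbers, 42))
```

In real Python code, the standard-library `bisect` module provides tested binary-search helpers such as `bisect_left`.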

Data Structures and Algorithms

Choosing the right data structures and algorithms is crucial for writing efficient code. The performance of your application, especially as data scales, hinges heavily on these fundamental building blocks. A poorly chosen structure can lead to slowdowns and bottlenecks, while a well-selected one can significantly boost efficiency. This section explores the impact of these choices and provides insights into optimizing code performance.

The Impact of Data Structures on Code Efficiency

The efficiency of your code is directly tied to how well your chosen data structure supports the operations you need to perform. For example, if you frequently need to access elements by their index, an array is a highly efficient choice because accessing elements by index is a constant-time operation (O(1)). However, if you frequently need to insert or delete elements in the middle of the sequence, a linked list might be more efficient: once you hold a reference to the neighboring node, an insertion or deletion takes constant time because no other elements have to shift, even though accessing elements by index takes linear time (O(n)). The optimal choice always depends on the specific needs of your application. Understanding the time and space complexities of different data structures is key to making informed decisions.
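A minimal singly linked list makes the trade-off concrete. The `Node` class and helper functions below are hypothetical, written only for illustration: inserting after a known node is two pointer updates, while reaching position *i* requires walking *i* nodes from the head.

```python
class Node:
    """A singly linked list node (hypothetical minimal class)."""

    def __init__(self, value, next_node=None):
        self.value = value
        self.next = next_node


def insert_after(node, value):
    """Insert value right after node in O(1): no other element moves."""
    node.next = Node(value, node.next)
    return node.next


def get_at(head, index):
    """Return the value at index in O(n): the list is walked from the head."""
    current = head
    for _ in range(index):
        if current is None:
            raise IndexError("index out of range")
        current = current.next
    if current is None:
        raise IndexError("index out of range")
    return current.value


def to_list(head):
    """Collect the node values into a Python list."""
    values = []
    while head is not None:
        values.append(head.value)
        head = head.next
    return values


# Build 1 -> 2 -> 4, then splice 3 in after the node holding 2.
head = Node(1, Node(2, Node(4)))
insert_after(head.next, 3)
print(to_list(head))  # [1, 2, 3, 4]
```

By contrast, `list.insert(i, x)` on a Python list shifts every element after position `i`.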

Performance Characteristics of Common Data Structures

Arrays provide fast access to elements using their index, but inserting or deleting elements in the middle can be slow. Linked lists excel at insertions and deletions, but accessing elements by index is slow. Hash tables offer average-case constant-time complexity for insertion, deletion, and search operations, making them ideal for scenarios requiring quick lookups, like dictionaries or symbol tables. Trees, on the other hand, offer a balance between the strengths of arrays and linked lists, providing efficient search, insertion, and deletion in many cases, particularly when the tree is kept balanced (as in a balanced binary search tree, which guarantees O(log n) operations). The choice between these depends on the frequency of various operations and the size of the data. For instance, a small dataset might see minimal performance differences, while a large dataset would show dramatic differences. Consider a phone book: finding a number in an unsorted array means scanning the entries one by one, while a hash table keyed by name finds it in average constant time.
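The phone book comparison can be sketched with a plain list of pairs versus a Python `dict`, which is a hash table. The names and numbers below are made-up sample data.

```python
# Hypothetical sample data: (name, number) pairs.
phone_book_entries = [
    ("Ada", "555-0100"),
    ("Grace", "555-0199"),
    ("Linus", "555-0142"),
]


def lookup_linear(entries, name):
    """Scan every entry until the name matches: O(n)."""
    for entry_name, number in entries:
        if entry_name == name:
            return number
    return None


# A dict hashes each name once at build time...
phone_book = dict(phone_book_entries)


def lookup_hashed(book, name):
    """...so each lookup is O(1) on average."""
    return book.get(name)


print(lookup_linear(phone_book_entries, "Grace"))
print(lookup_hashed(phone_book, "Grace"))
```

With three entries the difference is invisible; with millions of entries and many lookups, the hashed version avoids a full scan on every query.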

Utilizing Algorithms for Code Optimization

Algorithms are sets of instructions that solve specific problems. Choosing the right algorithm can dramatically improve code efficiency. For instance, a simple linear search through an unsorted array has a time complexity of O(n), meaning the time it takes increases linearly with the number of elements. However, if the array is sorted, a binary search can achieve a time complexity of O(log n), significantly faster for large datasets. This illustrates the importance of selecting algorithms that are well-suited to the data and the operations being performed. Efficient algorithms often exploit the structure of the data to minimize the number of operations required.

Common Algorithm Design Patterns

Selecting appropriate algorithm design patterns is crucial for creating efficient and maintainable code. Understanding these patterns allows for a more strategic approach to problem-solving, resulting in improved performance.

- Divide and Conquer: This approach breaks down a problem into smaller, self-similar subproblems, solves them recursively, and then combines the solutions. Examples include merge sort and quicksort.

- Dynamic Programming: This technique solves a problem by breaking it down into smaller overlapping subproblems, solving each subproblem only once, and storing their solutions to avoid redundant computations. Examples include the Fibonacci sequence calculation and the knapsack problem.

- Greedy Algorithms: These algorithms make locally optimal choices at each step, hoping to find a global optimum. Examples include Dijkstra’s algorithm for finding the shortest path in a graph and Huffman coding for data compression.

- Backtracking: This approach explores all possible solutions by systematically trying different options and undoing choices if they lead to a dead end. Examples include the N-Queens problem and finding all paths in a maze.

- Branch and Bound: This technique systematically explores the search space, pruning branches that are guaranteed not to lead to a better solution than the one already found. It’s often used in optimization problems.
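As one concrete instance of these patterns, here is a sketch of dynamic programming applied to the 0/1 knapsack problem mentioned above. It uses the common bottom-up table formulation; the item weights and values in the usage line are arbitrary sample data.

```python
def knapsack(weights, values, capacity):
    """Maximum total value that fits in capacity, using each item at most once."""
    # best[c] = best value achievable with capacity c using the items seen so far.
    best = [0] * (capacity + 1)
    for weight, value in zip(weights, values):
        # Walk capacities downward so each item is counted at most once.
        for c in range(capacity, weight - 1, -1):
            best[c] = max(best[c], best[c - weight] + value)
    return best[capacity]


print(knapsack([1, 3, 4, 5], [1, 4, 5, 7], 7))  # 9 (items weighing 3 and 4)
```

Each subproblem `best[c]` is computed once and reused, which is what turns an exponential search over item subsets into O(items × capacity) work.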

Code Optimization Techniques

Writing efficient code isn’t just about making your program run; it’s about making it run *fast* and *smoothly*. This section dives into practical techniques to identify and squash those pesky performance bottlenecks, transforming your code from a sluggish snail to a cheetah on caffeine. We’ll explore how to pinpoint problem areas, optimize loops, manage memory effectively, and leverage profiling tools to keep your code running lean and mean.

Optimizing your code is a journey, not a destination. It’s an iterative process of identifying performance bottlenecks, implementing improvements, and then measuring the impact. This continuous cycle ensures your code remains efficient even as it grows in complexity.

Identifying and Addressing Code Bottlenecks

Pinpointing performance bottlenecks involves a blend of intuition, experience, and the use of profiling tools. Common bottlenecks often reside within loops that iterate over large datasets or complex recursive functions. Inefficient algorithms also contribute significantly to slow performance. Addressing these issues might involve selecting more efficient algorithms (like switching from a bubble sort to a quicksort for large datasets), optimizing data structures to reduce search times, or employing techniques like memoization to cache results of expensive computations.
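Memoization is easy to try in Python with `functools.lru_cache`, which caches a function’s results keyed by its arguments. The Fibonacci functions below are a standard illustration rather than a real bottleneck: the naive version recomputes the same values an exponential number of times, while the cached version computes each value once.

```python
from functools import lru_cache


def fib_naive(n):
    """Exponential time: fib_naive(n - 2) is recomputed inside fib_naive(n - 1)."""
    if n < 2:
        return n
    return fib_naive(n - 1) + fib_naive(n - 2)


@lru_cache(maxsize=None)  # unbounded cache: every distinct n is stored
def fib_memo(n):
    """Linear time: each fib_memo(k) is computed once, then served from cache."""
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)


print(fib_naive(25), fib_memo(80))
print(fib_memo.cache_info())  # hits, misses, and current cache size
```

Memoization only pays off for pure functions whose arguments repeat, and an unbounded cache trades memory for speed, so `maxsize` is worth setting for long-running programs.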

Optimizing Loops and Reducing Redundant Calculations

Loops are frequently the culprits behind slow code. Consider a loop iterating through a million records; even minor inefficiencies become amplified. Optimizing loops involves minimizing the operations performed within each iteration. For example, calculations that can be done outside the loop should be moved out. Redundant calculations should be eliminated by storing results and reusing them when necessary. Using more efficient data structures can drastically reduce the number of iterations required. For instance, using a hash table for lookups instead of iterating through a list can provide a significant speed boost.
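Both loop fixes described here, hoisting a loop-invariant calculation and replacing a list scan with a set lookup, fit in one small sketch. The order records, field names, and the 100-unit threshold are hypothetical.

```python
def count_qualifying_slow(orders, allowed_ids, tax_rate):
    count = 0
    for order in orders:
        multiplier = 1 + tax_rate  # recomputed on every iteration
        # `in` on a list scans it: O(len(allowed_ids)) per order
        if order["customer_id"] in allowed_ids and order["amount"] * multiplier > 100:
            count += 1
    return count


def count_qualifying_fast(orders, allowed_ids, tax_rate):
    multiplier = 1 + tax_rate    # loop-invariant: computed once
    allowed = set(allowed_ids)   # built once; O(1) average membership tests
    return sum(
        1
        for order in orders
        if order["customer_id"] in allowed and order["amount"] * multiplier > 100
    )


orders = [
    {"customer_id": 1, "amount": 95},
    {"customer_id": 2, "amount": 200},
    {"customer_id": 3, "amount": 150},
]
print(count_qualifying_fast(orders, [1, 3], 0.1))
```

With n orders and m allowed IDs, the slow version does O(n × m) comparisons in the worst case, while the fast version does O(n + m) work.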

Memory Management and Minimizing Memory Usage

Memory management is crucial for efficient code. Excessive memory consumption can lead to slowdowns, crashes, or even system instability. Strategies for minimizing memory usage include using appropriate data structures (for example, Python’s `array.array` stores numbers as packed machine values and is more compact than a `list` of number objects), avoiding unnecessary object creation, and employing techniques like object pooling to reuse objects instead of repeatedly allocating and deallocating them. Garbage collection is your friend here; understanding how it works in your programming language can help you write more memory-efficient code. Many managed runtimes, such as the JVM and .NET, use generational garbage collection, which collects recently allocated objects more frequently because most objects are short-lived.
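One everyday way to avoid unnecessary object creation in Python is to stream values with a generator instead of materializing a full list. The sketch below compares the container sizes with `sys.getsizeof`; note that for the list this counts only the list’s own pointer array, not the integer objects it references, so the real gap is larger.

```python
import sys


def squares_list(n):
    """Builds all n results in memory at once."""
    return [i * i for i in range(n)]


def squares_lazy(n):
    """Produces results one at a time; nothing is stored up front."""
    return (i * i for i in range(n))


n = 1_000_000
print("list:", sys.getsizeof(squares_list(n)), "bytes")
print("generator:", sys.getsizeof(squares_lazy(n)), "bytes")

# Consumers like sum() work the same way with either form.
print(sum(squares_lazy(n)) == sum(squares_list(n)))
```

A generator can only be iterated once, so a list is still the right choice when the data must be traversed several times.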

Using Profiling Tools to Identify Performance Issues

Profiling tools are invaluable for identifying performance bottlenecks. These tools monitor your code’s execution, providing detailed information about the time spent in different parts of your program. By identifying the functions or code sections that consume the most time, you can focus your optimization efforts on the areas that will yield the greatest improvements. Many programming languages and Integrated Development Environments (IDEs) offer built-in profiling tools, or you can use standalone profilers. For example, Python’s `cProfile` module allows you to analyze the performance of your Python code, providing insights into the call counts and execution times of various functions. Analyzing this data allows you to identify which functions or code segments are consuming the most processing time and should be optimized first.
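A minimal `cProfile` session, with the report captured through `pstats`, might look like the sketch below. The workload functions are hypothetical stand-ins; in practice you would profile your own entry point.

```python
import cProfile
import io
import pstats


def slow_square_sum(n):
    total = 0
    for i in range(n):
        total += i * i
    return total


def fast_square_sum(n):
    return sum(i * i for i in range(n))


def workload():
    # Hypothetical workload mixing both implementations.
    return slow_square_sum(200_000) + fast_square_sum(200_000)


def profile_report(func, top=10):
    """Run func under cProfile; return its result and a text report."""
    profiler = cProfile.Profile()
    profiler.enable()
    result = func()
    profiler.disable()
    stream = io.StringIO()
    stats = pstats.Stats(profiler, stream=stream)
    # Sort by cumulative time (time in a function plus everything it calls).
    stats.sort_stats("cumulative").print_stats(top)
    return result, stream.getvalue()


if __name__ == "__main__":
    result, report = profile_report(workload)
    print(report)
```

For a whole script, `python -m cProfile -s cumulative your_script.py` gives a similar report without any code changes.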

Coding Style and Readability

Writing efficient code isn’t just about speed; it’s about clarity. Clean, readable code is easier to understand, debug, maintain, and collaborate on. This leads to reduced development time, fewer errors, and a more enjoyable coding experience. Think of it as writing a well-structured essay – you wouldn’t just throw sentences together randomly, would you? The same principle applies to code.

Readability directly impacts maintainability. Imagine trying to decipher code written years ago, by someone else, with no comments or consistent style. It’s a nightmare! Conversely, well-structured, well-documented code is a joy to work with, making future modifications and updates a breeze. This translates to cost savings and improved project timelines in the long run.

Consistent Coding Style and Code Linters

Maintaining a consistent coding style across a project is crucial for readability. This involves adhering to a set of rules regarding indentation, naming conventions, spacing, and other formatting aspects. Inconsistency makes code harder to follow and understand, hindering collaboration and increasing the risk of errors. Code linters are invaluable tools that automatically enforce these style guidelines, identifying inconsistencies and suggesting improvements. Popular linters include Pylint for Python, ESLint for JavaScript, and RuboCop for Ruby. These tools act as automated code reviewers, helping maintain a consistent and high-quality codebase. They help catch potential issues early, preventing larger problems later.

Comments and Meaningful Variable Names

Comments and well-chosen variable names are essential for code understanding. Comments explain the “why” behind the code, providing context and clarifying complex logic. They are especially useful when revisiting code after a period of time or when working on a project with multiple developers. Avoid overly verbose or redundant comments; focus on explaining non-obvious sections or complex algorithms. Meaningful variable names should clearly indicate the purpose of the variable. Instead of using vague names like `x` or `temp`, opt for descriptive names such as `userName` or `totalAmount`. This enhances readability and makes it easier to grasp the flow of the code. Think of it like using labels in a spreadsheet – it’s much easier to understand what the data represents.
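The difference is easiest to see side by side. Both functions below do the same thing; the `Order` type, its fields, and the free-shipping rule are hypothetical, invented for the example.

```python
from typing import NamedTuple


class Order(NamedTuple):
    order_id: str
    total: float


# Cryptic: what are x, t, and i[1]?
def f(x, t):
    return [i for i in x if i[1] > t]


# Descriptive names state intent; the comment explains *why*, not *what*.
def orders_qualifying_for_free_shipping(orders, minimum_total):
    # Strictly greater than: under this (hypothetical) business rule,
    # an order exactly at the minimum does not qualify.
    return [order for order in orders if order.total > minimum_total]


orders = [Order("A-100", 45.0), Order("A-101", 50.0), Order("A-102", 72.5)]
print(orders_qualifying_for_free_shipping(orders, 50.0))
```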

Coding Style Guidelines

The following table summarizes common coding style guidelines across different programming languages. Adhering to these conventions promotes consistency and improves code readability.

| Aspect | Python (PEP 8) | JavaScript (Airbnb Style Guide) | Java (Google Java Style Guide) |
|---|---|---|---|
| Indentation | 4 spaces | 2 spaces | 2 spaces |
| Line Length | 79 characters | 100 characters | 100 characters |
| Naming Conventions | snake_case for functions and variables; CapWords for classes | camelCase for functions and variables; PascalCase for classes | lowerCamelCase for methods and variables; UpperCamelCase for classes |
| Comments | Docstrings for public modules, functions, classes, and methods | `/** ... */` for multiline comments | Javadoc for documentation |

Testing and Debugging Efficient Code

Writing efficient code isn’t just about clever algorithms; it’s about building robust, reliable systems. Thorough testing is the cornerstone of this reliability, ensuring your optimized code performs as expected under various conditions and doesn’t introduce unforeseen bottlenecks. Debugging, the process of identifying and fixing errors, becomes crucial when performance issues arise, allowing you to refine your code for optimal efficiency.

The Importance of Thorough Testing in Ensuring Code Efficiency

Testing isn’t an afterthought; it’s an integral part of the development lifecycle. Comprehensive testing helps uncover performance bottlenecks early, preventing costly fixes later. By systematically testing different aspects of your code, you can identify areas where optimization is needed and verify that your optimizations actually improve performance. Failing to test adequately can lead to unexpected behavior in production, impacting user experience and potentially causing significant problems. For instance, a seemingly optimized sorting algorithm might fail dramatically when dealing with exceptionally large datasets if not thoroughly tested with diverse input sizes.
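One practical way to follow this advice is to check an optimized implementation against a trusted reference across many input shapes and sizes. In the sketch below, `merge_sort` plays the role of the "optimized" code and Python’s built-in `sorted()` is the reference; the particular cases and sizes are arbitrary choices.

```python
import random


def merge_sort(items):
    """Divide-and-conquer sort; returns a new sorted list."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left, right = merge_sort(items[:mid]), merge_sort(items[mid:])
    merged, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:  # <= keeps equal elements in order (stable)
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


def check_against_reference(sort_fn, seed=0):
    """Compare sort_fn to sorted() on edge cases and random inputs of several sizes."""
    rng = random.Random(seed)  # fixed seed so failures are reproducible
    cases = [[], [1], [2, 1], [5, 5, 5], list(range(1000)), list(range(1000, 0, -1))]
    for size in (10, 100, 10_000):
        cases.append([rng.randint(-1000, 1000) for _ in range(size)])
    for case in cases:
        assert sort_fn(case) == sorted(case), f"mismatch on input of size {len(case)}"
    return len(cases)


print(check_against_reference(merge_sort), "cases passed")
```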

Testing Methodologies: Unit and Integration Testing

Two primary testing methodologies are crucial for ensuring code efficiency: unit testing and integration testing. Unit testing focuses on individual components or modules of your code, verifying that each part functions correctly in isolation. This allows for quick identification of problems within specific units. Integration testing, on the other hand, tests the interaction between different modules or components, ensuring they work together seamlessly and efficiently. Imagine building a car – unit testing would involve testing the engine, brakes, and steering wheel separately, while integration testing would check if all these parts work together harmoniously.
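In Python, the standard-library `unittest` module can express both levels. In the sketch below, the order-parsing functions and the CSV-like format are hypothetical: the unit tests exercise `parse_line` and `line_total` in isolation, while the integration test checks that `order_total` wires them together correctly.

```python
import unittest


def parse_line(line):
    """Parse 'name,quantity,unit_price' into a typed tuple."""
    name, quantity, unit_price = line.split(",")
    return name.strip(), int(quantity), float(unit_price)


def line_total(quantity, unit_price):
    return round(quantity * unit_price, 2)


def order_total(csv_text):
    """Combine parsing and pricing for every line of an order."""
    total = 0.0
    for line in csv_text.strip().splitlines():
        _, quantity, unit_price = parse_line(line)
        total += line_total(quantity, unit_price)
    return round(total, 2)


class UnitTests(unittest.TestCase):
    def test_parse_line(self):
        self.assertEqual(parse_line("widget, 3, 2.50"), ("widget", 3, 2.5))

    def test_line_total(self):
        self.assertEqual(line_total(3, 2.5), 7.5)


class IntegrationTests(unittest.TestCase):
    def test_order_total(self):
        self.assertEqual(order_total("widget,3,2.50\ngadget,1,10.00\n"), 17.5)
```

Saved as a module, these tests run with `python -m unittest <module_name>`.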

Debugging Techniques for Identifying and Resolving Performance Issues

Debugging inefficient code requires a systematic approach. Profiling tools are invaluable for pinpointing performance bottlenecks. These tools analyze your code’s execution, identifying sections consuming excessive resources (CPU time, memory). Log files can provide valuable insights into the program’s behavior, helping you track down the source of errors. Using a debugger, a tool that allows you to step through your code line by line, helps you observe variable values and identify unexpected behavior. Furthermore, employing code review practices, where other developers examine your code, can catch errors and suggest optimizations that you might have missed. For example, a profiler might reveal that a specific database query is taking an unusually long time, prompting you to optimize the query or database schema.
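Logging how long a suspect step takes is often the quickest way to confirm a hunch before reaching for a full profiler. The sketch below wraps a block in a timing context manager built from the standard `logging`, `time`, and `contextlib` modules; `load_report_rows` is a hypothetical stand-in for a slow database query.

```python
import logging
import time
from contextlib import contextmanager

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
logger = logging.getLogger("perf")

timings = {}  # label -> most recent duration in seconds


@contextmanager
def timed(label):
    """Log (and record) how long the wrapped block takes, even if it raises."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        timings[label] = elapsed
        logger.info("%s took %.4f s", label, elapsed)


def load_report_rows():
    # Hypothetical stand-in for a slow query.
    time.sleep(0.05)
    return [("row", i) for i in range(3)]


if __name__ == "__main__":
    with timed("load_report_rows"):
        rows = load_report_rows()
```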

Debugging Inefficient Code: A Flowchart

Imagine a flowchart with the following steps:

1. Identify Performance Issue: Start with observing slowdowns, high resource consumption, or unexpected behavior.

2. Reproduce the Issue: Create a consistent set of steps to reproduce the problem reliably.

3. Gather Data: Utilize profiling tools and log files to collect data on resource usage and program execution.

4. Isolate the Problem: Analyze the collected data to pinpoint the specific code section causing the performance issue.

5. Develop and Test Solutions: Implement potential fixes and rigorously test them to verify their effectiveness.

6. Deploy and Monitor: Integrate the solution into the system and monitor performance to ensure the issue is resolved and no new problems are introduced.

7. Document Findings: Record the problem, solution, and any relevant learnings to prevent similar issues in the future.

This systematic approach allows for efficient debugging and code improvement.

Advanced Optimization Strategies

Writing efficient code isn’t just about avoiding obvious inefficiencies; it’s about mastering advanced techniques that significantly boost performance, especially when dealing with large datasets or complex applications. This section delves into strategies that go beyond the basics, helping you create truly optimized software. We’ll explore caching, parallel processing, and when optimization might not be the best approach.

Caching Mechanisms

Caching is a powerful technique that stores frequently accessed data in a readily available location, reducing the need to repeatedly fetch it from slower sources like databases or remote servers. Imagine a website’s homepage: instead of querying the database every time a user visits, the page content can be cached. This drastically reduces server load and improves response times. Different caching strategies exist, including in-memory caching (using structures like dictionaries or hash maps), disk caching (for persistent storage), and distributed caching (across multiple servers). The choice depends on factors like data size, access frequency, and the application’s architecture. Effective caching also needs an invalidation strategy to keep cached data consistent with its source, such as expiring entries after a fixed time-to-live or clearing them when the underlying data changes. Separately, an eviction policy decides what to drop when the cache is full; a Least Recently Used (LRU) policy, for example, evicts the entries that have gone the longest without being accessed.
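A small in-memory LRU cache can be sketched with `collections.OrderedDict`, which keeps keys in order and can move a key to the end in O(1). The `LRUCache` class below is a hypothetical illustration, with an explicit `invalidate` method standing in for whatever invalidation rule your data needs.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._data = OrderedDict()  # oldest entry first, newest last

    def get(self, key, default=None):
        if key not in self._data:
            return default
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used

    def invalidate(self, key):
        """Drop an entry whose source data has changed."""
        self._data.pop(key, None)


cache = LRUCache(capacity=2)
cache.put("home", "<html>home</html>")
cache.put("about", "<html>about</html>")
cache.get("home")                      # "home" is now the most recent
cache.put("blog", "<html>blog</html>")  # evicts "about"
print(cache.get("about"))              # None
```

For memoizing function results, Python’s built-in `functools.lru_cache` decorator implements the same eviction policy without a hand-written class.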

Parallel and Concurrent Programming

Modern multi-core processors offer the potential for massive performance gains through parallel and concurrent programming. Parallel programming involves breaking down a task into independent subtasks that can be executed simultaneously on different cores. Concurrent programming, on the other hand, focuses on managing multiple tasks that might share resources, even if they aren’t fully independent. Techniques like threading and multiprocessing are key here. Threading allows multiple tasks to run seemingly at the same time within a single process, while multiprocessing creates separate processes, each with its own memory space. The choice between threading and multiprocessing depends on the nature of the task and the potential for resource contention. For CPU-bound tasks (heavy calculations), multiprocessing often provides better performance, while for I/O-bound tasks (waiting for network requests), threading can be more efficient. In the standard CPython build, one reason is the global interpreter lock (GIL): only one thread executes Python bytecode at a time, but a thread releases the lock while it waits on I/O. Python’s `multiprocessing` and `threading` modules provide tools for implementing these techniques.
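Both strategies can be sketched with the standard-library `concurrent.futures` module, which offers a common interface over threads and processes. Here `fetch` simulates a network call with `time.sleep`, and `sum_of_squares` stands in for CPU-heavy work; both are hypothetical placeholders, and the worker counts are arbitrary.

```python
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def fetch(resource):
    # Hypothetical stand-in for a network call: the thread mostly waits.
    time.sleep(0.1)
    return f"{resource}: ok"


def fetch_all_threaded(resources, workers=8):
    """I/O-bound: threads overlap their waiting time."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fetch, resources))  # results keep input order


def sum_of_squares(limit):
    # CPU-bound: pure computation, no waiting.
    return sum(i * i for i in range(limit))


def sum_of_squares_in_processes(limits, workers=4):
    """CPU-bound: separate processes can run on separate cores."""
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(sum_of_squares, limits))


# The __main__ guard is required for ProcessPoolExecutor on platforms that
# start workers by re-importing this module (e.g. Windows and macOS).
if __name__ == "__main__":
    start = time.perf_counter()
    print(fetch_all_threaded(["users", "orders", "invoices", "products"]))
    print(f"threaded I/O took {time.perf_counter() - start:.2f}s")
    print(sum_of_squares_in_processes([200_000] * 4))
```

Four sequential `fetch` calls would take about 0.4 seconds; with the thread pool, they wait concurrently.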

Situations Where Optimization May Be Unnecessary

Optimization isn’t always the answer. Premature optimization can lead to complex, less readable, and harder-to-maintain code. If a program is already performing adequately, investing significant time and effort into optimization might not yield significant returns. Prioritizing readability and maintainability is often more beneficial in the long run, especially for smaller projects or less performance-critical parts of a larger system. Focus your optimization efforts on the parts of the code that are identified as bottlenecks through profiling and performance analysis. A well-structured and clean codebase is usually easier to optimize later if needed.

Advanced Optimization Techniques

Understanding the advantages and disadvantages of various advanced techniques is crucial for making informed decisions about optimization strategies.

- Profiling and Benchmarking: Identifying performance bottlenecks through systematic measurement. Advantages: Pinpoints areas needing optimization. Disadvantages: Can be time-consuming to set up and interpret results.

- Vectorization: Performing operations on entire arrays or vectors simultaneously using specialized hardware instructions. Advantages: Significant speed improvements for numerical computations. Disadvantages: Requires understanding of vectorization capabilities of the hardware and programming language.

- Just-in-Time (JIT) Compilation: Compiling code during runtime, allowing for dynamic optimization based on program behavior. Advantages: Can greatly outperform interpretation, and because it observes the running program, it can specialize frequently executed code paths. Disadvantages: Adds warm-up time, runtime overhead, and complexity.

- Asynchronous Programming: Handling multiple tasks concurrently without blocking the main thread. Advantages: Improves responsiveness and efficiency for I/O-bound operations. Disadvantages: Can make code more complex to manage.
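Asynchronous programming in Python is built on the standard-library `asyncio` module. In the sketch below, `fake_request` is a hypothetical stand-in for a network call that uses `asyncio.sleep`, which yields control instead of blocking, so five half-second waits overlap instead of running one after another.

```python
import asyncio
import time


async def fake_request(name, delay):
    # Hypothetical I/O call: awaiting sleep lets other tasks run meanwhile.
    await asyncio.sleep(delay)
    return f"{name}: done"


async def main():
    start = time.perf_counter()
    # gather runs the coroutines concurrently and returns results in order.
    results = await asyncio.gather(
        *(fake_request(f"req{i}", 0.1) for i in range(5))
    )
    elapsed = time.perf_counter() - start
    return results, elapsed


if __name__ == "__main__":
    results, elapsed = asyncio.run(main())
    print(results)
    print(f"elapsed: {elapsed:.2f}s")  # roughly 0.1s rather than 0.5s
```

The catch noted above applies: any blocking call inside a coroutine (for example, `time.sleep` instead of `asyncio.sleep`) stalls every task on the event loop.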

Example: Efficient String Manipulation

String manipulation is a fundamental task in programming, and its efficiency significantly impacts application performance. Choosing the right methods and understanding the characteristics of strings (mutable vs. immutable) is crucial for writing optimized code. This section will explore efficient string manipulation techniques in Python, Java, and C++, comparing their performance and highlighting the impact of string mutability.

String Manipulation in Python

Python strings are immutable. This means that any operation that appears to modify a string actually creates a new string. While convenient for data integrity, it can lead to performance issues if not handled carefully. For instance, repeatedly concatenating strings using the ‘+’ operator creates many intermediate string objects, impacting memory usage and speed. (CPython can sometimes extend a string in place for `s += t`, but PEP 8 notes that this optimization is fragile and not present in every Python implementation.) A more efficient approach for multiple concatenations is to use the `join()` method. Consider the following example:

```python
# Inefficient string concatenation
long_string = ""
for i in range(1000):
    long_string += str(i)

# Efficient string concatenation using join()
long_string = "".join(str(i) for i in range(1000))
```

The `join()` method is significantly faster because it allocates memory for the final string only once. Other efficient techniques include using string formatting (f-strings or `str.format()`) for embedding variables into strings and leveraging built-in string methods like `startswith()`, `endswith()`, `find()`, and `replace()` for specific operations.

String Manipulation in Java

Java strings, like Python strings, are also immutable. Similar to Python, repeatedly concatenating strings using the ‘+’ operator is inefficient. The `StringBuilder` or `StringBuffer` classes provide mutable alternatives, significantly improving performance for multiple concatenations. `StringBuffer` is synchronized, making it suitable for multithreaded environments, while `StringBuilder` is faster in single-threaded contexts.

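```java
public class StringConcatenation {
    public static void main(String[] args) {
        // Inefficient: each += builds a new String and copies the old contents
        String longString = "";
        for (int i = 0; i < 1000; i++) {
            longString += i;
        }

        // Efficient: StringBuilder appends into one growable buffer
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < 1000; i++) {
            sb.append(i);
        }
        String longStringEfficient = sb.toString();

        System.out.println(longString.equals(longStringEfficient)); // true
    }
}
```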

Java also offers a rich set of built-in string methods for various manipulation tasks, which should be preferred over manual character-by-character processing whenever possible.

String Manipulation in C++

C++ gives you a choice of string representations. The `std::string` class is mutable and manages its own memory, allowing for in-place modifications. C-style strings (null-terminated character arrays) can also be modified when they live in a writable buffer, but string literals are read-only, and every buffer must be sized and managed by hand. Using `std::string` generally offers better performance and safety compared to C-style strings for most operations. Efficient techniques include using the `append()` method (or `+=`) for concatenations, calling `reserve()` when the final size is known in advance, and leveraging methods like `substr()`, `find()`, and `replace()`. Avoid manual memory management with C-style strings whenever possible to prevent potential memory leaks and buffer errors.

```cpp
#include <iostream>
#include <string>

int main() {
    std::string str = "Hello";
    str.append(" World!");  // appends in place, reusing spare capacity when available
    std::cout << str << std::endl;
    return 0;
}
```

Performance Comparison of String Methods

The actual performance differences depend on factors like string length, the specific operation, and the underlying hardware. However, we can illustrate general trends:

| Operation | Python | Java | C++ |
|---|---|---|---|
| Repeated concatenation with `+` in a loop | Slow (O(n^2) in general) | Slow (O(n^2)) | Slow (O(n^2) with `s = s + t` or repeated `strcat` on C-style strings) |
| Builder approach: `"".join()` (Python) / `StringBuilder.append()` (Java) / `std::string::append()` (C++) | Fast (O(n)) | Fast (O(n)) | Fast (O(n)) |
| Substring extraction (slicing / `substring()` / `substr()`) | Relatively fast | Relatively fast | Relatively fast |
| Replace (`replace()`) | Relatively fast | Relatively fast | Relatively fast |

Note: O(n) denotes linear time complexity, while O(n^2) denotes quadratic time complexity. The table reflects general performance characteristics and may vary depending on the specific implementation and hardware.

Impact of Immutable vs. Mutable Strings

Immutable strings, while offering benefits in terms of data integrity and thread safety, can lead to performance penalties due to the creation of new string objects for each modification. Mutable strings, on the other hand, allow for in-place modifications, reducing memory allocation and improving performance, especially for repeated concatenations or modifications. The choice between mutable and immutable strings depends on the specific application requirements and the trade-off between performance and data integrity.
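Python shows both sides of this trade-off. The sketch below contrasts an immutable `str`, whose methods return new objects, with a mutable `bytearray`, which changes in place, and uses `io.StringIO` as a growable text buffer for building output; the sample words are arbitrary.

```python
import io

# Immutable: str methods return new objects and leave the original alone.
greeting = "hello"
shouted = greeting.upper()
print(greeting, shouted)  # hello HELLO

# Mutable: bytearray is modified in place; the object identity is unchanged.
buffer = bytearray(b"hello")
original_id = id(buffer)
buffer[0] = ord("H")
buffer.extend(b" world")
print(buffer, id(buffer) == original_id)

# StringIO accumulates text in one buffer instead of creating a new str per step.
builder = io.StringIO()
for word in ["efficient", "string", "building"]:
    builder.write(word)
    builder.write(" ")
result = builder.getvalue().rstrip()
print(result)
```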

Outcome Summary

So, there you have it – your cheat sheet to writing efficient code. Remember, it’s not just about speed; it’s about creating code that's readable, maintainable, and scalable. By understanding the fundamentals of data structures, algorithms, and optimization techniques, you'll be well-equipped to tackle any coding challenge. Embrace the power of clean, efficient code, and watch your development skills soar to new heights. Now go forth and code like a ninja!