Building Scalable Systems with Microservices Architecture

Forget monolithic messes! Imagine a system adaptable enough to absorb growth spurts and user surges without a rewrite. That’s the promise of microservices. We’re diving deep into this architectural style, exploring how independent, nimble services work together to deliver scalability and resilience. Get ready to ditch the legacy headaches and embrace a design built to evolve.

This guide unravels the core principles of microservices, comparing them to their monolithic counterparts. We’ll walk you through designing scalable microservices for real-world scenarios, like an e-commerce platform, revealing strategies for handling massive traffic and data. From choosing the right technologies (think Spring Boot, Node.js, Kubernetes) to mastering data management and deployment, we’ll equip you with the knowledge to build systems that truly scale.

Introduction to Microservices Architecture

Imagine building a Lego castle. You could build it as one massive, interconnected structure – a monolith. Or, you could build it from smaller, independent modules – microservices. Each module, representing a specific function like a tower or a wall, can be built, modified, and even replaced without affecting the others. That’s the essence of microservices architecture. It’s a way of designing a software application as a collection of small, autonomous services, each running in its own process and communicating with the others over a network, usually through lightweight mechanisms like REST APIs.

Microservices architecture is built on several core principles. These principles ensure that the system remains flexible, scalable, and maintainable. Key amongst them are independent deployability, decentralized governance, and technology diversity. Each microservice is independently deployable, meaning changes to one service don’t necessitate a full system redeployment. Decentralized governance allows individual teams to own and manage their services, fostering agility. Finally, technology diversity enables teams to choose the best technology for each specific service, leading to optimized performance and development efficiency.

Benefits of Microservices for Scalable Systems

Adopting a microservices approach offers significant advantages in building scalable systems. The modular nature of microservices allows for independent scaling of individual components based on demand. If one part of the application experiences a surge in traffic, only that specific microservice needs to be scaled up, avoiding unnecessary resource consumption in other areas. This granular scalability translates to cost efficiency and improved resource utilization. Furthermore, the independent deployability of microservices reduces the risk of widespread outages. A failure in one service is less likely to cascade and bring down the entire system. Finally, faster development cycles are enabled through independent development and deployment, allowing for continuous integration and continuous delivery (CI/CD) practices.

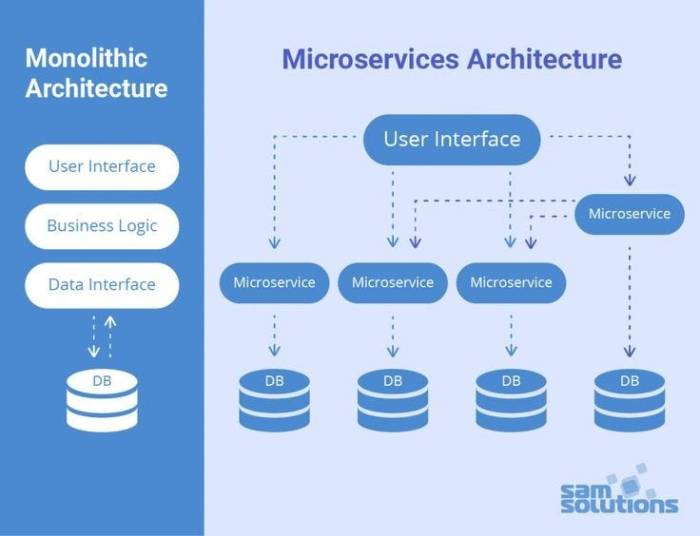

Microservices vs. Monolithic Architecture

The key difference between microservices and monolithic architectures lies in their structure and deployment. A monolithic application is a single, large application where all components are tightly coupled. This creates a complex, interdependent system that is difficult to scale, maintain, and update. In contrast, a microservices architecture comprises many smaller, independent services that communicate with each other through APIs. This modular design allows for independent scaling, easier maintenance, and faster development cycles. Consider a hypothetical e-commerce platform. A monolithic version would have all functionalities – user accounts, product catalog, shopping cart, payment processing – within a single codebase. A microservices version would separate each of these into individual services, each responsible for a specific task. Changes to the payment processing service, for instance, would not require redeployment of the entire platform.

Real-World Examples of Microservices

Numerous successful companies leverage microservices architecture to build highly scalable and robust systems. Netflix, for example, is renowned for its extensive use of microservices to manage its streaming platform. Their architecture allows them to handle massive traffic spikes during peak viewing times by scaling individual services as needed. Similarly, Amazon utilizes a microservices architecture to power its e-commerce platform, enabling the seamless handling of millions of transactions daily. These examples demonstrate the effectiveness of microservices in managing complex, high-traffic applications. The ability to independently deploy and scale individual components is critical to their success in handling the ever-increasing demands of their users.

Designing Scalable Microservices

Building a truly scalable e-commerce platform requires more than just throwing hardware at the problem. It demands a thoughtful, well-architected microservices approach. This section dives into the key design considerations for creating a robust and adaptable system capable of handling massive traffic and data volumes.

E-commerce Microservice Architecture Design

Let’s imagine designing an e-commerce platform using a microservices architecture. We can break down the functionality into several independent services, each responsible for a specific aspect of the platform. Key services might include: Catalog Service (managing product information), User Service (handling user accounts and profiles), Order Service (processing orders), Payment Service (integrating with payment gateways), Inventory Service (tracking stock levels), and Recommendation Service (suggesting relevant products). These services communicate with each other via APIs, allowing for flexibility and independent scaling. For instance, during peak shopping seasons, the Order Service can be scaled independently to handle the increased load without affecting other services.

Strategies for Handling High Traffic and Data Volume

Scaling a microservices architecture involves several strategies. Horizontal scaling, where you add more instances of a service to distribute the load, is crucial. Load balancing distributes incoming requests across these instances. Database scaling is equally important; consider using a distributed database like Cassandra or sharding your database across multiple servers. Caching frequently accessed data, using technologies like Redis or Memcached, dramatically reduces database load. Asynchronous processing, using message queues like Kafka or RabbitMQ, helps handle bursts of activity by decoupling services and allowing them to process requests at their own pace. For example, order confirmations can be sent asynchronously, preventing delays in order processing.
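The caching strategy above is often implemented as the cache-aside pattern: check the cache first, and only query the database on a miss. Here is a minimal sketch, assuming an in-process dictionary stands in for Redis or Memcached; `TTLCache`, `load_product_from_db`, and the 30-second expiry are hypothetical names and values chosen for illustration.

```python
import time
from typing import Callable, Dict, Tuple


class TTLCache:
    """A tiny in-process cache-aside helper (a stand-in for Redis or Memcached)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, object]] = {}

    def get_or_load(self, key: str, loader: Callable[[str], object]) -> object:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the database is not touched
        value = loader(key)  # cache miss or expired entry: go to the source of truth
        self._entries[key] = (now + self._ttl, value)
        return value


db_calls = []


def load_product_from_db(product_id: str) -> dict:
    # Hypothetical database lookup; it records each call so the effect is visible.
    db_calls.append(product_id)
    return {"id": product_id, "name": "Example product"}


cache = TTLCache(ttl_seconds=30)
first = cache.get_or_load("sku-1", load_product_from_db)
second = cache.get_or_load("sku-1", load_product_from_db)  # served from the cache
```

The second lookup never reaches the database. The trade-off is staleness: a cached entry can be up to one TTL out of date, so the expiry should match how fresh each kind of data needs to be.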

Best Practices for Independent Deployments and Fault Tolerance

Independent deployments are a cornerstone of microservices. Each service should have its own codebase, deployment pipeline, and lifecycle. This allows for rapid iteration and updates without affecting other parts of the system. Packaging services as Docker containers and orchestrating them with Kubernetes simplifies deployment and management. Fault tolerance is achieved through techniques like circuit breakers (preventing cascading failures), retries (handling temporary network issues), and health checks (monitoring service health). Consider implementing a service mesh like Istio to manage service-to-service communication, security, and observability. For instance, if the Payment Service fails, the Order Service can temporarily halt payment processing without bringing down the entire platform.
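To make the circuit breaker idea concrete, here is a minimal sketch of one possible implementation. It is not the API of any particular library or service mesh; the class name, the thresholds, and the injectable `clock` parameter are assumptions made for illustration.

```python
import time
from typing import Callable, Optional


class CircuitOpenError(Exception):
    """Raised when the breaker refuses to call the downstream service."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, func: Callable, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                # Open state: fail fast instead of piling load on a sick service.
                raise CircuitOpenError("downstream marked unhealthy; failing fast")
            # Timeout elapsed: let one trial call through (the "half-open" state).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open, or re-open after a failed trial
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

An Order Service could wrap its calls to the Payment Service in `breaker.call(...)` and treat `CircuitOpenError` as a signal to queue the payment for later, which is one way to keep a payment outage from spreading.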

Microservice Communication Patterns

Effective communication between microservices is essential for a well-functioning system. Several patterns are commonly employed:

| Communication Pattern | Description | Example | Pros/Cons |
|---|---|---|---|
| Synchronous (REST/gRPC) | Direct request-response communication. | Order Service requests user details from User Service. | Simple to implement, but can lead to blocking and cascading failures. |
| Asynchronous (Message Queues) | Communication via message brokers, decoupling services. | Order Service publishes an event to a queue when an order is placed. Inventory Service subscribes to this queue to update stock levels. | More complex to implement but provides better fault tolerance and scalability. |
| Event-Driven Architecture | Services communicate by publishing and subscribing to events. | Payment Service publishes a “payment successful” event. Order Service listens for this event to update the order status. | Highly scalable and resilient, but requires careful event management. |
| API Gateway | Centralized entry point for all client requests. | All client requests go through the API gateway, which routes them to the appropriate microservice. | Improves security and simplifies client-side interactions, but adds a network hop and a potential single point of failure. |
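The publish/subscribe rows of the table can be illustrated with a small in-memory event bus. This is only a sketch: a real broker such as Kafka or RabbitMQ delivers messages asynchronously and durably, whereas this stand-in calls handlers immediately. The topic name `order.placed` and the event fields are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class InMemoryEventBus:
    """Synchronous stand-in for a message broker, for illustration only."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # The publisher does not know who is listening; it only names the topic.
        for handler in list(self._subscribers[topic]):
            handler(event)


stock = {"sku-1": 5}
bus = InMemoryEventBus()


def reserve_stock(event: dict) -> None:
    # Inventory Service side: react to the event by updating its own data.
    stock[event["sku"]] -= event["quantity"]


bus.subscribe("order.placed", reserve_stock)

# Order Service side: announce what happened and move on.
bus.publish("order.placed", {"order_id": "o-100", "sku": "sku-1", "quantity": 2})
```

The Order Service never calls the Inventory Service directly, so a new subscriber (for example, a notification service) could be added without changing the publisher.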

Implementing Microservices

Building a microservices architecture isn’t just about drawing diagrams; it’s about choosing the right tools and technologies to bring your vision to life. This section dives into the practical side, exploring the popular choices and best practices for implementing and managing your microservices ecosystem. We’ll cover everything from the frameworks you’ll use to build individual services to the tools that keep them running smoothly.

Popular Technologies and Frameworks for Building Microservices

Selecting the right technology stack is crucial for the success of your microservices architecture. The choice often depends on factors like team expertise, project requirements, and scalability needs. Popular options offer diverse strengths and cater to different programming paradigms.

- Spring Boot (Java): A robust and mature framework offering a comprehensive ecosystem for building microservices in Java. Its convention-over-configuration approach simplifies development and deployment. Spring Boot excels in enterprise-level applications requiring high reliability and transaction management.

- Node.js (JavaScript): Known for its speed and scalability, Node.js is a popular choice for building microservices that handle high volumes of concurrent requests. Its asynchronous, non-blocking I/O model makes it efficient for real-time applications and APIs.

- Kubernetes: While not strictly a framework for building microservices, Kubernetes is the undisputed king of orchestrating them. It automates deployment, scaling, and management of containerized applications, ensuring high availability and efficient resource utilization. Think of it as the conductor of your microservices orchestra.

Message Brokers for Inter-Service Communication

Microservices rarely operate in isolation. Efficient communication between them is vital, and message brokers play a crucial role in facilitating this communication asynchronously and reliably. The choice of broker depends on factors like message volume, throughput requirements, and data persistence needs.

- Kafka: A high-throughput, distributed streaming platform designed for handling massive volumes of data. It’s ideal for applications requiring real-time data processing and event streaming. Think of large-scale data pipelines and real-time analytics.

- RabbitMQ: A versatile message broker supporting various messaging protocols. It’s well-suited for applications requiring robust message delivery guarantees and flexible routing capabilities. It’s a strong contender for applications needing reliable message delivery, even in complex scenarios.

Containerizing Microservices with Docker and Orchestrating with Kubernetes

Containerization with Docker packages each microservice and its dependencies into an isolated container, ensuring consistent execution across different environments. Kubernetes then takes over, automating the deployment, scaling, and management of these containers across a cluster of machines. This combination drastically simplifies deployment and scaling, making it easier to manage a large number of microservices.

Imagine a scenario where you need to scale a specific microservice during a peak demand period. With Docker and Kubernetes, you simply tell Kubernetes to spin up more containers of that specific service; it handles the rest automatically, ensuring smooth operation even under pressure.
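Kubernetes can also make this decision itself through the Horizontal Pod Autoscaler, whose documentation gives the core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The function below is a sketch of that arithmetic only; the real autoscaler also applies tolerances and stabilization behavior that are omitted here, and the default replica bounds are assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Core Horizontal Pod Autoscaler ratio, clamped to the configured bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Four pods averaging 90% CPU against a 60% target call for six pods.
scaled_up = desired_replicas(current_replicas=4, current_metric=90, target_metric=60)
```

The same ratio scales a service back down when the load drops, subject to the minimum replica count.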

Essential Tools for Monitoring and Logging in a Microservices Environment

Monitoring and logging are crucial for maintaining the health and performance of a microservices architecture. Effective monitoring provides insights into the overall system health, while detailed logging helps pinpoint and resolve issues quickly.

- Prometheus: A popular open-source monitoring system that scrapes metrics from your microservices and stores them as time series. It provides a query language (PromQL) and, together with Alertmanager, alerting capabilities.

- Grafana: A powerful visualization tool that integrates with Prometheus and other monitoring systems, allowing you to create custom dashboards and visualize your microservices’ performance.

- Elasticsearch, Logstash, and Kibana (ELK Stack): A widely used logging solution that collects, processes, and visualizes logs from your microservices. This combination provides comprehensive log analysis capabilities, crucial for debugging and troubleshooting.

Data Management in Microservices

The beauty of microservices lies in their independence, but this independence brings its own set of challenges, especially when it comes to managing data. Unlike monolithic applications with a single, centralized database, microservices often interact with multiple databases, leading to complexities in data consistency, transactions, and overall data management. Let’s dive into the strategies and approaches for effectively handling these complexities.

Managing data across a distributed system of microservices requires careful planning and a strategic approach. The key is to balance the autonomy of individual services with the need for overall data consistency and integrity. This involves choosing the right database technologies, implementing appropriate data synchronization techniques, and establishing clear data ownership and access control mechanisms.

Strategies for Maintaining Data Consistency and Transactions

Data consistency across multiple microservices isn’t a given; it requires proactive strategies. Sagas, two-phase commit, and event sourcing are popular approaches. A saga manages a distributed transaction as a series of local transactions, one per microservice; if a step fails, compensating actions undo the steps that already completed. Two-phase commit provides atomic commits across participants, but its blocking coordination can introduce performance bottlenecks. Event sourcing, on the other hand, records all changes as a sequence of events, providing a robust audit trail and fitting naturally with eventual consistency. The choice depends heavily on the specific requirements and trade-offs between consistency and performance. For example, an e-commerce system might use sagas for order processing, so that inventory updates and payment processing either both complete or are rolled back through compensation if one part fails.
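A saga can be sketched as a list of steps, each pairing an action with an action that undoes it. The runner below is a simplified, single-process illustration; in a real system each step would be a call or message to another service, and the step names and the `log` list are hypothetical.

```python
from typing import Callable, List, Tuple

# Each step is (name, action, compensation).
Step = Tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step]) -> bool:
    """Run steps in order; on failure, undo the completed ones in reverse order."""
    completed: List[Step] = []
    for step in steps:
        _name, action, _compensate = step
        try:
            action()
        except Exception:
            for _, _, undo in reversed(completed):
                undo()
            return False
        completed.append(step)
    return True


log: List[str] = []


def charge_payment() -> None:
    # Simulated failure in the Payment Service.
    raise RuntimeError("card declined")


order_saga: List[Step] = [
    ("reserve_inventory", lambda: log.append("reserved"), lambda: log.append("released")),
    ("charge_payment", charge_payment, lambda: log.append("refunded")),
]

succeeded = run_saga(order_saga)
```

Here the payment step fails, so the saga releases the reserved inventory and reports failure. The refund compensation is not run, because the charge never succeeded.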

Database Approaches for Microservices

The “one-size-fits-all” database approach often fails in a microservices architecture. Polyglot persistence—using different database technologies for different microservices—is a common and often effective solution. A service managing user profiles might benefit from a NoSQL database like MongoDB for its flexibility, while a service handling financial transactions might require the ACID properties of a relational database like PostgreSQL. Shared databases, while simpler to implement initially, can create tight coupling between services and hinder scalability and independent deployment. Choosing the right database for each microservice allows for optimal performance and scalability based on specific data needs. For instance, a social media platform might use Cassandra for handling massive user feeds, while employing a relational database for managing user accounts and relationships.

Data Synchronization Techniques

Efficient data synchronization is crucial for maintaining consistency across microservices. Several techniques exist, each with its strengths and weaknesses. Message queues (like Kafka or RabbitMQ) provide asynchronous, reliable data synchronization, allowing services to communicate without tight coupling. Database replication copies data at the storage layer: synchronous replication keeps replicas consistent at the cost of write latency, while asynchronous replication is faster but lets replicas lag, and either can be complex to manage. API-based synchronization, using REST or gRPC, is straightforward but can be less efficient for high-volume data updates. The choice depends on factors such as consistency requirements, data volume, and performance needs. A real-time chat application might use WebSockets for immediate data synchronization, while a batch processing system might leverage message queues for asynchronous updates.

Designing a Data Model for a Microservice-Based System

A well-defined data model is essential for managing data ownership and access control. Each microservice should own its data, minimizing dependencies and promoting independent development. Access control mechanisms, such as API keys, OAuth 2.0, or RBAC (Role-Based Access Control), should be implemented to secure data and prevent unauthorized access. Consider a system with user profiles, order management, and inventory tracking microservices. The user profile service owns user data, the order management service owns order data, and the inventory service owns inventory data. Each service exposes APIs for accessing its data, controlled by appropriate authentication and authorization mechanisms. This clear delineation of data ownership prevents conflicts and simplifies data management.
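Role-based access control reduces to a lookup from roles to permissions. The sketch below shows the idea, assuming a hard-coded mapping; the role names and the `resource:action` permission strings are hypothetical, and a production system would typically load them from a policy store or derive them from token claims.

```python
from typing import Dict, Iterable, Set

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "customer": {"orders:read"},
    "support": {"orders:read", "orders:update"},
    "inventory-service": {"inventory:read", "inventory:update"},
}


def is_allowed(roles: Iterable[str], permission: str) -> bool:
    """Grant access if any of the caller's roles carries the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


can_read = is_allowed(["customer"], "orders:read")
can_update = is_allowed(["customer"], "orders:update")
```

Unknown roles map to an empty permission set, so the check denies by default.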

Testing and Deployment of Microservices

Building and deploying microservices isn’t just about writing code; it’s a whole orchestration of testing and deployment strategies that ensure your system remains robust, scalable, and, dare we say, enjoyable to maintain. Getting this right is crucial for avoiding those late-night debugging sessions fueled by copious amounts of caffeine.

Testing Microservices

Thorough testing is paramount in a microservices architecture. The decentralized nature of the system means individual services need rigorous testing, but so does the interaction between them. Failing to address both aspects can lead to unexpected failures in production. We’re talking about the kind of failures that make your heart sink faster than a lead balloon.

Unit Testing

Unit tests focus on verifying the functionality of individual components within a microservice. Think of it as checking each gear in your complex clockwork mechanism – each must function correctly for the whole to work. Effective unit tests use mocking to isolate the component under test from external dependencies, ensuring accurate assessment of its functionality independent of the wider system. This is particularly important in a microservices environment where dependencies are numerous and potentially complex.
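As an illustration of mocking, the sketch below tests a hypothetical `OrderService` without a real inventory service. It uses `unittest.mock.Mock` from the Python standard library; the class and method names (`is_available`, `reserve`) are assumptions made for the example.

```python
import unittest
from unittest.mock import Mock


class OrderService:
    """Hypothetical service under test; it depends on an inventory client."""

    def __init__(self, inventory_client):
        self._inventory = inventory_client

    def place_order(self, sku: str, quantity: int) -> str:
        if not self._inventory.is_available(sku, quantity):
            return "rejected"
        self._inventory.reserve(sku, quantity)
        return "accepted"


class PlaceOrderTest(unittest.TestCase):
    def test_rejects_when_out_of_stock(self):
        inventory = Mock()
        inventory.is_available.return_value = False
        self.assertEqual(OrderService(inventory).place_order("sku-1", 2), "rejected")
        inventory.reserve.assert_not_called()

    def test_reserves_when_in_stock(self):
        inventory = Mock()
        inventory.is_available.return_value = True
        self.assertEqual(OrderService(inventory).place_order("sku-1", 2), "accepted")
        inventory.reserve.assert_called_once_with("sku-1", 2)
```

Saved in a test module, these run with `python -m unittest`. The mock verifies both the return value and the interaction with the dependency, and no network call is made.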

Integration Testing

Once unit tests pass, integration testing verifies the interaction between multiple microservices. This is where we see how the individual gears mesh together. Common approaches include contract testing (verifying that services communicate according to agreed-upon specifications) and end-to-end testing (simulating real-world scenarios to assess overall system behavior). Imagine testing a whole workflow, like ordering a product – from initiating the order to delivery confirmation.

Deployment Strategies

Deploying microservices requires careful planning and execution to minimize disruption and ensure a smooth transition. The right strategy depends on factors such as service criticality and risk tolerance.

Blue-Green Deployment

In a blue-green deployment, two identical production environments exist: “blue” (currently live) and “green” (idle). New code is deployed to the green environment and thoroughly tested, and then traffic is switched from blue to green. If issues arise, traffic can be quickly switched back to the blue environment with minimal downtime. It’s like having a backup singer ready to step in if the main act falters.

Canary Deployment

A canary deployment involves gradually rolling out new code to a small subset of users. This allows for real-world testing in a production environment before a full rollout. If problems occur, the deployment can be quickly halted, limiting the impact of any issues. Think of it like releasing a new song to a small group of fans before launching it to the world. Their feedback can help identify any unforeseen problems.
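One common way to pick the "small subset of users" is to hash a stable identifier into a bucket, so each user consistently sees the same version. The sketch below assumes routing by user ID; the function name and the 100-bucket scheme are illustrative choices, and in practice this decision is often made by a load balancer or service mesh instead of application code.

```python
import hashlib


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Deterministically assign a user to the canary based on a hash bucket."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return bucket < canary_percent
```

Because the bucket depends only on the user ID, raising the percentage from 10 to 50 keeps every existing canary user on the new version and only adds more users to it.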

Configuration and Secrets Management

Managing configuration and secrets (like API keys and database passwords) across numerous microservices requires a robust and secure approach. Centralized configuration management systems and dedicated secret management tools are essential to maintain consistency and security. Imagine a central vault securely storing all sensitive information, accessible only to authorized microservices.
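Whatever tool stores the secrets, a service usually receives them at startup, often as environment variables injected by the platform. The sketch below assumes that approach; the variable names (`DATABASE_URL`, `PAYMENT_API_KEY`, `REQUEST_TIMEOUT_SECONDS`) are hypothetical.

```python
import os
from dataclasses import dataclass, field
from typing import Mapping, Optional


@dataclass(frozen=True)
class ServiceConfig:
    database_url: str
    payment_api_key: str = field(repr=False)  # keep the secret out of logs and reprs
    request_timeout_seconds: float = 5.0


def load_config(env: Optional[Mapping[str, str]] = None) -> ServiceConfig:
    """Read settings from the environment and fail fast if any are missing."""
    env = os.environ if env is None else env
    missing = [name for name in ("DATABASE_URL", "PAYMENT_API_KEY") if not env.get(name)]
    if missing:
        raise RuntimeError("missing required settings: " + ", ".join(missing))
    return ServiceConfig(
        database_url=env["DATABASE_URL"],
        payment_api_key=env["PAYMENT_API_KEY"],
        request_timeout_seconds=float(env.get("REQUEST_TIMEOUT_SECONDS", "5")),
    )
```

Failing at startup when a setting is missing surfaces configuration mistakes during deployment, before the service starts handling requests.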

Deploying to a Cloud Platform: A Checklist

Deploying a microservice to a cloud platform involves a series of steps to ensure a successful and efficient process. Here’s a simplified checklist:

- Build and test the microservice.

- Create a cloud infrastructure (e.g., virtual machines, containers).

- Configure networking and security.

- Deploy the microservice to the cloud environment.

- Monitor the microservice for performance and stability.

- Implement logging and tracing for troubleshooting.

- Scale the microservice based on demand.

This checklist provides a framework; the specific steps may vary depending on the chosen cloud platform and the complexity of the microservice.

Security Considerations in Microservices

Microservices architecture, while offering incredible scalability and flexibility, introduces a complex security landscape. The distributed nature of the system, with many independent services communicating constantly, creates numerous potential vulnerabilities that require careful consideration and proactive mitigation strategies. Failing to address these security concerns can lead to significant breaches and compromise the integrity of your entire application.

Common Security Challenges in Microservices Architectures

The decentralized nature of microservices presents unique security challenges compared to monolithic applications. These challenges stem from the larger attack surface created by many independent, network-exposed services and from the complexity of managing security across this distributed environment. A breach in one service could potentially compromise the entire system if security isn’t properly handled across the board. For example, a vulnerability in a shared authentication service could give attackers access to sensitive data in every microservice that trusts it. This necessitates a holistic security approach that considers every component and their interactions.

Securing Inter-Service Communication

Protecting communication between microservices is crucial. Traditional methods like firewalls and intrusion detection systems are still important, but they need to be augmented with strategies specifically designed for microservices. Implementing mutual Transport Layer Security (mTLS) is a common and effective method. mTLS requires each service to authenticate itself to other services using digital certificates, ensuring only authorized services can communicate. Additionally, using API gateways to manage and secure external access to your microservices can help centralize security policies and control access points. Careful consideration should also be given to the encryption methods used for data in transit, choosing robust and widely-supported algorithms. For instance, TLS 1.3 is generally recommended over older versions due to its enhanced security features.
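On the server side, mTLS comes down to two settings: require a client certificate and refuse old protocol versions. The sketch below uses Python's standard `ssl` module; the function name is hypothetical, and the file paths are optional only so the example runs without certificate files. A real service would always supply them.

```python
import ssl
from typing import Optional


def build_mtls_server_context(cert_file: Optional[str] = None,
                              key_file: Optional[str] = None,
                              ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Server-side TLS context that demands a client certificate (mutual TLS)."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_3  # refuse older protocol versions
    context.verify_mode = ssl.CERT_REQUIRED           # reject clients without a valid cert
    if cert_file and key_file:
        # This service's own identity, presented to callers.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # The certificate authority trusted to sign other services' certificates.
        context.load_verify_locations(cafile=ca_file)
    return context
```

In many deployments a service mesh sidecar handles this handshake instead, so application code never touches certificates directly.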

Implementing Authentication and Authorization in a Microservices Environment

Authentication verifies the identity of a user or service, while authorization determines what actions they’re permitted to perform. In a microservices architecture, a centralized authentication service is often employed, using technologies like OAuth 2.0 or OpenID Connect. This allows services to rely on a single source of truth for user identity, simplifying authentication management. Authorization, however, can be implemented at various levels. A fine-grained approach, often using an API gateway or a dedicated authorization service, allows for granular control over access to specific resources within each microservice. For example, a user might be authorized to read data but not modify it, reflecting the principle of least privilege. This requires careful design and implementation of access control mechanisms, potentially involving role-based access control (RBAC) or attribute-based access control (ABAC).

Secure Coding Practices for Microservices

Secure coding is paramount in mitigating vulnerabilities. This includes using parameterized queries to prevent SQL injection attacks, input validation and output encoding to prevent cross-site scripting (XSS) attacks, and proper error handling to avoid exposing sensitive information. Regular security audits and penetration testing are essential to identify and address potential weaknesses. Adopting a secure development lifecycle (SDLC) that integrates security practices into every stage of development is crucial. This involves incorporating security reviews, static and dynamic code analysis, and security testing throughout the development process, rather than as an afterthought. Following established security best practices and using secure libraries and frameworks will minimize the risk of introducing vulnerabilities. For instance, regularly updating dependencies to patch known vulnerabilities is vital in maintaining a secure microservices environment.
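The difference a parameterized query makes is easiest to see with a classic injection string. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `users` table and the sample rows are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)")
conn.executemany(
    "INSERT INTO users (email) VALUES (?)",
    [("ada@example.com",), ("bob@example.com",)],
)


def find_user_by_email(connection: sqlite3.Connection, email: str) -> list:
    # The ? placeholder sends the value separately from the SQL text,
    # so it is always treated as data and never parsed as SQL.
    cursor = connection.execute("SELECT id, email FROM users WHERE email = ?", (email,))
    return cursor.fetchall()


# If this string were concatenated into the query, it would match every row.
malicious = "' OR '1'='1"
rows_for_attack = find_user_by_email(conn, malicious)
rows_for_ada = find_user_by_email(conn, "ada@example.com")
```

With the placeholder, the malicious string is compared literally against the `email` column and matches nothing.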

Monitoring and Observability in Microservices

Building a robust and scalable microservices architecture isn’t just about designing independent services; it’s equally crucial to ensure you can effectively monitor and understand their behavior. Without proper monitoring, troubleshooting issues, identifying performance bottlenecks, and predicting failures becomes a monumental task, quickly transforming your elegant system into a chaotic mess. Think of it like this: you wouldn’t build a skyscraper without blueprints or a way to check its structural integrity, right? Monitoring and observability are the blueprints and structural integrity checks for your microservices ecosystem.

Effective monitoring and logging are paramount in a microservices architecture due to the distributed nature of the system. The sheer number of independent services, each with its own potential points of failure, necessitates a sophisticated approach to understanding the overall health and performance. Without it, pinpointing the source of a problem can be akin to finding a needle in a digital haystack.

Key Metrics for Microservice Monitoring

Monitoring microservices requires tracking specific metrics to provide a holistic view of their performance and health. These metrics should cover various aspects, providing a comprehensive understanding of the system’s behavior. Ignoring crucial metrics can lead to unexpected outages and performance degradation.

- Request Latency: The time it takes for a request to be processed and a response to be returned. High latency indicates potential bottlenecks or performance issues.

- Error Rates: The percentage of requests that result in errors. A spike in error rates suggests problems within a specific service or across the system.

- Throughput: The number of requests processed per unit of time. Low throughput can indicate capacity constraints.

- Resource Utilization (CPU, Memory, Disk I/O): Monitoring resource consumption helps identify services that are consuming excessive resources or nearing their limits. This can prevent resource exhaustion and subsequent service failures.

- Queue Lengths: For asynchronous communication patterns, monitoring queue lengths helps identify potential bottlenecks and ensure message processing isn’t backing up.
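Several of the metrics above can be derived from a plain list of request records. The sketch below assumes each record is a `(latency_ms, status_code)` pair collected over a fixed window, counts 5xx responses as errors, and uses the nearest-rank method for the percentile. In practice a monitoring system computes these, so the function names here are illustrative.

```python
import math
from typing import Dict, List, Sequence, Tuple


def percentile(values: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(requests: List[Tuple[float, int]], window_seconds: float) -> Dict[str, float]:
    if not requests:
        raise ValueError("requests must not be empty")
    latencies = [latency for latency, _ in requests]
    errors = sum(1 for _, status in requests if status >= 500)
    return {
        "p95_latency_ms": percentile(latencies, 95),
        "error_rate": errors / len(requests),
        "throughput_rps": len(requests) / window_seconds,
    }
```

Percentiles are usually more informative than averages for latency, because a few very slow requests can hide behind a healthy-looking mean.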

Centralized Logging and Tracing

Implementing centralized logging and tracing is vital for gaining a unified view of events across all microservices. This allows for easier correlation of events, identification of root causes, and improved debugging capabilities. Think of it as having a single, comprehensive logbook for your entire system, rather than a collection of individual service logs.

A robust centralized logging system aggregates logs from all microservices into a single location, often leveraging tools like Elasticsearch, Logstash, and Kibana (the ELK stack), the EFK variant that swaps Logstash for Fluentd, or similar technologies. This enables searching, filtering, and analyzing logs across the entire system to identify patterns and pinpoint problematic areas.

Distributed tracing, on the other hand, allows you to track requests as they propagate through multiple microservices. Tools like Jaeger or Zipkin provide detailed visualizations of request flows, highlighting latency issues and bottlenecks across service boundaries. This context is essential for debugging complex issues spanning multiple services.

Comprehensive Monitoring Dashboard

A comprehensive monitoring dashboard provides a centralized view of the entire microservices system’s health and performance. Imagine a dashboard divided into sections, each representing a critical aspect of the system.

The first section would display an overview of the system’s overall health, using color-coded indicators (green for healthy, yellow for warning, red for critical) to quickly identify any major issues. Next, individual service health would be presented, each with its key metrics (latency, error rate, resource utilization) displayed graphically. A section dedicated to resource utilization across the entire system would show CPU, memory, and network usage. Another section would display real-time log entries and alerts, providing immediate insights into ongoing events. Finally, a section dedicated to distributed tracing would visualize request flows, allowing quick identification of bottlenecks and performance issues. The dashboard would be highly customizable, allowing users to filter and focus on specific services or metrics based on their needs. This centralized view provides a single pane of glass for monitoring the entire microservices ecosystem, facilitating proactive issue resolution and improved system reliability.

Concluding Remarks

Building truly scalable systems isn’t about throwing more servers at the problem; it’s about smart architecture. Microservices offer that smart solution, providing the flexibility and resilience needed to thrive in today’s dynamic digital landscape. By understanding the design principles, choosing the right tools, and implementing robust monitoring, you can create systems that not only survive but flourish under pressure. So ditch the monolithic mindset and embrace the power of microservices – your future self (and your users) will thank you.