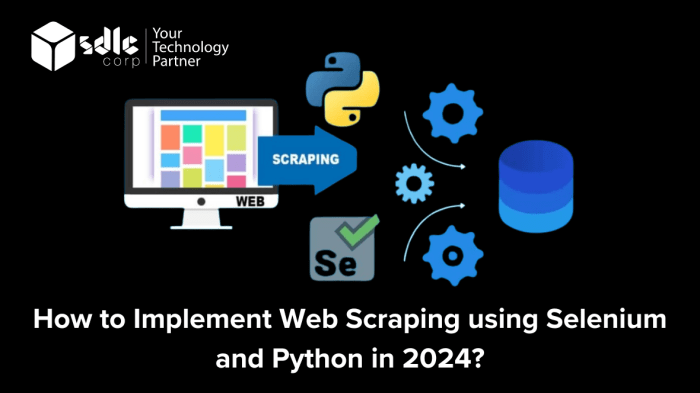

How do you implement web scraping with Python? Think of it as digital archaeology: unearthing hidden treasures from the vast expanse of the internet. This guide dives deep into web scraping, showing you how to use Python to extract valuable data from websites. We’ll cover everything from setting up your environment to handling tricky dynamic websites and cleaning your data for analysis. Get ready to unlock the power of information!

We’ll walk you through the essential Python libraries like `requests` and `Beautiful Soup`, showing you how to make HTTP requests, parse HTML, and even tackle those pesky JavaScript-heavy sites using Selenium. We’ll also delve into ethical considerations and best practices, ensuring you scrape responsibly. By the end, you’ll be equipped to extract data from almost any website, transforming raw web content into actionable insights.

Introduction to Web Scraping with Python


Web scraping, in its simplest form, is the automated process of extracting data from websites. Think of it as a digital scavenger hunt, but instead of clues, you’re using code to find and collect information. This information can range from product prices and reviews to news articles and research data – essentially anything that’s publicly available on a website. Python, with its rich ecosystem of libraries, makes this process surprisingly straightforward, even for beginners.

Fundamental Concepts of Web Scraping

Web scraping involves several key steps. First, you identify the target website and the specific data you want to extract. Then, you use a library like `requests` to fetch the website’s HTML content. This raw HTML is a structured document containing the page’s text and elements. Next, you use a parsing library like `Beautiful Soup` to navigate this HTML and select the specific data points you’re interested in. Finally, you process and store the extracted data in a structured format, such as a CSV file or a database. Throughout, you should respect the website’s robots.txt file and the ethical considerations we discuss next.

Ethical Considerations and Legal Implications of Web Scraping

Web scraping isn’t a free-for-all. Respecting a website’s terms of service is crucial: many websites explicitly prohibit scraping, and violating those terms can lead to legal action. Overloading a website with requests can also degrade its performance for other users, which is unethical and potentially illegal. Always check the website’s `robots.txt` file (located at the root of the domain, e.g. `website.com/robots.txt`), which outlines which parts of the site automated clients should not access. Overly aggressive scraping can also get your IP address blocked. Respectful scraping means pacing your requests, adhering to rate limits, and avoiding unnecessary strain on the target website’s servers and users.
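Python’s standard library can read `robots.txt` for you. The sketch below uses `urllib.robotparser`; the robots.txt content, the `my-scraper` user agent, and the 1-second fallback delay are assumptions for illustration. Against a real site you would call `set_url()` and `read()` instead of `parse()`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. For a real site, use instead:
#   parser.set_url("https://example.com/robots.txt"); parser.read()
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "my-scraper"  # hypothetical name for your scraper

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def allowed(url: str) -> bool:
    """True if robots.txt permits USER_AGENT to fetch this URL."""
    return parser.can_fetch(USER_AGENT, url)

def polite_delay(default: float = 1.0) -> float:
    """Seconds to wait between requests: the site's Crawl-delay if set, else a default."""
    delay = parser.crawl_delay(USER_AGENT)
    return float(delay) if delay is not None else default

for url in ["https://example.com/products", "https://example.com/private/data"]:
    print(url, "->", "allowed" if allowed(url) else "disallowed")
print("Wait", polite_delay(), "seconds between requests")
```

In a scraping loop, you would skip disallowed URLs and call `time.sleep(polite_delay())` between requests.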

Popular Python Libraries for Web Scraping

Python offers several powerful libraries that simplify the web scraping process. Choosing the right library depends on the complexity of your project and your specific needs. Below is a comparison of three popular choices:

| Library Name | Primary Function | Advantages | Disadvantages |
|---|---|---|---|
| requests | Fetching website content (HTML) | Simple and easy to use; excellent for basic tasks. | Requires additional libraries for parsing HTML; not suitable for complex scraping tasks. |
| Beautiful Soup | Parsing HTML and XML | Powerful parsing capabilities; flexible and easy to learn; supports various parsers. | Can be slower than Scrapy for large-scale projects. |
| Scrapy | Building web scrapers | Fast and efficient; built-in features for handling requests, parsing, and data storage; highly scalable. | Steeper learning curve compared to requests and Beautiful Soup. |

Setting up the Development Environment


Web scraping requires a solid foundation. Before diving into the exciting world of data extraction, you need to set up your development environment. This involves installing Python, a powerful programming language perfect for this task, and a few essential libraries that simplify the scraping process. We’ll also create a project and set up a virtual environment for organized and efficient coding.

Setting up your environment might seem daunting, but with a clear, step-by-step guide, it becomes straightforward. Think of it as building the scaffolding for your web scraping skyscraper – a necessary step before constructing the impressive structure itself.

Python Installation

Installing Python is the first crucial step. Download the latest version of Python from the official Python website (python.org), choosing the installer for your operating system (Windows, macOS, or Linux). When installing on Windows, make sure to select the option to add Python to your system’s PATH environment variable. This allows you to run Python from your command line or terminal without specifying its full path. After installation, verify it by opening your terminal or command prompt and typing `python --version` (on macOS and Linux the command is often `python3 --version`). This should display the installed Python version number. If it doesn’t, you might need to adjust your system’s PATH settings or reinstall Python.

Installing Necessary Libraries

Once Python is installed, we need to install the libraries that make web scraping easier. The two most common are `requests`, which handles fetching web pages, and Beautiful Soup 4 (package name `beautifulsoup4`), which parses HTML and XML data. You can install both using `pip`, Python’s package installer. Open your terminal or command prompt and run this command:

```bash
pip install requests beautifulsoup4
```

This command downloads and installs both libraries. If you encounter errors, ensure that `pip` is correctly configured and that you have an active internet connection.

Creating a New Python Project

Now, let’s create a dedicated folder for your web scraping project. This keeps things organized and prevents conflicts with other projects. Choose a descriptive name (e.g., “web_scraper”), create the folder (for example with `mkdir web_scraper`), and navigate into it using your terminal:

```bash
cd /path/to/your/web_scraper
```

Replace `/path/to/your/web_scraper` with the actual path to your project folder. Inside this folder, you’ll create your Python script files.

Setting up a Virtual Environment

Virtual environments are crucial for managing project dependencies. They create isolated spaces for each project, preventing conflicts between different project requirements. To create a virtual environment, use the `venv` module (available in Python 3.3 and later):

```bash
python3 -m venv .venv
```

This command creates a virtual environment named “.venv” in your project directory. To activate the environment, use the following commands (the exact command might vary slightly depending on your operating system and shell):

```
# On Windows
.venv\Scripts\activate

# On macOS/Linux
source .venv/bin/activate
```

Once activated, your terminal prompt will typically change to indicate that the virtual environment is active. Now, any packages you install using `pip` will be installed only within this environment, keeping your global Python installation clean and organized. If you installed `requests` and `beautifulsoup4` before creating the environment, run the `pip install` command again after activating it so the libraries are available inside the environment. Remember to deactivate the environment when you’re finished working on the project using the command `deactivate`.

Making HTTP Requests with `requests`

So, you’ve got your Python environment all set up and you’re ready to dive into the exciting world of web scraping. But before you can start extracting data, you need to learn how to fetch web pages. This is where the `requests` library comes in – your trusty sidekick for making HTTP requests. Think of it as the courier delivering web pages right to your Python program.

The `requests` library simplifies the process of interacting with web servers, allowing you to retrieve data from websites using various HTTP methods. We’ll focus on the two most common: GET and POST requests. Understanding how to use these effectively is fundamental to successful web scraping.

GET Requests

GET requests are used to retrieve data from a specified resource. Imagine it as asking a server, “Hey, can I please have this page?”. The server responds with the requested data, if available. Here’s how you’d make a GET request using `requests` in Python:

```python
import requests

# A timeout stops the script from hanging forever on an unresponsive server
response = requests.get("https://www.example.com", timeout=10)

if response.status_code == 200:
    print("Request successful!")
    print(response.text)  # Access the HTML content
else:
    print(f"Request failed with status code: {response.status_code}")
```

This code snippet first imports the `requests` library. Then, it makes a GET request to `https://www.example.com`. The `response` object contains the server’s response, including the HTTP status code and the webpage’s content. We check the status code; 200 indicates success. If successful, we print the HTML content; otherwise, we print the error status code.

POST Requests

POST requests are used to send data to a server, often to create or update resources. Think of it as sending a form submission. They are less common in simple web scraping but become crucial when interacting with websites that require data submission. Here’s an example:

```python
import requests

data = {"key1": "value1", "key2": "value2"}

# data= sends the dictionary as form fields, like a submitted HTML form
response = requests.post("https://httpbin.org/post", data=data, timeout=10)

if response.status_code == 200:
    print("Request successful!")
    print(response.json())  # Access the JSON response
else:
    print(f"Request failed with status code: {response.status_code}")
```

This example sends a POST request to `https://httpbin.org/post` with a dictionary containing key-value pairs. The `httpbin.org` website is useful for testing HTTP requests because it echoes back the data you send. The response is often in JSON format, which we access using `response.json()`.

Handling HTTP Status Codes

HTTP status codes are crucial for understanding the outcome of your requests. A 200 OK indicates success, but other codes signify different situations. For instance, a 404 Not Found means the resource doesn’t exist, and a 500 Internal Server Error indicates a problem on the server’s side. Properly handling these codes is vital for robust scraping. Ignoring errors can lead to unexpected crashes or inaccurate data. Always check the `response.status_code` after each request.
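The sketch below shows one way to turn status codes into decisions. The policy itself (retry on 429 and 5xx, skip 404, stop on 401/403) is an assumption to adapt to your project, not a standard. With `requests`, you can also call `response.raise_for_status()`, which raises `requests.HTTPError` for any 4xx or 5xx response.

```python
from http import HTTPStatus

def classify_status(code: int) -> str:
    """Map an HTTP status code to a scraping action (a simplified, assumed policy)."""
    if 200 <= code < 300:
        return "parse"  # success: process the body
    if code == HTTPStatus.TOO_MANY_REQUESTS or 500 <= code < 600:
        return "retry"  # rate limited or server-side error: back off and try later
    if code == HTTPStatus.NOT_FOUND:
        return "skip"   # page doesn't exist: log it and move on
    if code in (HTTPStatus.UNAUTHORIZED, HTTPStatus.FORBIDDEN):
        return "stop"   # access denied: don't keep hammering the site
    return "log"        # anything else: record it for manual review

for code in (200, 404, 429, 500, 403):
    print(code, HTTPStatus(code).phrase, "->", classify_status(code))
```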

Incorporating Headers and Parameters

Adding headers and parameters to your requests provides more control and allows you to mimic a web browser’s behavior more closely. Headers contain metadata about the request, such as the user agent (identifying your browser), while parameters are used to pass additional data within the URL.

For example, to specify a custom user agent:

```python
import requests

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3"
}

response = requests.get("https://www.example.com", headers=headers, timeout=10)
```

This adds a `User-Agent` header, making it appear as if a specific Chrome browser is making the request. By default, `requests` identifies itself as `python-requests/<version>`, which some websites block outright, so setting a browser-like header is often necessary to avoid being blocked. Adding parameters is similarly straightforward:

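```python
import requests

params = {"param1": "value1", "param2": "value2"}

# requests URL-encodes the dictionary and appends it as ?param1=value1&param2=value2
response = requests.get("https://www.example.com", params=params, timeout=10)
```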

This appends parameters to the URL, modifying the request. Many websites use parameters to filter or sort data. Understanding how to use them effectively is crucial for targeted scraping.
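To see exactly what `requests` sends, you can build a `Request` and call `.prepare()` without touching the network. In this minimal sketch, the `/search` URL and its parameters are hypothetical; it shows the encoded URL, including how a list value becomes a repeated key and a space becomes `+`.

```python
import requests

params = {"param1": "value1", "param2": "value2"}

# Build the request without sending it, so we can inspect the final URL
prepared = requests.Request("GET", "https://www.example.com", params=params).prepare()
print(prepared.url)

# A list value becomes a repeated key; spaces are form-encoded as "+"
prepared_multi = requests.Request(
    "GET",
    "https://www.example.com/search",
    params={"q": "web scraping", "tag": ["python", "bs4"]},
).prepare()
print(prepared_multi.url)
```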

Parsing HTML with `Beautiful Soup`

So, you’ve made your HTTP requests and successfully fetched the HTML content of a webpage. Now what? This is where Beautiful Soup steps in, acting as your trusty HTML parser, allowing you to navigate and extract the juicy data you need. Think of it as a highly skilled librarian, expertly organizing and retrieving information from a chaotic pile of books (or in this case, HTML).

Beautiful Soup is a Python library that makes parsing HTML and XML incredibly straightforward. It offers a simple and intuitive API to traverse the parsed HTML tree, allowing you to easily find specific elements and extract the data you’re looking for. This makes it an essential tool for any web scraping project.

CSS Selectors for Element Selection

CSS selectors provide a powerful and concise way to target specific HTML elements within a document. Beautiful Soup supports this familiar syntax through its `select()` and `select_one()` methods, allowing you to select elements based on their tag names, classes, IDs, and attributes. For example, `soup.select(".product-title")` selects all elements with the class “product-title,” while `soup.select("#main-content")` selects the element with the ID “main-content.” The flexibility of CSS selectors makes it easy to pinpoint even deeply nested elements within complex HTML structures. You can combine selectors to refine your selection further: `soup.select("div.product-item h3")` selects all `<h3>` tags nested anywhere inside a `<div>` with the class “product-item” (use `div.product-item > h3` to match only direct children).
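To make the selector semantics concrete, here is a small sketch run against a made-up HTML fragment (the markup and class names are hypothetical). It contrasts the descendant combinator (a space) with the child combinator (`>`), a distinction that matters when the structure is nested.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML fragment used only for this demonstration
html = """
<div id="main-content">
  <div class="product-item"><h3 class="product-title">Awesome Widget</h3></div>
  <div class="product-item"><section><h3 class="product-title">Super Gadget</h3></section></div>
</div>
<h3>Related articles</h3>
"""

soup = BeautifulSoup(html, "html.parser")

# Class selector: every element with class="product-title"
titles = [el.get_text(strip=True) for el in soup.select(".product-title")]

# ID selector: select_one returns the first match (or None)
main = soup.select_one("#main-content")

# Descendant combinator: <h3> anywhere inside div.product-item, even nested deeper
nested = [h3.get_text(strip=True) for h3 in soup.select("div.product-item h3")]

# Child combinator (>): only <h3> elements that are direct children of div.product-item
direct = [h3.get_text(strip=True) for h3 in soup.select("div.product-item > h3")]

print(titles)
print(main["id"])
print(nested)
print(direct)
```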

XPath for Element Selection

XPath offers another powerful method for navigating and selecting elements in an HTML document. Unlike CSS selectors, XPath uses a path-like syntax to traverse the document tree, making it particularly useful for complex or deeply nested structures. Beautiful Soup itself does not support XPath, so you need a library such as `lxml`. For instance, `tree.xpath('//div[@id="main-content"]/p')` on an `lxml` tree selects all `<p>` tags that are direct children of a `<div>` with the id “main-content”. While CSS selectors are often more concise for simple tasks, XPath is more expressive for intricate selections: it can, for example, move up to parent elements or filter on an element’s text, which helps when dealing with less predictable HTML structures. The choice between CSS selectors and XPath often depends on the complexity of the HTML and personal preference.
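Since Beautiful Soup has no XPath support, a common choice is `lxml`, installed with `pip install lxml`. This minimal sketch runs on a made-up HTML fragment and contrasts `/` (direct children) with `//` (any depth), then shows a text-based filter that standard CSS selectors cannot express.

```python
from lxml import html as lxml_html

# Hypothetical HTML fragment for illustration
page = """
<html><body>
  <div id="main-content">
    <p>First paragraph</p>
    <p>Second paragraph</p>
    <div><p>Nested paragraph</p></div>
  </div>
  <p>Footer text</p>
</body></html>
"""

tree = lxml_html.fromstring(page)

# Direct <p> children of the div whose id is "main-content"
direct_ps = tree.xpath('//div[@id="main-content"]/p/text()')

# Double slash: <p> descendants at any depth inside that div
all_ps = tree.xpath('//div[@id="main-content"]//p/text()')

# Filter on text content
second = tree.xpath('//p[contains(text(), "Second")]/text()')

print(direct_ps)
print(all_ps)
print(second)
```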

Extracting Data from HTML Elements

Once you’ve selected the relevant elements, extracting the data is equally straightforward. Beautiful Soup lets you extract text content with the `.text` attribute (or `.get_text(strip=True)` to trim surrounding whitespace), read attributes with the `.get()` method, and navigate the element tree with methods like `.find()` and `.find_all()` and attributes like `.children`. For instance, `element.text` gives you the text content of the element, while `element.get('href')` retrieves the value of the ‘href’ attribute.

Understanding how to effectively use these methods is crucial for efficient data extraction.

- `.find(tag, attributes)`: Finds the first matching tag with the specified attributes (returns `None` if nothing matches).
- `.find_all(tag, attributes)`: Finds all matching tags with the specified attributes. Returns a list.
- `.select(css_selector)`: Finds elements using CSS selectors. Returns a list.
- `.text`: Extracts the text content of an element.
- `.get(attribute_name)`: Extracts the value of a specified attribute (returns `None` if the attribute is missing).
- `.attrs`: Returns a dictionary containing all attributes of an element.
- `.parent`: Returns the parent element.
- `.children`: Returns an iterator over the element’s direct children.
- `.descendants`: Returns a generator yielding all descendants.
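The following sketch exercises most of these methods on a tiny, hypothetical navigation list. Two details often surprise newcomers: `class` comes back as a list because HTML allows several classes per element, and `.children` includes the whitespace strings between tags, so the sketch keeps only tags.

```python
from bs4 import BeautifulSoup

# Hypothetical markup for illustration
html = """
<ul id="links">
  <li><a href="/docs" class="nav">Docs</a></li>
  <li><a href="/blog" class="nav" title="Our blog">Blog</a></li>
</ul>
"""
soup = BeautifulSoup(html, "html.parser")

first_link = soup.find("a", class_="nav")                # first match only
all_links = soup.find_all("a", attrs={"class": "nav"})   # list of every match

print(first_link.text)          # text content
print(first_link.get("href"))   # a single attribute value
print(all_links[1].attrs)       # every attribute as a dict ("class" is a list)
print(first_link.get("title"))  # missing attribute -> None instead of KeyError
print(first_link.parent.name)   # the enclosing <li>

ul = soup.find("ul", id="links")
# .children yields only direct children (including whitespace strings); keep tags only
child_tags = [c.name for c in ul.children if c.name is not None]
# .descendants walks the whole subtree
descendant_tags = [d.name for d in ul.descendants if d.name is not None]
print(child_tags)
print(descendant_tags)
```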

Handling Dynamic Websites with Selenium

So far, we’ve tackled static websites, whose content is already present in the HTML the server sends. But the internet is a dynamic place! Many websites use JavaScript to load content after the initial page load, presenting a challenge for our simple `requests` and Beautiful Soup approach, since `requests` does not run JavaScript. This is where Selenium comes in, a powerful tool that allows us to interact with websites as a real user would.

Selenium essentially acts as a bridge between your Python code and a web browser. It automates browser actions like clicking buttons, filling forms, and scrolling down a page, allowing you to access the fully rendered content, including that generated by JavaScript. This is crucial for scraping dynamic websites, which often hide data behind asynchronous loading or complex interactions.

Selenium’s Interaction with Web Browsers

Selenium achieves this interaction by controlling a web browser instance (like Chrome, Firefox, or Edge) programmatically. It sends commands to the browser, instructing it to navigate to a URL, interact with elements on the page, and retrieve the resulting HTML. This process mimics a user’s actions, ensuring that all JavaScript is executed and the data you want is available. The browser then acts as an intermediary, rendering the page completely, including all dynamically loaded content. Selenium then extracts the data from the fully rendered page, providing you with the complete picture.

Scraping JavaScript-Rendered Websites with Selenium

Let’s see how to use Selenium to scrape a website that uses JavaScript to load data. Assume we want to scrape product titles from an e-commerce site that loads product details using AJAX calls after the page loads. First, install Selenium with `pip install selenium`. Selenium also needs a WebDriver, a program that controls the browser; for Chrome, that is ChromeDriver. Since Selenium 4.6, the bundled Selenium Manager can download a matching driver automatically, so installing ChromeDriver by hand is optional.

The code below demonstrates a basic example. If you manage ChromeDriver yourself, replace `"YOUR_CHROME_DRIVER_PATH"` with the actual path to the executable; otherwise, create the driver with `webdriver.Chrome()` and no arguments. The specific selectors (CSS selectors or XPath expressions) will depend on the website’s structure, and you’ll need to inspect the website’s HTML using your browser’s developer tools to find the appropriate selectors.

```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC

driver_path = "YOUR_CHROME_DRIVER_PATH"

# Selenium 4 takes the driver path through a Service object
# (the old executable_path argument has been removed)
driver = webdriver.Chrome(service=Service(driver_path))

try:
    driver.get("https://www.example-ecommerce-site.com/products")  # Replace with your target URL

    # Wait up to 10 seconds for the products to load (adjust the wait time as needed)
    WebDriverWait(driver, 10).until(
        EC.presence_of_all_elements_located((By.CSS_SELECTOR, ".product-title"))
    )

    product_titles = driver.find_elements(By.CSS_SELECTOR, ".product-title")  # Replace with the actual CSS selector
    for title in product_titles:
        print(title.text)
finally:
    driver.quit()  # Always close the browser, even if an error occurred
```

This code first initializes the webdriver, then navigates to the target URL. It then uses `WebDriverWait` to ensure that the product titles have loaded before attempting to access them. Finally, it extracts the text content of each product title element and prints it to the console. Remember to always respect a website’s `robots.txt` and terms of service when scraping. Excessive scraping can overload servers, so be mindful of your requests.

Data Extraction and Cleaning



So you’ve successfully scraped a webpage – congrats! But raw scraped data is like a diamond in the rough; it needs polishing to be truly valuable. This section dives into the crucial steps of extracting the specific information you need and then cleaning it up for analysis or storage. We’ll cover techniques for handling various data types and formats, ensuring your data is ready for its close-up.

Data extraction and cleaning are iterative processes. You might need to refine your extraction methods based on the structure of the website and the quality of the scraped data. Thorough cleaning ensures data accuracy and consistency, preventing errors down the line. This is where the magic happens; turning messy web data into usable insights.

Extracting Data from Scraped HTML

Extracting data involves pinpointing the relevant elements within the scraped HTML and retrieving their content. Beautiful Soup makes this surprisingly straightforward. We can use various methods, depending on the HTML structure; finding elements by tag name, class, ID, or attributes are common techniques. For example, if we want all the product titles from a shopping website, and these titles are in tags with the class `product-title`, we can call Beautiful Soup’s `find_all` method with `class_="product-title"`. This returns a list of all the matching elements, from which we can then extract the text content.

Consider a scenario where you’re scraping product information from an e-commerce site. Each product is represented by a `div` with the class “product”. Inside each “product” div, the price is within a `span` tag with the class “price”. Using Beautiful Soup, you could iterate through each “product” div, find the “price” span within it, and extract the price value as a string. This string would then need further cleaning to remove any currency symbols or formatting.
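The scenario above translates into a short loop. This sketch uses hypothetical markup and records `None` for a product without a price instead of raising `AttributeError`; the result is still raw text that needs cleaning.

```python
from bs4 import BeautifulSoup

# Hypothetical markup matching the scenario: div.product containing span.price
html = """
<div class="product"><h2>Awesome Widget</h2><span class="price"> $29.99 </span></div>
<div class="product"><h2>Super Gadget</h2><span class="price">$49.99</span></div>
<div class="product"><h2>Mystery Box</h2></div>
"""

soup = BeautifulSoup(html, "html.parser")
raw_prices = []
for product in soup.find_all("div", class_="product"):
    price_tag = product.find("span", class_="price")  # search only inside this product
    # Some products may have no price element; record None rather than crashing
    raw_prices.append(price_tag.get_text(strip=True) if price_tag else None)

print(raw_prices)
```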

Cleaning and Transforming Extracted Data

Raw scraped data often contains unwanted characters, inconsistencies in formatting, and missing values. Cleaning involves removing or correcting these issues to ensure data quality. Common cleaning tasks include removing extra whitespace, handling special characters, converting data types (e.g., strings to numbers or dates), and dealing with missing or inconsistent data.

For instance, if you’re extracting prices, you might find values like “$19.99”, “£25.50”, or “10.00 EUR”. Cleaning this data would involve removing the currency symbols, converting the strings to floating-point numbers, and potentially standardizing the currency to a single unit.
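Here is a minimal cleaning sketch for the price formats above. The currency table and the assumption that `.` is the decimal separator are illustrative. It returns `Decimal` rather than `float` because binary floats can’t represent values like 19.99 exactly (call `float()` on the result if you prefer). Converting everything to one currency would additionally need exchange rates, which this sketch leaves out.

```python
import re
from decimal import Decimal

# Hypothetical mapping from symbols/codes found in the text to ISO currency codes
CURRENCY_MARKERS = {"$": "USD", "£": "GBP", "€": "EUR", "USD": "USD", "GBP": "GBP", "EUR": "EUR"}

def clean_price(raw):
    """Split a scraped price like '$19.99' or '10.00 EUR' into (amount, currency).

    Assumes '.' is the decimal separator and ',' a thousands separator;
    formats like '10,00 €' would need extra handling.
    """
    if raw is None:
        return None, None
    text = " ".join(raw.split())  # collapse stray whitespace and newlines
    currency = next((code for marker, code in CURRENCY_MARKERS.items() if marker in text), None)
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if not match:
        return None, currency  # no number at all, e.g. "Call for price"
    return Decimal(match.group().replace(",", "")), currency

for raw in ["$19.99", "£25.50", "10.00 EUR", " $1,299.00\n", "Call for price"]:
    print(repr(raw), "->", clean_price(raw))
```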

Handling Different Data Formats

Web scraping often involves dealing with diverse data formats. Text data requires handling whitespace, special characters, and encoding issues. Numeric data might need to be converted to the appropriate data type and handled for potential errors. Dates often come in various formats, requiring parsing and standardization.

Let’s imagine you are scraping a news website and need to extract publication dates. You might encounter dates in formats like “October 26, 2023”, “26/10/2023”, or “2023-10-26”. Python’s `datetime` module can parse each of these formats with `datetime.strptime()` and convert them into a consistent, standardized format (e.g., YYYY-MM-DD) for easier analysis and storage. Error handling is key here: not all scraped dates will be perfectly formatted, so your code must cope with values that match none of the expected formats.
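A minimal sketch of that approach tries each known format in turn. The format list is an assumption based on the three examples: `%d/%m/%Y` treats “03/04/2023” as day-first, so you must know the site’s convention, and `%B` matches English month names under Python’s default C locale.

```python
from datetime import datetime

# Formats from the examples above; "%d/%m/%Y" assumes day-first dates
DATE_FORMATS = ["%B %d, %Y", "%d/%m/%Y", "%Y-%m-%d"]

def normalize_date(raw):
    """Return the date as 'YYYY-MM-DD', or None if no known format matches."""
    if not raw:
        return None
    text = raw.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue  # try the next format
    return None  # unparseable: keep the record but flag the date as missing

for raw in ["October 26, 2023", "26/10/2023", "2023-10-26", "last Tuesday"]:
    print(raw, "->", normalize_date(raw))
```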

Data Storage and Management

So you’ve successfully scraped your data – congratulations! But the journey doesn’t end there. Raw scraped data is like a pile of LEGO bricks; you have all the pieces, but you need a system to build something amazing. This section dives into how to effectively store and manage your web-scraped treasure trove, ensuring it’s organized, accessible, and ready for analysis. We’ll explore various methods, from simple text files to robust databases, helping you choose the best approach for your project’s scale and complexity.

Data storage is a crucial aspect of any web scraping project. Choosing the right method depends on factors like data volume, structure, and how you plan to use the data later. Incorrect storage can lead to inefficiencies, data loss, or difficulties in accessing and analyzing your hard-earned information. Let’s look at some common solutions.

Storing Data in CSV Files

CSV (Comma Separated Values) files are a straightforward and widely compatible option for storing tabular data. Each line in a CSV represents a row, and values within a row are separated by commas. Python’s `csv` module provides tools for easily writing and reading CSV files. For example, imagine you scraped product information (name, price, description). You could store this in a CSV like this:

```python
import csv

data = [
    ["Product Name", "Price", "Description"],
    ["Awesome Widget", "29.99", "A really great widget!"],
    ["Super Gadget", "49.99", "This gadget is super awesome!"],
]

# newline="" lets the csv module control line endings (avoids blank rows on Windows)
with open("products.csv", "w", newline="", encoding="utf-8") as csvfile:
    writer = csv.writer(csvfile)
    writer.writerows(data)
```

This creates a `products.csv` file containing your neatly organized product data. CSV is great for smaller datasets and when you need a simple, human-readable format. However, it struggles with complex data structures.

Storing Data in JSON Files

JSON (JavaScript Object Notation) is a lightweight data-interchange format ideal for representing structured data. Unlike CSV’s tabular structure, JSON allows for nested objects and arrays, making it suitable for more complex data. The Python `json` module simplifies JSON handling. Continuing with our product example, JSON might look like this:

```python
import json

data = [
    {"name": "Awesome Widget", "price": 29.99, "description": "A really great widget!"},
    {"name": "Super Gadget", "price": 49.99, "description": "This gadget is super awesome!"},
]

with open("products.json", "w", encoding="utf-8") as jsonfile:
    json.dump(data, jsonfile, indent=4)  # indent=4 pretty-prints the output
```

This generates a `products.json` file with a more flexible and easily parsed structure, especially beneficial for applications that need to handle nested or varied data.

Storing Data in SQLite Databases

For larger datasets or when you need more advanced querying capabilities, a database is the way to go. SQLite is a lightweight, serverless database stored in a single file, well suited to small and medium-sized projects. Python’s built-in `sqlite3` module provides convenient database interaction.

```python
import sqlite3

conn = sqlite3.connect("products.db")
cursor = conn.cursor()

cursor.execute("""
    CREATE TABLE IF NOT EXISTS products (
        name TEXT,
        price REAL,
        description TEXT
    )
""")

data = [
    ("Awesome Widget", 29.99, "A really great widget!"),
    ("Super Gadget", 49.99, "This gadget is super awesome!"),
]

# ? placeholders let sqlite3 insert the values safely
cursor.executemany("INSERT INTO products VALUES (?, ?, ?)", data)
conn.commit()
conn.close()
```

This creates an SQLite database (`products.db`) with a table to store your product information. SQLite offers the advantages of structured query language (SQL) for efficient data retrieval and manipulation, handling much larger datasets more efficiently than simple files.
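Once the data is in SQLite, retrieval is a matter of writing SQL. This sketch uses an in-memory database holding the same two products and a hypothetical price filter; the `?` placeholder passes values safely instead of formatting them into the SQL string.

```python
import sqlite3

# In-memory database so the example leaves no file behind; use "products.db" to persist
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, price REAL, description TEXT)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?, ?)",
    [
        ("Awesome Widget", 29.99, "A really great widget!"),
        ("Super Gadget", 49.99, "This gadget is super awesome!"),
    ],
)
conn.commit()

max_price = 40  # hypothetical filter value
rows = conn.execute(
    "SELECT name, price FROM products WHERE price < ? ORDER BY price",
    (max_price,),
).fetchall()
print(rows)

# Aggregates are computed by the database instead of in Python
count, avg_price = conn.execute("SELECT COUNT(*), AVG(price) FROM products").fetchone()
print(count, round(avg_price, 2))
conn.close()
```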

Managing Large Datasets

When dealing with substantial amounts of scraped data, efficient management is key. Consider these strategies:

Data chunking: Instead of processing everything at once, break down your scraping and storage into smaller, manageable chunks. This reduces memory consumption and improves performance.

Database indexing: If using a database, create indexes on frequently queried columns to speed up data retrieval.

Data compression: Compressing your data files (e.g., using gzip) reduces storage space and improves transfer speeds.

Data cleaning and normalization: Before storage, clean and normalize your data to ensure consistency and accuracy. This includes handling missing values, removing duplicates, and standardizing formats.
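The chunking, indexing, and compression strategies can be combined in a few lines of standard-library code. In this sketch, `scraped_records()` is a hypothetical stand-in for your scraper, the batch size of 1000 is arbitrary, the database is in memory (swap in a file path for real use), and the compressed export goes to the system temp directory.

```python
import gzip
import json
import os
import sqlite3
import tempfile
from itertools import islice

def chunked(iterable, size):
    """Yield lists of up to `size` items, so only one batch is held in memory at a time."""
    it = iter(iterable)
    while batch := list(islice(it, size)):
        yield batch

def scraped_records(n):
    """Hypothetical stand-in for a scraper that yields one record at a time."""
    for i in range(n):
        yield {"name": f"Product {i}", "price": 10 + i * 0.5}

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, price REAL)")
# Index the column you filter on most often so lookups avoid a full table scan
conn.execute("CREATE INDEX IF NOT EXISTS idx_products_price ON products (price)")

for batch in chunked(scraped_records(2500), size=1000):
    conn.executemany("INSERT INTO products VALUES (:name, :price)", batch)
    conn.commit()  # with a file-based database, a crash loses at most the current batch

total = conn.execute("SELECT COUNT(*) FROM products").fetchone()[0]

# Export as gzip-compressed JSON Lines: one JSON object per line
out_path = os.path.join(tempfile.gettempdir(), "products.jsonl.gz")
with gzip.open(out_path, "wt", encoding="utf-8") as f:
    for name, price in conn.execute("SELECT name, price FROM products ORDER BY rowid"):
        f.write(json.dumps({"name": name, "price": price}) + "\n")
conn.close()

print(total, "rows stored; compressed export at", out_path)
```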

Advanced Web Scraping Techniques

So, you’ve mastered the basics of web scraping with Python: you can make requests, parse HTML, and even handle some dynamic content. But real-world web scraping is far more nuanced and challenging. This section covers the advanced techniques you’ll need to extract data efficiently and ethically: dealing with pagination and complex website structures, handling anti-scraping measures, and following responsible scraping practices. Mastering them will take you from novice scraper to seasoned data extraction pro.

Handling Pagination and Complex Website Structures

Many websites present data across multiple pages, requiring sophisticated techniques to scrape all the information. Understanding the website’s pagination structure—whether it uses query parameters, “next” buttons, or other methods—is crucial. For websites with complex structures, using CSS selectors or XPath expressions with Beautiful Soup becomes increasingly important to pinpoint the specific elements containing the desired data. Consider using libraries like Scrapy, which provides built-in support for crawling multiple pages and handling complex website navigation. For example, if a website uses numbered pages like “example.com/page/1”, “example.com/page/2”, etc., a simple loop can iterate through the pages, constructing the URL for each iteration. More complex structures may require recursive functions or more sophisticated parsing techniques.
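Both pagination styles can be sketched briefly. The URL pattern, the `li.item` and `a.next` selectors, and the fake pages are all assumptions for illustration. `fetch_html` is any function that returns a page’s HTML, which keeps the crawling logic separate from the network code; in practice it would wrap `requests.get()` with a delay between calls.

```python
from urllib.parse import urljoin
from bs4 import BeautifulSoup

def numbered_page_urls(base, first, last):
    """URLs for sites that paginate as /page/1, /page/2, ... (pattern assumed)."""
    return [f"{base}/page/{n}" for n in range(first, last + 1)]

def follow_next_links(fetch_html, start_url, item_selector, next_selector="a.next", max_pages=50):
    """Yield item texts page by page, following the 'next' link until there is none."""
    url, seen = start_url, set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)  # guard against pagination loops
        soup = BeautifulSoup(fetch_html(url), "html.parser")
        for item in soup.select(item_selector):
            yield item.get_text(strip=True)
        link = soup.select_one(next_selector)
        # Relative hrefs like "/list?page=2" are resolved against the current page URL
        url = urljoin(url, link["href"]) if link and link.get("href") else None

# Offline demo: a dict of fake pages stands in for the network
FAKE_SITE = {
    "https://example.com/list": '<li class="item">A</li><li class="item">B</li>'
                                '<a class="next" href="/list?page=2">Next</a>',
    "https://example.com/list?page=2": '<li class="item">C</li>',
}
items = list(follow_next_links(FAKE_SITE.__getitem__, "https://example.com/list", "li.item"))
print(items)
print(numbered_page_urls("https://example.com", 1, 3))
```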

Dealing with Anti-Scraping Measures

Websites employ various anti-scraping measures to protect their data. These include rate limiting (restricting the number of requests per time unit), IP blocking (banning specific IP addresses), and CAPTCHAs (tests to distinguish humans from bots). To stay within rate limits, add delays between requests using Python’s `time.sleep()` function, and back off further when the server responds with 429 Too Many Requests. Rotating proxies (using different IP addresses for different requests) can help avoid IP bans. Browser automation tools like Selenium execute JavaScript, so they can render dynamic content and get past some checks that block clients which don’t run scripts. For CAPTCHAs, consider using CAPTCHA-solving services (though this often incurs a cost and raises ethical considerations). Remember, always respect the website’s `robots.txt` file, which outlines which parts of the site should not be scraped.
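A minimal sketch of polite request pacing: a fixed pause before every request, plus exponential backoff when the server answers 429 or a 5xx error. The function name, default delays, and retry count are assumptions. It accepts any object with a `requests`-style `.get()` (such as `requests.Session()`), honors a numeric `Retry-After` header (a date-form value falls back to the backoff schedule), and the fake session at the bottom lets it run offline.

```python
import time
from types import SimpleNamespace

def polite_get(session, url, *, base_delay=1.0, max_retries=4, timeout=10, sleep=time.sleep):
    """GET `url`, pausing before each request and backing off on 429 or 5xx responses.

    `sleep` is injectable so the logic can be tested without real waiting.
    """
    for attempt in range(max_retries + 1):
        sleep(base_delay)  # fixed pause before every request
        response = session.get(url, timeout=timeout)
        if response.status_code != 429 and response.status_code < 500:
            return response  # success, or an error that retrying won't fix (e.g. 404)
        if attempt == max_retries:
            break  # out of retries: don't wait again
        # Honor a numeric Retry-After header; otherwise wait 2x, 4x, 8x ... base_delay
        retry_after = response.headers.get("Retry-After", "")
        wait = float(retry_after) if retry_after.isdigit() else base_delay * 2 ** (attempt + 1)
        sleep(wait)
    return response  # still failing; let the caller decide what to do

# Offline demo: a fake session answers 429, then 503, then 200
class FakeSession:
    def __init__(self, codes):
        self.codes = list(codes)
        self.calls = 0

    def get(self, url, timeout):
        self.calls += 1
        code = self.codes.pop(0)
        headers = {"Retry-After": "3"} if code == 429 else {}
        return SimpleNamespace(status_code=code, headers=headers)

waits = []
fake = FakeSession([429, 503, 200])
result = polite_get(fake, "https://example.com", sleep=waits.append)
print(result.status_code, fake.calls, waits)
```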

Rotating Proxies and User Agents

Websites often identify and block scrapers based on repeated requests from the same IP address or user agent. Routing requests through rotating proxies masks your IP address, making it appear as though requests originate from various locations. Similarly, rotating user agents (the strings identifying your browser) prevents detection based on a single, constant user agent string. Many services offer proxy rotation, often as part of a paid subscription. With `requests`, implementing proxy rotation means passing a different `proxies` dictionary on each request, either by hand or through a dedicated proxy management library. Rotating user agents works the same way: pick a string from a list of common user agents and send it in the `User-Agent` header of each request.
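Here is a minimal sketch of rotation for `requests`. The user-agent strings and proxy addresses are placeholders (the `.example` hosts don’t exist), and round-robin proxies with randomly chosen user agents is just one simple policy. `requests` does accept a `proxies` dictionary keyed by URL scheme, as used here.

```python
import itertools
import random

# Placeholder values: replace with real user-agent strings and proxy endpoints
# from a provider you are authorized to use
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]
PROXIES = ["http://proxy1.example:8080", "http://proxy2.example:8080"]

proxy_cycle = itertools.cycle(PROXIES)  # round-robin through the proxies

def next_request_kwargs():
    """Keyword arguments for requests.get(): a random user agent and the next proxy."""
    proxy = next(proxy_cycle)
    return {
        "headers": {"User-Agent": random.choice(USER_AGENTS)},
        "proxies": {"http": proxy, "https": proxy},  # scheme -> proxy URL
        "timeout": 10,
    }

# Usage (not executed here): requests.get(url, **next_request_kwargs())
first, second, third = (next_request_kwargs() for _ in range(3))
print(first["proxies"]["https"], second["proxies"]["https"], third["proxies"]["https"])
```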

Ethical and Responsible Web Scraping Best Practices

Before you start scraping, it’s crucial to understand the ethical and legal implications. Respecting website terms of service and adhering to `robots.txt` rules is paramount. Avoid overloading the target server with requests; implement delays and respect rate limits. Always be mindful of the data you’re collecting and how you’re using it. Consider the privacy implications of scraping personal data and ensure compliance with relevant data protection laws.

- Always check the website’s `robots.txt` file before scraping.

- Respect the website’s terms of service and any usage policies.

- Implement delays between requests to avoid overloading the server.

- Use polite user agents and identify yourself as a scraper where possible.

- Never scrape personal data without explicit consent.

- Comply with all applicable data protection laws and regulations.

- Use your scraped data responsibly and ethically.

- Consider the impact of your scraping on the website and its users.

Illustrative Example

Let’s dive into a practical example of web scraping. We’ll build a Python script to extract product information – name, price, and description – from a fictional e-commerce website. This will solidify your understanding of the techniques discussed earlier. Remember, always respect a website’s robots.txt and terms of service before scraping.

This example uses a simplified HTML structure for clarity. Real-world e-commerce sites have far more complex HTML, but the core principles remain the same. We’ll target specific HTML tags and attributes to pinpoint the data we need.

Target HTML Structure and Data Extraction

Imagine our fictional e-commerce website displays product information like this:

<div class="product">

<h2 class="product-name">Awesome Widget</h2>

<span class="product-price">$29.99</span>

<p class="product-description">This is an awesome widget! It does amazing things!</p>

</div>

This snippet shows a <div> with class “product” containing the product name, price, and description within their respective tags. We’ll use Beautiful Soup to parse this structure and extract the relevant information.

Python Script for Data Extraction

Here’s a Python script that uses the `requests` library to fetch the HTML content and `Beautiful Soup` to parse it and extract the data:

```python
import requests
from bs4 import BeautifulSoup

# Fictional URL (replace with actual URL if testing on a real website)
url = "http://example.com/products"

# timeout keeps the script from hanging on an unresponsive server
response = requests.get(url, timeout=10)
response.raise_for_status()  # Raise HTTPError for bad responses (4xx or 5xx)

soup = BeautifulSoup(response.content, "html.parser")
products = soup.find_all("div", class_="product")

for product in products:
    name = product.find("h2", class_="product-name").text.strip()
    price = product.find("span", class_="product-price").text.strip()
    description = product.find("p", class_="product-description").text.strip()

    print(f"Product Name: {name}")
    print(f"Price: {price}")
    print(f"Description: {description}")
    print("-" * 20)
```

This script first fetches the HTML content from the specified URL using `requests.get()`. It then uses Beautiful Soup to parse the content and find all <div> elements with the class “product”. Finally, it iterates through each product, extracting the name, price, and description using `find()` and accessing the `.text` attribute to get the text content. The `.strip()` method removes leading/trailing whitespace.

Error Handling and Robustness

Real-world scraping often encounters unexpected situations. For instance, a product might be missing a description, or the website structure might change. When `find()` matches nothing it returns `None`, and accessing `.text` on `None` raises an `AttributeError` that stops the script. Wrapping these lookups in `try-except` blocks lets the script handle such cases gracefully instead of crashing. For example:

```python
# Inside the `for product in products:` loop from the script above
try:
    name = product.find("h2", class_="product-name").text.strip()
except AttributeError:
    name = "N/A"  # Handle case where product name is missing
```

This improved code snippet handles the case where a product might not have a name, assigning “N/A” instead of causing an error. Similar error handling should be implemented for price and description. This ensures the script continues running even if some data is missing from individual product entries.
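The same idea can be wrapped in a small helper so that every field gets a fallback without repeating `try-except` three times. This sketch checks for `None` explicitly rather than catching `AttributeError`, and it parses the sample markup from above directly, so no network access is needed. The second product (which has no description) and the helper name `text_or_default` are made up for illustration.

```python
from bs4 import BeautifulSoup

# The sample markup from above, plus a hypothetical second product with no description.
HTML = """
<div class="product">
  <h2 class="product-name">Awesome Widget</h2>
  <span class="product-price">$29.99</span>
  <p class="product-description">This is an awesome widget! It does amazing things!</p>
</div>
<div class="product">
  <h2 class="product-name">Mystery Gadget</h2>
  <span class="product-price">$9.99</span>
</div>
"""


def text_or_default(parent, tag, css_class, default="N/A"):
    """Return the stripped text of the first matching child, or default if it is missing."""
    element = parent.find(tag, class_=css_class)
    return element.get_text(strip=True) if element is not None else default


soup = BeautifulSoup(HTML, "html.parser")
rows = []
for product in soup.find_all("div", class_="product"):
    rows.append({
        "name": text_or_default(product, "h2", "product-name"),
        "price": text_or_default(product, "span", "product-price"),
        "description": text_or_default(product, "p", "product-description"),
    })

for row in rows:
    print(row)
```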

Last Point

So, you’ve learned the art of web scraping with Python! From crafting elegant requests to taming dynamic websites and cleaning your extracted data, you’re now ready to explore the boundless ocean of online information. Remember to always respect website terms of service and scrape responsibly. The world of data awaits – go forth and conquer (ethically, of course)!

XPath for Element Selection

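XPath offers another powerful method for navigating and selecting elements in an HTML document. Unlike CSS selectors, XPath uses a path-like syntax to traverse the document tree, which makes it particularly useful for complex or deeply nested structures. Note that Beautiful Soup does not support XPath: its `select()` method accepts only CSS selectors. To use XPath you need a library such as `lxml`, where calling `.xpath('//div[@id="main-content"]/p')` on a parsed document would select all `<p>` tags that are direct children of a `<div>` whose `id` is `main-content`. The equivalent CSS selector in Beautiful Soup is `soup.select('div#main-content > p')`.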
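Since Beautiful Soup cannot evaluate XPath, the sketch below uses the standard library's `xml.etree.ElementTree`, which supports a limited subset of XPath (the descendant step `//`, the child step `/`, and `[@attr='value']` predicates are enough here). It requires well-formed markup, so the sample page is hypothetical and deliberately tidy. For real-world HTML, the third-party `lxml` package (`lxml.html.fromstring(page).xpath(...)`) is the more common choice and supports full XPath 1.0.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed page. ElementTree needs valid XML, unlike lxml.html.
PAGE = """
<html>
  <body>
    <div id="sidebar"><p>Ignore me</p></div>
    <div id="main-content">
      <p>First paragraph</p>
      <p>Second paragraph</p>
      <section><p>Nested, not a direct child</p></section>
    </div>
  </body>
</html>
"""

root = ET.fromstring(PAGE)

# ElementTree's XPath subset: the equivalent of //div[@id="main-content"]/p,
# written relative to the root element. The final /p matches direct children only.
paragraphs = root.findall(".//div[@id='main-content']/p")
texts = [p.text for p in paragraphs]
print(texts)
```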

Extracting Data from HTML Elements

Once you’ve selected the relevant elements, extracting the data is equally straightforward. Beautiful Soup gives you several ways to access an element: the `.text` attribute returns its text content, the `.get()` method returns the value of one of its attributes, and `.find()`, `.find_all()`, and the `.children` attribute let you navigate the element tree. For instance, `element.text` gives you the text content of the element, while `element.get('href')` retrieves the value of its `href` attribute.

Understanding how to effectively use these methods is crucial for efficient data extraction.

- `.find(tag, attributes)`: Finds the first matching tag with the specified attributes.

- `.find_all(tag, attributes)`: Finds all matching tags with the specified attributes. Returns a list.

- `.select(css_selector)`: Finds elements using CSS selectors. Returns a list.

- `.text`: Extracts the text content of an element.

- `.get(attribute_name)`: Extracts the value of the specified attribute.

- `.attrs`: Returns a dictionary containing all attributes of an element.

- `.parent`: Returns the parent element.

- `.children`: Returns an iterator over the element's direct children.

- `.descendants`: Returns a generator yielding all descendants.
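To see these methods side by side, here is a small sketch that runs each of them against a made-up navigation snippet. The markup, `href` values, and class names are illustrative only. Note that `class_="nav"` also matches the second link, because Beautiful Soup treats `class` as a multi-valued attribute.

```python
from bs4 import BeautifulSoup, Tag

# Hypothetical markup used only to exercise the methods listed above.
HTML = """
<ul id="links">
  <li><a href="/docs" class="nav">Docs</a></li>
  <li><a href="/blog" class="nav external">Blog</a></li>
</ul>
"""

soup = BeautifulSoup(HTML, "html.parser")

first_link = soup.find("a", class_="nav")      # first match only
all_links = soup.find_all("a", class_="nav")   # every match, as a list
css_links = soup.select("ul#links > li > a")   # the same elements via a CSS selector

print(first_link.text)           # text content: Docs
print(first_link.get("href"))    # a single attribute: /docs
print(all_links[1].attrs)        # every attribute as a dict
print(first_link.parent.name)    # the enclosing tag: li

ul = soup.find("ul")
# .children also yields the whitespace strings between tags, so keep only Tag objects
child_tags = [child.name for child in ul.children if isinstance(child, Tag)]
# .descendants walks the whole subtree: each <li>, each <a>, and their text
link_count = sum(1 for node in ul.descendants if isinstance(node, Tag) and node.name == "a")
```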

Handling Dynamic Websites with Selenium

So far, we’ve tackled static websites, whose content is already present in the HTML the server sends back. But the internet is a dynamic place! Many websites use JavaScript to load content after the initial page load, presenting a challenge for our simple `requests` and Beautiful Soup approach. This is where Selenium comes in, a powerful tool that allows us to interact with websites as a real user would.

Selenium essentially acts as a bridge between your Python code and a web browser. It automates browser actions like clicking buttons, filling forms, and scrolling down a page, allowing you to access the fully rendered content, including that generated by JavaScript. This is crucial for scraping dynamic websites, which often hide data behind asynchronous loading or complex interactions.

Selenium’s Interaction with Web Browsers

Selenium achieves this by controlling a web browser instance (such as Chrome, Firefox, or Edge) programmatically. It sends commands to the browser, instructing it to navigate to a URL, interact with elements on the page, and return the resulting HTML. Because a real browser renders the page, all JavaScript runs and dynamically loaded content ends up in the page, just as it would for a human visitor. Selenium then reads the data from this fully rendered page.

Scraping JavaScript-Rendered Websites with Selenium

Let’s see how to use Selenium to scrape a website that uses JavaScript to load data. Assume we want to scrape product titles from an e-commerce site that loads product details using AJAX calls after the page loads. First, install Selenium (`pip install selenium`) and make sure a webdriver (a program that controls the browser) is available. To use Chrome, for example, you need ChromeDriver; since Selenium 4.6, the bundled Selenium Manager can usually download a matching driver automatically if none is found.

The code below demonstrates a basic example. Remember to replace `"YOUR_CHROME_DRIVER_PATH"` with the actual path to your ChromeDriver executable, or, with Selenium 4.6 and later, omit the path and let Selenium Manager locate the driver. The specific selectors (CSS selectors or XPath expressions) depend on the website’s structure, so inspect the page’s HTML with your browser’s developer tools to find the appropriate ones.

```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC

driver_path = "YOUR_CHROME_DRIVER_PATH"

# Selenium 4 takes the driver path through a Service object;
# the old executable_path argument has been removed.
driver = webdriver.Chrome(service=Service(driver_path))

try:
    driver.get("https://www.example-ecommerce-site.com/products")  # Replace with your target URL

    # Wait up to 10 seconds for the products to load (adjust the wait time as needed)
    WebDriverWait(driver, 10).until(
        EC.presence_of_all_elements_located((By.CSS_SELECTOR, ".product-title"))
    )

    product_titles = driver.find_elements(By.CSS_SELECTOR, ".product-title")  # Replace with the actual CSS selector
    for title in product_titles:
        print(title.text)
finally:
    # Close the browser even if the wait times out or an error occurs
    driver.quit()
```

This code first initializes the webdriver, then navigates to the target URL. It then uses `WebDriverWait` to ensure that the product titles have loaded before attempting to access them. Finally, it extracts the text content of each product title element and prints it to the console. Remember to always respect a website’s `robots.txt` and terms of service when scraping. Excessive scraping can overload servers, so be mindful of your requests.

Data Extraction and Cleaning



So you’ve successfully scraped a webpage – congrats! But raw scraped data is like a diamond in the rough; it needs polishing to be truly valuable. This section dives into the crucial steps of extracting the specific information you need and then cleaning it up for analysis or storage. We’ll cover techniques for handling various data types and formats, ensuring your data is ready for its close-up.

Data extraction and cleaning are iterative processes. You might need to refine your extraction methods based on the structure of the website and the quality of the scraped data. Thorough cleaning ensures data accuracy and consistency, preventing errors down the line. This is where the magic happens: turning messy web data into usable insights.

Extracting Data from Scraped HTML

Extracting data involves pinpointing the relevant elements within the scraped HTML and retrieving their content. Beautiful Soup makes this surprisingly straightforward. The right method depends on the HTML structure; finding elements by tag name, class, ID, or other attributes are common techniques. For example, if the product titles on a shopping website are all in elements with the class `product-title`, Beautiful Soup’s `find_all` method with `class_="product-title"` returns a list of every matching element, from which you can then extract the text content.

Consider a scenario where you’re scraping product information from an e-commerce site. Each product is represented by a `div` with the class “product”. Inside each “product” div, the price is within a `span` tag with the class “price”. Using Beautiful Soup, you could iterate through each “product” div, find the “price” span within it, and extract the price value as a string. This string would then need further cleaning to remove any currency symbols or formatting.
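The scenario above might look like this in code. It is a sketch against hypothetical markup that follows the described structure (`div.product` containing `span.price`), and it uses Beautiful Soup's CSS-selector methods `select()` and `select_one()` instead of `find_all()`. Products without a price are recorded as `None` so the list stays aligned with the products.

```python
from bs4 import BeautifulSoup

# Hypothetical listing markup matching the structure described above.
HTML = """
<div class="product"><h3>Desk Lamp</h3><span class="price"> $19.99 </span></div>
<div class="product"><h3>Notebook</h3><span class="price">$4.50</span></div>
<div class="product"><h3>Sold Out Item</h3></div>
"""

soup = BeautifulSoup(HTML, "html.parser")

raw_prices = []
for product in soup.select("div.product"):
    price_tag = product.select_one("span.price")  # None if this product has no price
    # get_text(strip=True) trims surrounding whitespace; the currency symbol stays for now
    raw_prices.append(price_tag.get_text(strip=True) if price_tag is not None else None)

print(raw_prices)
```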

Cleaning and Transforming Extracted Data

Raw scraped data often contains unwanted characters, inconsistencies in formatting, and missing values. Cleaning involves removing or correcting these issues to ensure data quality. Common cleaning tasks include removing extra whitespace, handling special characters, converting data types (e.g., strings to numbers or dates), and dealing with missing or inconsistent data.

For instance, if you’re extracting prices, you might find values like “$19.99”, “£25.50”, or “10.00 EUR”. Cleaning this data would involve removing the currency symbols, converting the strings to floating-point numbers, and potentially standardizing the currency to a single unit.
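A minimal cleaning function for price strings like these might look as follows. It assumes `.` is the decimal separator and `,` a thousands separator (so a European-style `10,50 €` would be misread), and it recognizes only the three currencies mentioned. It returns floats as described above; `decimal.Decimal` is a safer choice if you need exact monetary arithmetic. Converting everything to one currency would additionally require exchange rates, which this sketch does not attempt. The names `parse_price` and `CURRENCY_MARKERS` are illustrative.

```python
import re

# Map symbols and codes that appear in scraped strings to ISO currency codes.
CURRENCY_MARKERS = {"$": "USD", "£": "GBP", "€": "EUR", "USD": "USD", "GBP": "GBP", "EUR": "EUR"}

# Digits, optionally followed by groups like ",299" or ".99"
_NUMBER = re.compile(r"\d+(?:[.,]\d+)*")


def parse_price(raw):
    """Split a scraped price string into (amount, currency_code).

    Assumes '.' is the decimal separator and ',' a thousands separator.
    Returns (None, None) if the string contains no number.
    """
    match = _NUMBER.search(raw)
    if not match:
        return None, None
    amount = float(match.group().replace(",", ""))  # drop thousands separators
    currency = next((code for marker, code in CURRENCY_MARKERS.items() if marker in raw), None)
    return amount, currency


for raw in ["$19.99", "£25.50", "10.00 EUR"]:
    print(raw, "->", parse_price(raw))
```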

Handling Different Data Formats

Web scraping often involves dealing with diverse data formats. Text data requires handling whitespace, special characters, and encoding issues. Numeric data might need to be converted to the appropriate data type and handled for potential errors. Dates often come in various formats, requiring parsing and standardization.
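For the text and numeric cases, a couple of small standard-library helpers go a long way. This sketch (with the hypothetical names `clean_text` and `to_float`) decodes HTML entities, applies Unicode NFKC normalization (which, among other things, turns non-breaking spaces into ordinary spaces), collapses runs of whitespace, and converts numbers without raising on bad input.

```python
import html
import re
import unicodedata


def clean_text(raw):
    """Normalize a scraped text fragment; None passes through unchanged."""
    if raw is None:
        return None
    text = html.unescape(raw)                    # "&amp;" -> "&", "&nbsp;" -> "\xa0"
    text = unicodedata.normalize("NFKC", text)   # "\xa0" -> " ", full-width forms -> ASCII
    text = re.sub(r"\s+", " ", text)             # collapse spaces, tabs, and newlines
    return text.strip()


def to_float(raw):
    """Convert a numeric string such as '1,024' to float, returning None instead of raising."""
    try:
        return float(raw.replace(",", ""))
    except (AttributeError, ValueError):  # AttributeError: raw is None; ValueError: not a number
        return None


print(clean_text("  Fish &amp; Chips\n\t&nbsp;Special "))
print(to_float("1,024"), to_float("n/a"))
```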

Let’s imagine you are scraping a news website and need to extract publication dates. You might encounter dates in formats like “October 26, 2023”, “26/10/2023”, or “2023-10-26”. Python’s `datetime` module provides powerful tools to parse these various formats and convert them into a consistent, standardized format (e.g., YYYY-MM-DD) for easier analysis and storage. Keep in mind that a format like “26/10/2023” is ambiguous in general (day-first versus month-first), so you need to know which convention the site uses. Not all scraped dates will be well formed either, so your parsing code must handle values that match none of the expected formats.
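Here is one way to do that with `datetime.strptime`, trying a list of known formats in order. The format list is an assumption based on the three examples above; in particular, `%d/%m/%Y` treats `26/10/2023` as day-first, which must match the site's convention. Strings that fit none of the formats come back as `None` rather than raising. `normalize_date` and `DATE_FORMATS` are illustrative names.

```python
from datetime import datetime

# Formats seen on the hypothetical news site, tried in order.
# "%d/%m/%Y" assumes day-first dates; a US site would need "%m/%d/%Y" instead.
DATE_FORMATS = ["%B %d, %Y", "%d/%m/%Y", "%Y-%m-%d"]


def normalize_date(raw):
    """Return the date as 'YYYY-MM-DD', or None if no known format matches."""
    raw = raw.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw, fmt).date().isoformat()
        except ValueError:
            continue  # this format didn't match; try the next one
    return None


for raw in ["October 26, 2023", "26/10/2023", "2023-10-26", "yesterday"]:
    print(raw, "->", normalize_date(raw))
```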

Data Storage and Management

So you’ve successfully scraped your data – congratulations! But the journey doesn’t end there. Raw scraped data is like a pile of LEGO bricks; you have all the pieces, but you need a system to build something amazing. This section dives into how to effectively store and manage your web-scraped treasure trove, ensuring it’s organized, accessible, and ready for analysis. We’ll explore various methods, from simple text files to robust databases, helping you choose the best approach for your project’s scale and complexity.

Data storage is a crucial aspect of any web scraping project. Choosing the right method depends on factors like data volume, structure, and how you plan to use the data later. Incorrect storage can lead to inefficiencies, data loss, or difficulties in accessing and analyzing your hard-earned information. Let’s look at some common solutions.

Storing Data in CSV Files

CSV (Comma Separated Values) files are a straightforward and widely compatible option for storing tabular data. Each line in a CSV represents a row, and values within a row are separated by commas. Python’s `csv` module provides tools for easily writing and reading CSV files. For example, imagine you scraped product information (name, price, description). You could store this in a CSV like this:

```python
import csv

data = [
    ["Product Name", "Price", "Description"],
    ["Awesome Widget", "29.99", "A really great widget!"],
    ["Super Gadget", "49.99", "This gadget is super awesome!"],
]

# newline="" lets the csv module control line endings (avoids blank rows on Windows)
with open("products.csv", "w", newline="", encoding="utf-8") as csvfile:
    writer = csv.writer(csvfile)
    writer.writerows(data)
```

This creates a `products.csv` file containing your neatly organized product data. CSV is great for smaller datasets and when you need a simple, human-readable format. However, it struggles with complex data structures.

Storing Data in JSON Files

JSON (JavaScript Object Notation) is a lightweight data-interchange format ideal for representing structured data. Unlike CSV’s tabular structure, JSON allows for nested objects and arrays, making it suitable for more complex data. The Python `json` module simplifies JSON handling. Continuing with our product example, JSON might look like this:

```python
import json

data = [
    {"name": "Awesome Widget", "price": 29.99, "description": "A really great widget!"},
    {"name": "Super Gadget", "price": 49.99, "description": "This gadget is super awesome!"},
]

# indent=4 pretty-prints the output so the file is easy to read
with open("products.json", "w", encoding="utf-8") as jsonfile:
    json.dump(data, jsonfile, indent=4)
```

This generates a `products.json` file with a more flexible and easily parsed structure, especially beneficial for applications that need to handle nested or varied data.

Storing Data in SQLite Databases

For larger datasets or when you need more advanced querying capabilities, a database is the way to go. SQLite is a lightweight, file-based database system perfect for smaller projects. Python’s `sqlite3` module provides convenient database interaction.

```python
import sqlite3

conn = sqlite3.connect("products.db")
cursor = conn.cursor()

cursor.execute('''
    CREATE TABLE IF NOT EXISTS products (
        name TEXT,
        price REAL,
        description TEXT
    )
''')

data = [
    ("Awesome Widget", 29.99, "A really great widget!"),
    ("Super Gadget", 49.99, "This gadget is super awesome!"),
]

# The ? placeholders let sqlite3 insert the values safely (no manual quoting)
cursor.executemany("INSERT INTO products VALUES (?, ?, ?)", data)

conn.commit()
conn.close()
```

This creates an SQLite database (`products.db`) with a table to store your product information. With SQLite you can use SQL to filter, sort, and aggregate the data without loading everything into memory, which scales to much larger datasets than reading and rewriting flat CSV or JSON files.

Managing Large Datasets

When dealing with substantial amounts of scraped data, efficient management is key. Consider these strategies:

Data chunking: Instead of processing everything at once, break down your scraping and storage into smaller, manageable chunks. This reduces memory consumption and improves performance.

Database indexing: If using a database, create indexes on frequently queried columns to speed up data retrieval.

Data compression: Compressing your data files (e.g., using gzip) reduces storage space and improves transfer speeds.

Data cleaning and normalization: Before storage, clean and normalize your data to ensure consistency and accuracy. This includes handling missing values, removing duplicates, and standardizing formats.
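Three of these strategies can be combined in a short standard-library sketch: `chunked()` splits an incoming stream of records into fixed-size batches, `store_products()` writes each batch to SQLite (creating an index on `price`, an assumed frequently queried column) and commits after each batch, and `compress_records()` gzips a JSON serialization. The function names, chunk size, and index choice are illustrative, and the `:=` operator needs Python 3.8 or later.

```python
import gzip
import json
import sqlite3
from itertools import islice


def chunked(iterable, size):
    """Yield lists of up to `size` items so the full dataset never has to sit in memory."""
    it = iter(iterable)
    while chunk := list(islice(it, size)):
        yield chunk


def store_products(conn, records, chunk_size=500):
    """Insert (name, price, description) tuples chunk by chunk, committing after each one."""
    conn.execute("CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL, description TEXT)")
    # Assumption: price is the column queried most often, so it gets the index.
    conn.execute("CREATE INDEX IF NOT EXISTS idx_products_price ON products(price)")
    for chunk in chunked(records, chunk_size):
        conn.executemany("INSERT INTO products VALUES (?, ?, ?)", chunk)
        conn.commit()  # a crash mid-run loses at most the current chunk


def compress_records(records):
    """Serialize records to JSON and gzip them; returns the compressed bytes."""
    return gzip.compress(json.dumps(records).encode("utf-8"))


# A generator stands in for records produced lazily while scraping.
records = ((f"Item {i}", float(i), "placeholder description") for i in range(1234))
conn = sqlite3.connect(":memory:")  # use a file path such as "products.db" for real data
store_products(conn, records)
total = conn.execute("SELECT COUNT(*) FROM products").fetchone()[0]
print(total)
```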

Advanced Web Scraping Techniques

So, you’ve mastered the basics of web scraping with Python. You can make requests, parse HTML, and even handle some dynamic content. But the real world of web scraping is far more nuanced and challenging. This section covers the advanced techniques you’ll need to extract data efficiently and ethically: dealing with pagination and complex website structures, handling anti-scraping measures, and scraping responsibly. Mastering these techniques will take you from novice scraper to seasoned data extraction pro.

Handling Pagination and Complex Website Structures

Many websites present data across multiple pages, so scraping all of it takes more than a single request. Understanding the website’s pagination structure (whether it uses query parameters, “next” buttons, or other methods) is crucial. For websites with complex structures, precise CSS selectors (with Beautiful Soup) or XPath expressions (with a library such as `lxml`) become increasingly important for pinpointing the elements that contain the desired data. Consider using a framework like Scrapy, which provides built-in support for crawling multiple pages and handling complex website navigation. For example, if a website uses numbered pages like “example.com/page/1”, “example.com/page/2”, and so on, a simple loop can iterate through the pages, constructing the URL for each iteration. More complex structures may require recursive functions or more sophisticated parsing techniques.
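For the numbered-page case, a minimal loop might look like the following. It assumes the hypothetical URL pattern `<base>/page/N` and treats the first empty page as the end of the listing, with `max_pages` as a safety cap and a pause between pages. Fetching and parsing are passed in as functions (`fetch_page` and `parse_items` are names chosen for this sketch), which keeps the loop independent of `requests` or Beautiful Soup and lets the demo run against an in-memory stand-in for the site.

```python
import time


def scrape_all_pages(fetch_page, parse_items, base_url, max_pages=50, delay=1.0, sleep=time.sleep):
    """Collect items from base_url/page/1, /page/2, ... until a page yields no items.

    fetch_page(url) -> HTML string; parse_items(html) -> list of items.
    """
    results = []
    for page in range(1, max_pages + 1):
        url = f"{base_url.rstrip('/')}/page/{page}"
        items = parse_items(fetch_page(url))
        if not items:  # an empty page usually means we ran past the last one
            break
        results.extend(items)
        sleep(delay)  # be polite between page requests
    return results


# Demo against an in-memory stand-in for the site. With a real site, fetch_page could be
# lambda url: requests.get(url, timeout=10).text, and parse_items a Beautiful Soup function.
FAKE_SITE = {
    "https://example.com/page/1": "Widget,Gadget",
    "https://example.com/page/2": "Gizmo",
}

items = scrape_all_pages(
    fetch_page=lambda url: FAKE_SITE.get(url, ""),
    parse_items=lambda html: [name for name in html.split(",") if name],
    base_url="https://example.com",
    delay=0,
)
print(items)
```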

Dealing with Anti-Scraping Measures

Websites employ various anti-scraping measures to protect their data. These include rate limiting (restricting the number of requests per unit of time), IP blocking (banning specific IP addresses), and CAPTCHAs (tests that distinguish humans from bots). The simplest and most respectful response is to slow down: add delays between requests with Python’s `time.sleep()` function and back off when the server signals that you are sending too many requests. Rotating proxies (using different IP addresses for successive requests) can help avoid IP bans. Driving a real browser with Selenium executes the page’s JavaScript, which can get past checks that block clients that don’t run JavaScript, although many sites can also detect automated and headless browsers. For CAPTCHAs, consider using CAPTCHA-solving services (though this often incurs a cost and raises ethical considerations). Remember, always respect the website’s `robots.txt` file, which outlines which parts of the site should not be scraped.
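Backing off when the server pushes back can be sketched as follows. The helper retries a request when it gets HTTP 429 (Too Many Requests) or 503 (Service Unavailable), honoring a numeric `Retry-After` header and otherwise using exponential backoff with a little random jitter (a date-valued `Retry-After` falls back to the computed delay). `get_with_backoff` is a hypothetical helper: it accepts any `fetch` function returning an object with `.status_code` and `.headers`, which matches what `requests.get` returns, and the demo uses stand-in responses so it runs offline.

```python
import random
import time
from types import SimpleNamespace


def get_with_backoff(fetch, url, max_retries=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), waiting and retrying while the server answers 429 or 503."""
    for attempt in range(max_retries + 1):
        response = fetch(url)
        if response.status_code not in (429, 503):
            return response
        if attempt == max_retries:
            break  # out of retries: hand the last error response back to the caller
        retry_after = response.headers.get("Retry-After", "")
        if retry_after.isdigit():
            wait = float(retry_after)  # the server told us how long to wait
        else:
            wait = base_delay * 2 ** attempt + random.uniform(0, 0.5)  # 1s, 2s, 4s, ... plus jitter
        sleep(wait)
    return response


# Stand-in responses: two rate-limit replies, then success.
_replies = iter([
    SimpleNamespace(status_code=429, headers={"Retry-After": "3"}),
    SimpleNamespace(status_code=429, headers={}),
    SimpleNamespace(status_code=200, headers={}),
])
waits = []  # record the requested pauses instead of actually sleeping
final = get_with_backoff(lambda url: next(_replies), "https://example.com/products", sleep=waits.append)
print(final.status_code, waits)
```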
