Understanding the Basics of Docker for Developers: Dive into the world of containerization! Forget clunky virtual machines; Docker simplifies application deployment and management like magic. This guide unravels the mysteries of Docker, from its core concepts to advanced techniques, making you a Docker ninja in no time. We’ll explore Docker’s architecture, image management, container orchestration, networking, and security – all the essentials for modern developers.

We’ll cover everything from building your first Docker image to mastering Docker Compose for multi-container apps. Think of it as your ultimate cheat sheet to conquering the complexities of Docker and streamlining your development workflow. Get ready to level up your dev game!

Introduction to Docker

Docker has revolutionized the way developers build, ship, and run applications. It’s become a cornerstone of modern software development, simplifying deployment and improving consistency across different environments. This section will explore the fundamental concepts behind Docker and its impact on the software development lifecycle.

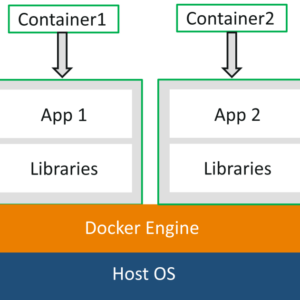

At its heart, Docker uses containerization. Think of it like this: instead of giving each application its own entire apartment building (a virtual machine), Docker gives each application its own self-contained apartment (a container). Each apartment has everything it needs, including its own kitchen, bathroom, and furniture (libraries, dependencies, and runtime environment), but it shares the same building structure (the host operating system’s kernel).

Containerization Explained

Containerization is a lightweight, operating-system-level form of virtualization. Unlike virtual machines (VMs), which virtualize hardware and each boot a full guest operating system, containers share the host operating system’s kernel and run as isolated processes. This makes them significantly more efficient in terms of resource usage and startup time. Containers package an application and all its dependencies into a single unit, ensuring consistency across development, testing, and production environments. This “packaging” happens through a Docker image, which acts as a blueprint for creating a container. The image contains all the necessary components (code, runtime, system tools, libraries, settings) to run the application.

A Brief History of Docker

Docker builds on Linux container technology that predates it: the Linux Containers (LXC) project was first released in 2008. Docker itself appeared in 2013, when Solomon Hykes and his team at dotCloud (later renamed Docker Inc.) released it as an open-source project. Early versions used LXC under the hood; Docker later replaced it with its own container runtime. By wrapping kernel features in a user-friendly tool, Docker made containerization accessible to a much wider audience. The project quickly gained popularity, and its open-source nature fostered a thriving community and ecosystem of tools and services. Its evolution has brought improvements in performance and security, built-in orchestration (Docker Swarm), and integration with Kubernetes and the major cloud platforms.

Virtual Machines vs. Docker Containers

Understanding the differences between VMs and Docker containers is crucial. While both provide isolation and portability, they achieve this in fundamentally different ways. The following table highlights these key distinctions:

| Feature | Virtual Machine (VM) | Docker Container |
|---|---|---|
| Operating System | Full guest OS per VM | Shares the host OS kernel |
| Resource Usage | High resource consumption | Lightweight and efficient |
| Boot Time | Slow boot times | Fast startup |
| Portability | Generally portable | Highly portable |

Docker Architecture

So, you’ve grasped the basics of Docker. Now, let’s dive into the engine room – the architecture that makes it all tick. Understanding Docker’s architecture is key to effectively leveraging its power and troubleshooting any issues. Think of it as understanding the blueprint of a powerful tool before you start building.

Docker’s architecture is surprisingly elegant in its simplicity, yet incredibly powerful in its functionality. It revolves around three main components: the Docker client, the Docker daemon (or Docker Engine), and the Docker registry. Let’s break down each one.

Docker Engine

The Docker Engine is the heart of the Docker system. Its central piece is a background process, the Docker daemon (`dockerd`), which builds, runs, and manages containers. Think of it as the conductor of an orchestra, ensuring all the instruments (containers) play in harmony. The Engine handles tasks such as image management, container creation, network management, and storage management. It creates the isolated container environments using features of the operating system’s kernel (namespaces and cgroups on Linux), delegating the low-level work to the containerd and runc runtimes. It’s written in Go.

Docker Client

The Docker client is your interface to the Docker Engine. This is what you interact with directly – the command-line interface (CLI) you use to build, run, and manage your containers. It’s essentially a messenger, sending commands to the Docker Engine and relaying back the results. Whether you’re using the `docker run` command to start a container or `docker ps` to list running containers, you’re interacting with the client. This component can be installed on any system that needs to interact with a Docker Engine, whether it’s your local development machine or a remote server.

Docker Registry

The Docker registry acts as a central repository for Docker images. The most popular registry is Docker Hub, a public repository where you can find and share images. However, you can also set up your own private registries for internal use. Think of it as a vast library of pre-built components, ready to be deployed. When you pull an image using `docker pull`, you’re downloading it from a registry. Conversely, when you push an image using `docker push`, you’re uploading it to a registry. This allows for easy sharing and collaboration, and also allows you to version control your images effectively.

Docker Images and Containers

Docker images are read-only templates containing the application code, runtime, system tools, system libraries, and settings needed to run an application. They act as blueprints for containers. A container, on the other hand, is a runnable instance of an image: an isolated process with its own filesystem, built from the image’s read-only layers plus a thin writable layer on top. Think of the image as the recipe and the container as the actual cake baked from that recipe. You can create multiple containers from a single image, just like you can bake multiple cakes from one recipe.

Docker Daemon and its Functions

The Docker daemon, often referred to as the Docker Engine, is a persistent background process that manages and orchestrates all Docker objects. It listens for Docker API requests from the client and executes the requested operations. It handles image management, container lifecycle, networking, and storage. The daemon is responsible for creating and managing containers, pulling and pushing images to registries, and ensuring the overall health and performance of the Docker environment. It’s the core engine driving the entire Docker ecosystem.
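To see the client/daemon split on your own machine, a small sketch like the following can help. The function name `show_client_and_daemon` is made up for this example, and it assumes Docker is installed with the daemon running.

```shell
show_client_and_daemon() {
  # Prints a "Client" section and, if the daemon is reachable, a "Server" section.
  docker version
  # Asks the daemon for a summary of its state (containers, images, storage driver).
  docker info
}

# Call it in a terminal where Docker is available:
#   show_client_and_daemon
```

If the daemon is stopped, `docker version` still prints the client details but reports that it cannot connect to the server, which is a quick way to tell the two components apart.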

Diagram of Docker Architecture

Imagine a diagram with three main boxes: “Docker Client,” “Docker Daemon (Engine),” and “Docker Registry.” Arrows indicate the flow of information.

1. An arrow goes from the “Docker Client” to the “Docker Daemon.” This represents the client sending commands (like `docker run`, `docker build`, `docker ps`) to the daemon.

2. Another arrow goes from the “Docker Daemon” to the “Docker Registry.” This represents the daemon communicating with the registry to pull or push images.

3. A bidirectional arrow connects the “Docker Daemon” and the “Docker Client.” This shows the continuous communication between the client and daemon, where the daemon sends back the results of the commands to the client.

4. Within the “Docker Daemon” box, show smaller boxes representing “Image” and “Container.” The “Container” is the runtime instance of the “Image”: the image’s read-only layers with a thin writable layer added on top. The daemon manages the creation, deletion, and execution of these containers.

The diagram illustrates the client initiating actions, the daemon executing those actions, and the registry providing and receiving image data. The interaction is primarily client-daemon-registry, with the daemon managing the containers and images locally. The entire system works in a coordinated manner, allowing developers to easily build, ship, and run applications in consistent environments.

Working with Docker Images

Docker images are the building blocks of Docker containers. Think of them as blueprints – they contain all the necessary instructions and files to create a running container. Understanding how to build, manage, and share these images is crucial for efficient Docker workflow. This section will walk you through the process, highlighting key commands and best practices.

Building a Docker image involves creating a Dockerfile, a text file containing a series of instructions that Docker uses to assemble the image layer by layer. Instructions that change the filesystem (such as `RUN`, `COPY`, and `ADD`) each add a new layer, and Docker caches the result of each step. When you change something, only the affected step and the steps after it are rebuilt. This caching is a key advantage of Docker’s layered approach.

Building Docker Images from a Dockerfile

A Dockerfile uses specific instructions to define the image. Let’s explore some common ones:

- `FROM`: Specifies the base image. This could be an official image from Docker Hub (like `ubuntu:latest` or `python:3.9`) or a custom image.

- `COPY`: Copies files and directories from your local system into the image.

- `RUN`: Executes commands during the image build process. This is typically used to install software or configure the environment.

- `CMD`: Specifies the default command to run when the container starts. If a Dockerfile contains several `CMD` instructions, only the last one takes effect.

- `ENTRYPOINT`: Sets the executable that always runs when the container starts. When both are present, `CMD` supplies default arguments to the `ENTRYPOINT`, and any arguments passed to `docker run` replace those defaults. The entrypoint itself can only be replaced with the `--entrypoint` flag. This is useful for creating reusable images that can be run with different arguments.
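The interplay between `ENTRYPOINT` and `CMD` is easiest to see in a toy image. The sketch below writes a two-instruction Dockerfile into a scratch directory; the directory and image name `entrypoint-demo` and the `alpine:3.19` base are assumptions chosen for illustration.

```shell
# Write a tiny Dockerfile into a scratch directory (the name is arbitrary).
mkdir -p entrypoint-demo
cat > entrypoint-demo/Dockerfile <<'EOF'
FROM alpine:3.19
# ENTRYPOINT fixes the executable; CMD supplies its default arguments.
ENTRYPOINT ["echo", "Hello,"]
CMD ["world"]
EOF

# With Docker installed, build it and try the variations:
#   docker build -t entrypoint-demo entrypoint-demo
#   docker run --rm entrypoint-demo                      # prints: Hello, world
#   docker run --rm entrypoint-demo Docker               # prints: Hello, Docker
#   docker run --rm --entrypoint ls entrypoint-demo /    # replaces the entrypoint itself
```

Arguments after the image name replace `CMD` only, so the `echo Hello,` part stays in place unless you pass `--entrypoint`.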

Here’s a simple example of a Dockerfile that creates a Node.js application image:

```dockerfile
FROM node:16
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
CMD ["npm", "start"]
```

This Dockerfile starts with a Node.js 16 base image, sets the working directory, copies the package files, installs dependencies, copies the application code, and finally, starts the application using npm start.
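Assuming that Dockerfile sits next to your `package.json`, building and running it might look like the sketch below. The image and container name `my-node-app`, the two helper function names, and port 3000 are assumptions for illustration; use whatever port your app actually listens on.

```shell
build_and_run() {
  # Build an image from the Dockerfile in the current directory and tag it.
  docker build -t my-node-app .
  # Start a container in the background and publish container port 3000 on the host.
  docker run -d --name my-node-app -p 3000:3000 my-node-app
  # Confirm it is running, then print what the app has logged so far.
  docker ps --filter name=my-node-app
  docker logs my-node-app
}

stop_and_remove() {
  # Stop the container and delete it; the image stays available for the next run.
  docker stop my-node-app
  docker rm my-node-app
}
```

Run `build_and_run` from the project directory, and `stop_and_remove` when you are done. Rebuilding after a code change should be quick, because the dependency layers are served from the build cache as long as the package files are unchanged.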

Pushing and Pulling Docker Images

Once you’ve built an image, you can push it to a Docker registry like Docker Hub to share it with others or store it for later use. Pulling an image retrieves it from the registry.

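To push an image, you’ll need a Docker Hub account, and you need to be logged in (`docker login`). Docker Hub expects the image name to start with your username, so after building your image (let’s say it’s tagged as my-node-app), tag it with your username and then push it:

```shell
docker tag my-node-app your-dockerhub-username/my-node-app
docker push your-dockerhub-username/my-node-app
```

Replace your-dockerhub-username with your actual username. To pull an image, use:

```shell
docker pull your-dockerhub-username/my-node-app
```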

Best Practices for Creating Efficient Docker Images

Creating lean and efficient Docker images is crucial for performance and security. Here are some key best practices:

- Use a minimal base image: Start with a smaller base image to reduce the image size.

- Minimize the number of layers: Avoid unnecessary layers by combining commands where possible.

- Use multi-stage builds: For complex applications, use multi-stage builds to separate build dependencies from the runtime environment, resulting in a smaller final image.

- Use a `.dockerignore` file: This file specifies files and directories to exclude from the image, reducing its size and build time.

- Regularly update base images: Keep your base images up-to-date with security patches.
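Two of these practices, the `.dockerignore` file and multi-stage builds, can be sketched for the Node.js example as follows. This assumes a project directory named `my-node-app`, a `package-lock.json`, an `npm run build` script that writes to `dist/`, and an entry file `dist/index.js`; none of these come from the example above, so adjust them to your project.

```shell
mkdir -p my-node-app

# Keep local artifacts and secrets out of the build context.
cat > my-node-app/.dockerignore <<'EOF'
node_modules
npm-debug.log
.git
.env
EOF

# Stage 1 installs everything and builds; stage 2 keeps only what is needed at runtime.
cat > my-node-app/Dockerfile.multistage <<'EOF'
FROM node:16 AS build
WORKDIR /app
COPY package*.json ./
RUN npm ci
COPY . .
RUN npm run build

FROM node:16-alpine
WORKDIR /app
COPY package*.json ./
RUN npm ci --omit=dev
COPY --from=build /app/dist ./dist
CMD ["node", "dist/index.js"]
EOF

# With Docker installed, from inside my-node-app:
#   docker build -f Dockerfile.multistage -t my-node-app:slim .
#   docker images my-node-app      # compare the size with the single-stage image
```

The final image contains only the second stage, so build tools and development dependencies from the first stage never ship.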

Docker Compose for Multi-Container Applications

Running a single container is cool, but real-world applications rarely exist in isolation. They often involve multiple services working together – a web server, a database, a message queue, and more. This is where Docker Compose shines, simplifying the management of these multi-container applications. It lets you define and run your entire application stack with a single command, saving you headaches and boosting your productivity.

Docker Compose streamlines the process of defining and running multi-container Docker applications. It uses a YAML file (docker-compose.yml) to specify the services, networks, and volumes needed for your application. This gives you consistent, repeatable environments for local development, testing, and single-host deployments. Think of it as the conductor for your application’s containers on one machine; orchestration across many hosts is the job of tools such as Docker Swarm or Kubernetes.

Creating a docker-compose.yml File

The docker-compose.yml file is the heart of your multi-container application. It’s a simple YAML file that describes all the services your application needs. Let’s break down how to create one. First, you’ll need to define each service, specifying its image, ports, volumes, and dependencies. Then, Docker Compose takes care of building, starting, and linking all the containers together.

Syntax and Options in docker-compose.yml

The docker-compose.yml file uses a straightforward key-value pair structure. Each service is defined as a section within the file. Key options include:

- `image`: Specifies the Docker image to use for the service. This could be a publicly available image or a custom image you’ve built.

- `ports`: Maps ports from the container to your host machine. This allows you to access services running inside the containers from your browser or other applications.

- `volumes`: Mounts volumes, allowing persistent storage for your data. This is crucial for databases and other stateful services.

- `depends_on`: Specifies dependencies between services. This controls the order in which services are started; on its own it does not wait for a service to be ready to accept connections.

- `environment`: Sets environment variables for the service. This is handy for configuring things like database credentials.

- `networks`: Defines the network configuration for your application. This allows containers to communicate with each other.

These options provide a flexible way to define the intricate relationships and configurations needed for complex applications. Properly utilizing these options ensures a robust and scalable multi-container setup.

Example docker-compose.yml for a Web Application with a Database

Let’s create a simple web application using a Node.js server and a PostgreSQL database. This example showcases the power and simplicity of Docker Compose.

```yaml
version: "3.9"
services:
  web:
    image: node:16
    ports:
      - "3000:3000"
    volumes:
      - ./app:/usr/src/app
    working_dir: /usr/src/app
    depends_on:
      - db
    command: npm start
  db:
    image: postgres:13
    environment:
      - POSTGRES_USER=myuser
      - POSTGRES_PASSWORD=mypassword
      - POSTGRES_DB=mydb
    ports:
      - "5432:5432"
```

This docker-compose.yml file defines two services: web and db.

The web service uses a Node.js 16 image, maps port 3000, mounts the application code from the ./app directory into /usr/src/app, depends on the db service, and runs the npm start command.

The db service uses a PostgreSQL 13 image, sets environment variables for the database user, password, and name, and maps port 5432. The depends_on directive in the web service makes Docker Compose start the database container before the web server. Note that this only orders container startup: it does not wait until PostgreSQL is ready to accept connections, so the web application should retry its database connection, or the db service should define a health check that web waits on.
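One way to make the web service wait until the database actually accepts connections is a health check combined with the long form of `depends_on`. The sketch below writes a variant of the file above under the assumed name `compose.healthcheck.yml`; the health check timings are illustrative guesses, and `pg_isready` is the readiness tool that ships with PostgreSQL.

```shell
cat > compose.healthcheck.yml <<'EOF'
services:
  web:
    image: node:16
    working_dir: /usr/src/app
    volumes:
      - ./app:/usr/src/app
    ports:
      - "3000:3000"
    command: npm start
    depends_on:
      db:
        condition: service_healthy
  db:
    image: postgres:13
    environment:
      - POSTGRES_USER=myuser
      - POSTGRES_PASSWORD=mypassword
      - POSTGRES_DB=mydb
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U myuser -d mydb"]
      interval: 5s
      timeout: 3s
      retries: 10
EOF

# With Docker Compose v2 installed:
#   docker compose -f compose.healthcheck.yml up -d      # start both services
#   docker compose -f compose.healthcheck.yml ps         # db should be reported as healthy
#   docker compose -f compose.healthcheck.yml logs web   # read the web service's logs
#   docker compose -f compose.healthcheck.yml down       # stop and remove the containers
```

With `condition: service_healthy`, Compose holds back the web container until the db health check passes, rather than merely starting db first.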

Docker Networking

Docker networking is crucial for enabling communication between your containers and the outside world. Understanding the different networking modes and how to configure them is key to building robust and scalable applications. Without proper networking, your containers become isolated islands, unable to interact with each other or access external services. Let’s dive into the different options available.

Docker Networking Modes

Docker offers several networking modes, each with its own strengths and weaknesses. Choosing the right mode depends on your application’s requirements and how you want your containers to interact. The primary modes are bridge, host, none, and overlay.

- Bridge: This is the default networking mode. Containers on the same bridge network can communicate with each other using IP addresses, and on user-defined bridge networks also by container name (the default bridge network does not provide name resolution). They are reachable from the host machine, but not from the outside world without additional configuration such as port mapping. Think of it as a virtual LAN within your Docker host. The bridge network keeps containers separate from the host’s network, enhancing security, at the cost of a little more setup than host mode.

- Host: In host mode, the container shares the host machine’s network stack. The container uses the host’s IP address and ports directly, so no port mapping is needed. There’s no network isolation between the container and the host. This suits simple applications where that isolation isn’t a major concern. It is unsuitable for applications requiring network segmentation or for containers that need to be isolated from each other, and two containers in host mode cannot both listen on the same port.

- None: This mode disables networking for the container entirely. The container gets only a loopback interface and has no network access. This is useful for containers that don’t require connectivity, such as batch jobs that only read and write mounted files. It offers maximum network isolation, at the cost of network access, and is generally reserved for specific, limited use cases.

- Overlay: Overlay networks are designed for multi-host deployments, such as Docker Swarm. They create a virtual network that spans multiple Docker hosts, allowing containers on different hosts to communicate seamlessly. This is particularly beneficial for microservices architectures where containers need to communicate across different machines. Overlay networks require more setup and configuration than the simpler modes, but they provide significant scalability and flexibility for distributed applications. (Kubernetes solves the same problem with its own network plugins rather than Docker overlay networks.)

Configuring Docker Networks

Docker networks are created and managed using the `docker network` command. For example, to create a new bridge network named `my-network`, you would use: `docker network create my-network`. You can then specify the network when creating a container using the `--network` flag: `docker run --network my-network my-image`. Existing networks can be listed with `docker network ls`, inspected with `docker network inspect my-network`, and removed with `docker network rm my-network`. Complex network configurations, particularly for overlay networks, often involve additional options and parameters.

Connecting Containers Within a Network

Containers connected to the same user-defined network can communicate using each other’s names or IP addresses. If two containers, `web` and `db`, are both on the `my-network` network, `web` can access `db` using `db`’s name as the hostname. Docker’s embedded DNS server resolves container names to IP addresses within the network. This simplifies inter-container communication, eliminating the need for manual IP address management. Because container IP addresses can change when containers are recreated, prefer names over hard-coded addresses.
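Name-based discovery can be tried out with two throwaway containers. This is a minimal sketch: the function name `network_demo` and the `nginx:alpine` and `alpine:3.19` images are assumptions picked because they are small, and it needs a running Docker daemon.

```shell
network_demo() {
  # Create a user-defined bridge network.
  docker network create my-network
  # Start a web server named "web" on that network (no ports published to the host).
  docker run -d --name web --network my-network nginx:alpine
  # From a second container on the same network, fetch the page using the name "web".
  docker run --rm --network my-network alpine:3.19 wget -qO- http://web
  # Show the network's details, including the attached containers.
  docker network inspect my-network
  # Clean up the container and the network.
  docker rm -f web
  docker network rm my-network
}
```

Calling `network_demo` should print the nginx welcome page, fetched purely by container name, without any port being exposed on the host.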

Docker Volumes

So, you’ve mastered the art of building and running Docker containers. But what about persistent data? Your containers might vanish, but your data shouldn’t. That’s where Docker volumes come in – they’re the superheroes of persistent storage within the Docker ecosystem. Think of them as separate, managed storage units specifically designed to outlive your containers.

Docker volumes provide a crucial mechanism for persisting data generated or used by your containers. Unlike bind mounts (which directly link a directory on your host machine to the container), volumes are managed by Docker itself, offering advantages in terms of portability, backup, and data management.

Named and Anonymous Volumes: A Comparison

Named volumes offer a more structured approach to managing persistent data within Docker. They are explicitly created and named, allowing for better organization and control. Conversely, anonymous volumes are created implicitly when a container is started with a volume mount that has no name (for example, `-v /app/data`), or when the image declares a `VOLUME`. Docker gives them a random ID instead of a name. While convenient for quick setups, they lack the traceability and management features of named volumes. This can make them less suitable for production environments where you need to track and manage your data effectively. Consider this: you’d much rather know exactly where your application’s crucial data resides than hunt for a mystery volume when something goes wrong.

Creating and Managing Docker Volumes

Creating and managing Docker volumes is surprisingly straightforward. Let’s illustrate with examples. To create a named volume called `my-data`, you’d use the command: `docker volume create my-data`. You can then attach this volume to a container using the `-v` flag during container creation: `docker run -d -v my-data:/app/data my-app`. This mounts the `my-data` volume at the `/app/data` path inside a container started from the `my-app` image. Removing the container won’t delete the `my-data` volume, ensuring data persistence. To list existing volumes, use `docker volume ls`. Removing a volume is done with `docker volume rm my-data` (after ensuring the volume is not in use).
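To watch data outlive a container, the commands above can be strung together as in this sketch. The function name `volume_demo`, the `alpine:3.19` image, and the file name `note.txt` are assumptions for the demonstration.

```shell
volume_demo() {
  # Create the named volume.
  docker volume create my-data
  # The first container writes a file into the volume and is removed on exit (--rm).
  docker run --rm -v my-data:/app/data alpine:3.19 \
    sh -c 'echo "written by the first container" > /app/data/note.txt'
  # A second, brand-new container mounts the same volume and reads the file back.
  docker run --rm -v my-data:/app/data alpine:3.19 cat /app/data/note.txt
  # Show where Docker keeps the volume, then remove it.
  docker volume inspect my-data
  docker volume rm my-data
}
```

The second container prints the text written by the first, even though the first container no longer exists by then.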

Best Practices for Docker Volumes in Production

In production environments, careful volume management is paramount. Using named volumes enhances organization and simplifies backups and restoration. Regular backups of your volumes are essential to safeguard against data loss. Consider using a dedicated volume driver for more advanced features like snapshots, replication, and encryption. Also, ensure that volume permissions are properly configured to prevent security vulnerabilities.
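A common, low-tech way to back up a named volume is to mount it into a temporary container and archive its contents with `tar`. The helpers below are a sketch under that assumption; the function names are made up, and the archive lands in the current directory as `<volume>.tar.gz`.

```shell
backup_volume() {
  # $1 is the volume name; it is mounted read-only so the backup cannot modify it.
  docker run --rm \
    -v "$1":/data:ro \
    -v "$(pwd)":/backup \
    alpine:3.19 tar czf "/backup/$1.tar.gz" -C /data .
}

restore_volume() {
  # Unpacks ./<volume>.tar.gz into the volume; Docker creates the volume if it is missing.
  docker run --rm \
    -v "$1":/data \
    -v "$(pwd)":/backup:ro \
    alpine:3.19 tar xzf "/backup/$1.tar.gz" -C /data
}

# Usage on a machine with Docker running:
#   backup_volume my-data
#   restore_volume my-data
```

For databases, stop the container or use the database’s own dump tool first, since copying files from under a running database can produce an inconsistent backup.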

Docker Volume Management Strategies

| Storage Type | Description | Use Cases | Advantages | Disadvantages |
|---|---|---|---|---|
| Named Volumes | Explicitly created and named volumes managed by Docker. | Persistent storage for applications, databases, etc. Ideal for production environments. | Easy to manage, track, and back up. Improved organization and portability. | Requires explicit creation; slightly more complex setup than anonymous volumes. |
| Anonymous Volumes | Created implicitly when a container starts with an unnamed volume mount. | Temporary or quick prototyping. Not recommended for production. | Simple and convenient for initial testing. | Difficult to manage, track, and back up. Data loss is more likely upon container removal. Less portable. |
| Bind Mounts | Directly mount a directory from your host machine into the container. | Development and testing; situations where direct host access is needed. | Direct access to the host filesystem; easy setup. | Not portable; security risks if not carefully managed; data persistence relies on the host system. |

Docker Security Best Practices

Docker’s popularity brings a need for robust security measures. Ignoring container security can expose your applications and data to significant risks, leading to breaches and hefty consequences. Let’s dive into the essential practices for securing your Docker environment.

Securing your Docker deployments isn’t just about adding a few extra layers of protection; it’s about building a holistic security posture from the ground up. This involves careful consideration at every stage, from image creation to runtime management.

Common Docker Security Risks

Ignoring security best practices can leave your Dockerized applications vulnerable. Common threats include compromised images containing malicious code, insecure network configurations exposing containers to unauthorized access, and insufficient access controls allowing unauthorized users to manipulate containers or their data. These vulnerabilities can lead to data breaches, application downtime, and reputational damage.

Securing Docker Images

Building secure Docker images is paramount. This starts with using minimal base images, containing only the necessary components. Regularly updating base images and dependencies patches vulnerabilities. Leveraging multi-stage builds allows you to separate the build environment from the runtime environment, reducing the attack surface. Finally, scanning images for vulnerabilities using tools like Clair or Trivy before deployment is crucial for identifying and addressing potential weaknesses.

Securing Docker Containers

Beyond image security, runtime container security is equally critical. Running containers as non-root users limits the impact of potential compromises. Restricting what a container can do, for example by dropping unneeded Linux capabilities, mounting the root filesystem read-only, and limiting who can reach the Docker daemon, reduces the damage a compromised container can cause. Regularly monitoring container activity helps detect anomalies and potential threats. Network security is vital; containers should only be exposed to necessary ports and networks, and using firewalls and network segmentation adds an extra layer of protection.
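As an illustration of running as a non-root user, the sketch below writes a variant of the earlier Node.js Dockerfile that switches to the unprivileged `node` user shipped with the official Node.js images. The directory `my-node-app`, the file name `Dockerfile.nonroot`, and the `nonroot` tag are assumptions.

```shell
mkdir -p my-node-app
cat > my-node-app/Dockerfile.nonroot <<'EOF'
FROM node:16-alpine
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
# From here on, including the running app, everything uses the unprivileged "node" user.
USER node
CMD ["npm", "start"]
EOF

# With Docker installed, from inside my-node-app:
#   docker build -f Dockerfile.nonroot -t my-node-app:nonroot .
#   docker run --rm my-node-app:nonroot whoami     # should print: node
#   docker run -d --cap-drop ALL --security-opt no-new-privileges my-node-app:nonroot
```

The `--cap-drop ALL` and `--security-opt no-new-privileges` flags go a step further at runtime by removing Linux capabilities and blocking privilege escalation; add individual capabilities back only if the app turns out to need them.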

Best Practices for Secure Docker Management

Effective Docker management is key to maintaining a secure environment. The following points highlight crucial best practices:

- Use a secure registry: Store your Docker images in private repositories on Docker Hub (with appropriate access controls) or in a self-hosted registry like Harbor to prevent unauthorized access.

- Implement image signing and verification: Verify the authenticity and integrity of your images before deploying them, preventing the use of tampered or malicious images.

- Employ least privilege: Grant containers only the necessary permissions and resources to function correctly, minimizing the impact of potential breaches.

- Regularly update and patch: Keep your Docker engine, images, and related components updated with the latest security patches to address known vulnerabilities.

- Use automated security scanning: Integrate automated security scanning into your CI/CD pipeline to detect vulnerabilities early in the development lifecycle.

- Monitor container activity: Regularly monitor container logs and resource usage to identify unusual activity that could indicate a security breach.

- Implement robust logging and monitoring: Centralized logging and monitoring provide a comprehensive view of container activity, aiding in the detection of suspicious behavior and security incidents.
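Automated scanning can be as simple as one command in a CI job. The sketch below assumes the Trivy CLI is installed on the build machine; the function name `scan_image` and the choice of severity levels are illustrative, not a recommendation from any particular pipeline.

```shell
scan_image() {
  # $1 is the image to scan, for example my-node-app.
  # --severity limits the report to the listed levels.
  # --exit-code 1 makes the command fail when matching findings exist,
  # which in turn fails the CI job that runs it.
  trivy image --severity HIGH,CRITICAL --exit-code 1 "$1"
}

# Usage in a pipeline step, after the image has been built:
#   scan_image my-node-app
```

Failing the build on serious findings keeps vulnerable images from reaching the registry in the first place.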

Importance of Regular Security Audits

Regular security audits are essential for identifying and mitigating potential vulnerabilities in your Docker deployments. These audits should cover all aspects of your Docker environment, from image creation and deployment to runtime management and network security. By conducting regular audits, you can proactively identify and address security weaknesses before they can be exploited. These audits should involve vulnerability scanning, penetration testing, and a review of security configurations and policies. Consider engaging security experts to perform these audits for a more comprehensive and objective assessment.

Conclusion

Mastering Docker isn’t just about deploying apps; it’s about transforming your development process. From streamlined workflows to enhanced collaboration, Docker unlocks a new level of efficiency and scalability. By understanding its core components and best practices, you’ve equipped yourself with a powerful tool to build, ship, and run applications with ease. So go forth, containerize, and conquer!