Introduction to Docker Containers and Their Uses in Development

Dive into the world of streamlined software development! Forget wrestling with inconsistent environments – Docker containers offer a revolutionary approach to building, shipping, and running applications. This guide unpacks the magic behind containerization, showing you how Docker simplifies development workflows, boosts efficiency, and ensures your apps run smoothly across different platforms. We’ll explore Docker’s architecture, essential commands, and best practices, transforming you from Docker novice to confident container wrangler.

We’ll cover everything from basic concepts like images and containers to advanced techniques like Docker Compose for managing multi-container applications. Learn how Docker enhances your CI/CD pipeline, improves collaboration, and helps you deploy applications with ease. Get ready to revolutionize your development process!

What are Docker Containers?

Source: edureka.co

Imagine a world where deploying applications is as simple as shipping a package. No more wrestling with dependencies, conflicting libraries, or environment inconsistencies. That’s the promise of Docker containers, a revolutionary approach to software packaging and deployment that’s transforming the development landscape. They’re lightweight, portable, and self-sufficient units that encapsulate everything an application needs to run – code, libraries, runtime, system tools, and settings – all bundled together neatly.

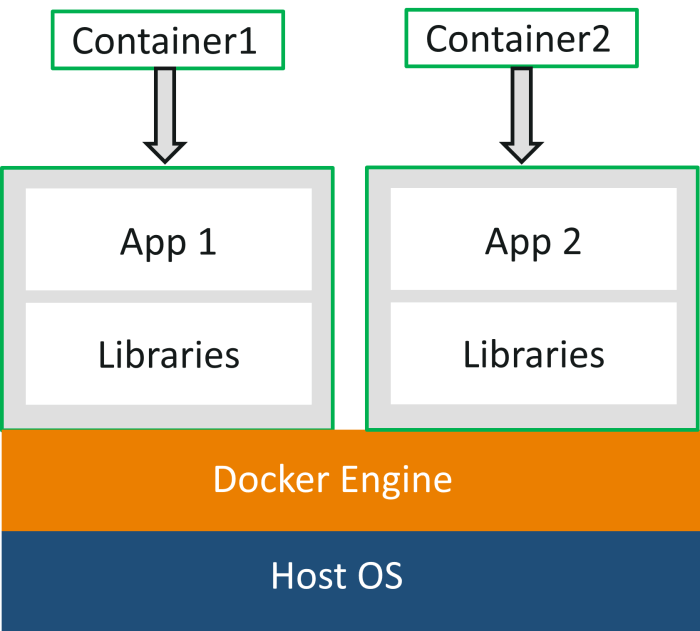

Docker containers leverage the Linux kernel’s features like namespaces and control groups to create isolated environments. This means multiple containers can run simultaneously on a single host operating system without interfering with each other, making efficient use of resources. Think of it as having multiple virtual apartments within a single building, each with its own utilities and amenities, yet sharing the same foundational structure.

Containerization Fundamentals

Containerization is the process of packaging an application and its dependencies into a standardized unit, the container. This unit can then be easily moved and run across different environments, from a developer’s laptop to a cloud server, ensuring consistency and predictability. The key here is isolation: each container operates in its own sandbox, preventing conflicts and ensuring stability. This isolation is achieved through various Linux kernel features, which essentially create virtualized versions of system resources for each container.
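
To make the isolation idea concrete, here is a minimal sketch of what a process sees from inside a container. It is an illustration under assumptions rather than a definitive recipe: `show_isolation` and the `DOCKER` override variable are hypothetical names introduced here, and `alpine` is simply a small public image picked for the demo.

```bash
# Use the real docker CLI by default; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-docker}"

# show_isolation: run short-lived containers that reveal their namespaces
# and resource limits. Assumes the alpine image can be pulled.
show_isolation() {
  # PID namespace: the container lists only its own processes.
  "$DOCKER" run --rm alpine ps
  # UTS namespace: the container gets its own hostname.
  "$DOCKER" run --rm --hostname sandbox alpine hostname
  # Control groups: cap the container at 256 MB of RAM and half a CPU core.
  "$DOCKER" run --rm --memory 256m --cpus 0.5 alpine echo "limited"
}
```

Calling `show_isolation` on a machine with Docker running should show a very short process list and the hostname `sandbox`, even though the host has many more processes and a different name.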

Docker Containers vs. Virtual Machines

While both Docker containers and virtual machines (VMs) provide a way to isolate applications, they differ significantly in their approach and resource consumption. A VM virtualizes the hardware and runs a complete guest operating system on top of a hypervisor, resulting in a heavier footprint and slower startup. Docker containers, on the other hand, share the host operating system’s kernel, making them significantly lighter and faster to start. This difference translates to better resource utilization and faster deployment times. Imagine trying to move a whole house (VM) versus moving a single piece of furniture (Docker container).

Resource Utilization and Performance Comparison

The key advantage of Docker over VMs lies in resource efficiency. Because containers share the host OS kernel, they require significantly less memory and CPU power. This translates to running more containers on the same hardware, reduced infrastructure costs, and faster application deployment. Start-up times for containers are also dramatically faster than for VMs, which need to boot a full operating system. This speed advantage is particularly crucial in microservices architectures, where applications are broken down into many small, independent services.

| Feature | Docker | Virtual Machine | Comparison |
|---|---|---|---|
| Operating System | Shares host OS kernel | Has its own guest OS | Docker is significantly lighter |
| Resource Utilization | Lightweight, efficient | Resource-intensive | Docker consumes fewer resources |
| Performance | Faster startup, higher performance | Slower startup, lower performance | Docker offers superior performance |
| Deployment Speed | Very fast | Relatively slow | Docker enables rapid deployment |

Docker Architecture and Components

So, you’ve got a handle on what Docker containers are—basically, lightweight, portable packages of software. But how does this magic actually work? Let’s dive into the architecture that makes it all tick. Think of it like a well-oiled machine with several key parts working together seamlessly.

Docker’s architecture is surprisingly elegant, built around several core components that interact to create and manage containers. Understanding these components is key to unlocking the full power of Docker.

Docker Engine

The Docker Engine is the heart of the Docker system. It’s the runtime environment that manages the entire lifecycle of containers: creating, starting, stopping, and deleting them. Think of it as the conductor of an orchestra, ensuring all the different parts of the system work in harmony. The Engine uses the Docker daemon (discussed below) to perform these actions. It’s a client-server application with a daemon process that manages containers, images, networks, and volumes. The Docker client is how you interact with the daemon, sending commands and receiving feedback.

Docker Daemon

The Docker daemon, often referred to as `dockerd`, is the background process that manages all Docker objects. It receives commands from the Docker client and carries them out. It’s the tireless worker behind the scenes, making sure your containers are running smoothly. It listens for Docker API requests and manages the Docker objects such as images, containers, networks, and volumes. Without the daemon, you wouldn’t be able to create or manage anything within Docker.

Docker Images

Docker images are read-only templates that contain everything needed to create a container. They’re like blueprints for your containers, specifying the operating system, libraries, dependencies, and application code. These images are built from Dockerfiles, which are simple text files containing instructions on how to build the image. Think of it like a recipe: you follow the steps, and the result is a ready-to-use image.

Dockerfiles

Dockerfiles are the cornerstone of image creation. They are text files containing a set of instructions that Docker uses to assemble an image. These instructions might include installing packages, copying files, setting environment variables, and defining the command to run when a container is started. They provide a reproducible and automated way to build consistent images.

Building a Simple Docker Image

Let’s build a simple “Hello World” image. First, create a file named `Dockerfile` (no extension) with the following content:

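```dockerfile
# Start from the official Ubuntu base image.
FROM ubuntu:latest

# Refresh the package index and install curl in a single layer.
RUN apt-get update && apt-get install -y curl

# Default command: quietly fetch example.com when the container starts.
CMD ["curl", "-s", "http://example.com"]
```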

This Dockerfile uses the latest Ubuntu image as a base, installs `curl`, and then runs `curl` to fetch the example.com webpage. To build the image, navigate to the directory containing the `Dockerfile` and run:

```bash
docker build -t my-hello-world .
```

This command builds the image and tags it as `my-hello-world`. The `.` specifies the current directory.

Docker Containers

Containers are running instances of Docker images. They are created from images and are ephemeral—they can be started, stopped, and deleted easily. Think of them as the actual application running, based on the blueprint provided by the image. Each container has its own isolated filesystem, network, and process space.

Docker Registries

Docker registries are central repositories for storing and distributing Docker images. The most popular registry is Docker Hub, which hosts a vast collection of public and private images. Registries allow you to share your images with others or pull images created by others, making collaboration and reuse much easier. They are essentially online warehouses for your Docker images.

Docker Architecture Diagram

Imagine a diagram. At the top, you have the Docker Client, which is where you interact with Docker via commands. This client communicates with the Docker Daemon (`dockerd`), a background process running on the host machine. The daemon manages all Docker objects. Below the daemon, you have Docker Images, the read-only templates. These images are used to create Docker Containers, which are the running instances of the application. Finally, you have Docker Registries (like Docker Hub), where images are stored and shared. Arrows would indicate the flow of communication and data between these components. For example, an arrow would point from the Docker Client to the Docker Daemon, indicating that commands are sent from the client to the daemon. Another arrow would point from the Docker Daemon to a Docker Container, indicating that the daemon manages the container. Another arrow would point from a Docker Registry to the Docker Daemon, showing that images are pulled from the registry to the daemon.

Docker Commands and Workflow

Navigating the world of Docker can feel like learning a new language, but once you grasp the core commands, building and deploying applications becomes significantly smoother. This section dives into essential Docker commands, categorized for clarity, and outlines a typical Docker workflow. Think of these commands as your toolbox – mastering them unlocks the full potential of Docker.

Essential Docker Commands

Understanding Docker commands is crucial for efficient container management. These commands are grouped by function for easier comprehension. Remember, you’ll need to have Docker installed and running on your system before executing these commands.

- Image Management: These commands deal with Docker images, the blueprints for your containers.

  - `docker pull`: Downloads a Docker image from a registry (like Docker Hub). For example, `docker pull ubuntu:latest` downloads the latest version of the Ubuntu image. This is your starting point for creating containers.
  - `docker images`: Lists all the images currently stored on your system. This helps you manage your image library and identify which images are taking up space.
  - `docker rmi <image_id>`: Removes a Docker image. Replace `<image_id>` with the ID or name of the image (found using `docker images`). Be cautious, as this action is irreversible.
  - `docker build -t <name>:<tag> .`: Builds a Docker image from a Dockerfile located in the current directory (the `.`). The `-t` flag tags the image with a name and version. This is how you create your own custom images.

- Container Management: These commands control the lifecycle of your containers.

  - `docker run`: Creates and starts a new container from a specified image. For instance, `docker run -d -p 8080:80 nginx` runs an Nginx web server in detached mode (`-d`) and maps port 8080 on the host to port 80 in the container.
  - `docker ps`: Lists all running containers. This provides a quick overview of your active containers and their status.
  - `docker ps -a`: Lists all containers, both running and stopped. Useful for managing containers that have been stopped.
  - `docker stop <container_id>`: Gracefully stops a running container. Replace `<container_id>` with the container’s ID or name (found using `docker ps` or `docker ps -a`).
  - `docker start <container_id>`: Starts a stopped container.
  - `docker rm <container_id>`: Removes a stopped container. Remember to stop the container before removing it.

- Network Management: These commands manage Docker networks, allowing containers to communicate.

  - `docker network create`: Creates a new Docker network. This allows you to isolate containers or connect them together in a defined network.
  - `docker network ls`: Lists all existing Docker networks.
  - `docker network connect`: Connects a container to a specific network.
  - `docker network disconnect`: Disconnects a container from a network.

Pulling, Running, Stopping, and Removing Containers: A Practical Example

Let’s walk through a simple example using the Nginx web server.

1. Pull the image: `docker pull nginx:latest`

2. Run the container: `docker run -d -p 8080:80 nginx` (This runs Nginx in detached mode and maps port 8080 on your host machine to port 80 in the container.)

3. Check if it’s running: `docker ps` (You should see the Nginx container listed.)

4. Stop the container: `docker stop <container_id>` (Replace `<container_id>` with the ID or name shown by `docker ps`.)

5. Remove the container: `docker rm <container_id>`

Managing Docker Networks and Connecting Containers

Docker networks provide a way to control how your containers communicate. A default bridge network is automatically created, but you can create custom networks for better isolation and control. Connecting containers to a custom network allows them to communicate without exposing ports to the host machine, improving security. For example, you could have a database container and a web application container connected to a private network, allowing them to interact without being directly accessible from the outside.
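
The database-plus-web scenario above can be sketched roughly as follows. This is an illustrative outline, not the only way to wire things up: the function name `start_private_stack`, the `DOCKER` override, the network name, the container names, and the placeholder password are all assumptions made for the example.

```bash
# Use the real docker CLI by default; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-docker}"

# start_private_stack [NETWORK]: put a database and a web server on one
# user-defined bridge network (default name: app-net).
start_private_stack() {
  local network="${1:-app-net}"
  "$DOCKER" network create "$network"
  # The database publishes no ports, so only containers attached to the
  # same network can reach it (by its container name, "db").
  "$DOCKER" run -d --name db --network "$network" \
    -e MYSQL_ROOT_PASSWORD=change-me mysql:8
  # Only the web server is published to the host, on port 8080.
  "$DOCKER" run -d --name web --network "$network" -p 8080:80 nginx
}
```

After `start_private_stack`, the web container can open connections to `db:3306`, while nothing on the host or outside it can connect to the database directly.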

Docker Workflow for Application Development and Deployment

The typical Docker workflow streamlines the development, building, and deployment process.

A well-defined workflow using Docker typically involves these steps: Develop -> Build -> Test -> Push -> Deploy.

Imagine a scenario where you’re building a simple web application.

1. Development: Write your application code and create a Dockerfile that specifies the application’s dependencies and runtime environment.

2. Build: Use `docker build` to create a Docker image containing your application.

3. Testing: Run the container locally using `docker run` to thoroughly test the application.

4. Pushing to a registry (e.g., Docker Hub): Use `docker push` to upload your image to a registry, making it accessible for deployment.

5. Deployment: Deploy the image to a server using tools like Docker Compose or Kubernetes.
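
As a rough sketch, the build, test, and push steps can be chained so that a failing test stops the image from being published. The helper name `build_test_push`, the `DOCKER` override, and the example image reference are hypothetical; the sketch also assumes the image’s default command exits with status 0 when the application is healthy.

```bash
# Use the real docker CLI by default; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-docker}"

# build_test_push IMAGE: build from ./Dockerfile, smoke-test, then push.
# IMAGE is a full reference, e.g. registry.example.com/team/app:1.0
# (a made-up name used only for illustration).
build_test_push() {
  local image="$1"
  "$DOCKER" build -t "$image" . || return 1
  # Run the freshly built image; a non-zero exit aborts before the push.
  "$DOCKER" run --rm "$image" || return 1
  "$DOCKER" push "$image"
}
```

Deployment itself (step 5) is left to whatever tool runs your containers; the point here is only that the image that gets pushed is the one that was just tested.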

Docker Compose for Multi-Container Applications

Managing multiple containers can feel like herding cats – chaotic and prone to errors. That’s where Docker Compose steps in, offering a streamlined way to define and run multi-container applications. Think of it as the ultimate container orchestra conductor, ensuring all your services play in harmony.

Docker Compose simplifies the complexities of managing interconnected containers by using a single YAML file (docker-compose.yml) to define all your services, their dependencies, and configurations. This eliminates the need for juggling multiple commands and configurations, making your development workflow significantly more efficient and less error-prone. It’s a game-changer for microservices architectures and complex applications.

Defining Services in a Docker Compose File

A Docker Compose file uses a straightforward YAML structure to specify your application’s services. Each service section defines a container, its image, ports, volumes, environment variables, and dependencies. Let’s imagine a simple web application with a web server and a database:

```yaml
version: "3.9"

services:
  web:
    image: nginx:latest
    ports:
      - "80:80"
    volumes:
      - ./html:/usr/share/nginx/html
  db:
    image: mysql:8
    environment:
      MYSQL_ROOT_PASSWORD: mysecretpassword
    ports:
      - "3306:3306"
    volumes:
      - db_data:/var/lib/mysql

volumes:
  db_data:
```

This example defines two services: `web` (using the latest Nginx image) and `db` (using MySQL 8). The `ports` section maps host ports to container ports, allowing access from your host machine. Under `volumes`, the `web` service bind-mounts `./html` into Nginx’s document root, while the `db_data` named volume gives the database persistent storage that survives container restarts and removal. Environment variables, like `MYSQL_ROOT_PASSWORD`, are set within the service definition; a plaintext password like this one is acceptable for a local demo, but it is not secure and should not be committed for real deployments.

Starting, Stopping, and Scaling Applications with Docker Compose

Once your docker-compose.yml file is ready, managing your application becomes incredibly simple. You can start all services with a single command: `docker-compose up -d`. The `-d` flag runs the containers in detached mode (in the background). To stop them, use `docker-compose down`.

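Scaling services is equally straightforward. Let’s say you need to scale your web service to three instances: `docker-compose up -d --scale web=3` (the older `docker-compose scale web=3` form was deprecated in Compose v1 in favor of the `--scale` flag). Docker Compose will create and manage the additional containers. Note that a service with a fixed host port mapping such as `"80:80"` cannot run more than one replica on a single host, because only one container can bind the host port; to scale it, drop the fixed host port and put a load balancer or reverse proxy in front.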

Defining Environment Variables and Volumes

Environment variables provide a flexible way to configure your application without modifying the container images. As shown in the previous example, environment variables are defined within the `environment` section of each service. This allows you to customize settings like database passwords, API keys, or other sensitive information without hardcoding them into your images. This improves security and portability.

Volumes provide persistent storage for your data, independent of the container’s lifecycle. This is crucial for databases and other applications that require data persistence. The example defines a named volume (`db_data`) that Docker manages on the host and mounts into the database container at `/var/lib/mysql`, so data is not lost when the container is restarted or recreated. Because the volume exists independently of any one container, you can also back it up and restore it.
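
One common way to keep a password out of the Compose file itself is to let Compose substitute it from a `.env` file in the project directory. The sketch below writes both files so you can see how they fit together; `write_compose_env` and the placeholder value `change-me` are assumptions for illustration, and the service layout simply mirrors the `db` service from the earlier example.

```bash
# write_compose_env DIR: write a Compose file that reads the database
# password from a .env file instead of hardcoding it.
write_compose_env() {
  local dir="$1"
  mkdir -p "$dir"
  # Compose reads .env from the project directory automatically.
  printf 'MYSQL_ROOT_PASSWORD=%s\n' "change-me" > "$dir/.env"
  # Quoted heredoc delimiter: ${...} is left for Compose to expand, not the shell.
  cat > "$dir/docker-compose.yml" <<'EOF'
services:
  db:
    image: mysql:8
    environment:
      # ":?" makes Compose stop with this message if the variable is unset.
      MYSQL_ROOT_PASSWORD: "${MYSQL_ROOT_PASSWORD:?set it in .env}"
    volumes:
      - db_data:/var/lib/mysql

volumes:
  db_data:
EOF
}
```

After `write_compose_env ./demo`, running `docker-compose up -d` inside `./demo` starts the database with the value from `.env`. Keeping `.env` out of version control is what actually keeps the password out of your repository.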

Docker in Software Development

Docker’s impact on the software development lifecycle is nothing short of revolutionary. It’s become a cornerstone for building, shipping, and running applications, streamlining processes that were once cumbersome and error-prone. This section dives into how Docker improves efficiency and consistency throughout the entire development journey.

Docker fundamentally changes how developers approach building and deploying applications. By providing consistent environments across development, testing, and production, it eliminates the infamous “works on my machine” problem, a source of countless headaches for developers. This consistency translates to faster development cycles, fewer bugs, and a smoother transition to production.

Common Docker Use Cases in Software Development

Docker offers a wide array of benefits across various stages of the software development lifecycle. It’s not just a tool for deployment; it significantly impacts development, testing, and collaboration.

From streamlining local development environments to simplifying continuous integration and continuous delivery (CI/CD) pipelines, Docker enhances efficiency and reduces friction. Let’s explore some key use cases:

- Local Development Environments: Docker allows developers to create isolated and reproducible environments on their local machines, ensuring everyone works with the same dependencies and configurations, regardless of their operating system.

- Microservices Architecture: Docker excels in supporting microservices, allowing each service to run in its own container, improving isolation, scalability, and maintainability.

- Testing and Quality Assurance: Consistent environments across testing stages, facilitated by Docker, ensure that bugs are detected early and consistently, leading to higher quality software.

- Continuous Integration and Continuous Delivery (CI/CD): Docker containers are easily integrated into CI/CD pipelines, enabling automated builds, testing, and deployments across various environments.

- Deployment and Scaling: Docker simplifies deployment by packaging applications and their dependencies into portable containers, making it easier to deploy to various platforms, including cloud providers like AWS, Azure, and Google Cloud.

Improved Consistency and Reproducibility of Development Environments

The “works on my machine” syndrome is a common developer frustration. Different operating systems, libraries, and configurations can lead to inconsistencies between development, testing, and production environments. Docker solves this by encapsulating the application and its dependencies within a container, ensuring a consistent environment regardless of the underlying infrastructure. This reproducibility extends to all team members and across different stages of the development lifecycle.

For example, a developer using a Mac might encounter issues when deploying code to a Linux server. With Docker, the application runs within the same containerized environment on both the Mac and the server, eliminating environment-related discrepancies.

Docker’s Role in Continuous Integration and Continuous Delivery (CI/CD) Pipelines

Docker significantly enhances CI/CD pipelines by automating the build, test, and deployment processes. Containers provide a consistent and isolated environment for each stage of the pipeline, minimizing the risk of conflicts and ensuring reliable builds. The portability of Docker containers allows for seamless deployments across different environments—from development to staging to production—without requiring modifications to the application code.

Imagine a CI/CD pipeline where a new code commit triggers a Docker build. The built image is then tested automatically in a containerized environment, and if successful, deployed to a staging server (also using Docker). Finally, after successful staging tests, the same image is deployed to production. This entire process is automated and consistent thanks to Docker.
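
A small sketch of the “same image all the way to production” idea: tag the image with the commit hash once, then promote that exact image by re-tagging it for each stage instead of rebuilding. The helper names `image_ref` and `promote`, the `DOCKER` override, and the registry name are hypothetical, and the stage tags (`staging`, `production`) are just one possible convention.

```bash
# Use the real docker CLI by default; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-docker}"

# image_ref REPO COMMIT: derive a tag from the first 7 characters of a
# commit hash, e.g. registry.example.com/app:3f2a1bc
image_ref() {
  printf '%s:%s\n' "$1" "$(printf '%s' "$2" | cut -c1-7)"
}

# promote REPO COMMIT STAGE: re-tag the already-tested image for a stage
# and push it, so every stage runs identical bits.
promote() {
  local ref
  ref="$(image_ref "$1" "$2")"
  "$DOCKER" pull "$ref" &&
    "$DOCKER" tag "$ref" "$1:$3" &&
    "$DOCKER" push "$1:$3"
}
```

For example, `promote registry.example.com/app 3f2a1bc9d0e4 staging` publishes the tested build under the `staging` tag; running it again with `production` ships the same image after staging tests pass.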


Simplified Deployment and Scaling of Applications

Docker simplifies application deployment by packaging the application and its dependencies into a single, portable unit—the container. This eliminates the need for complex configuration management and reduces the risk of environment-related issues. Scaling applications is also streamlined. Instead of manually configuring and deploying multiple instances, you can simply spin up multiple containers of the same image, effectively scaling your application horizontally.

Consider a web application deployed using Docker. To scale the application to handle increased traffic, you can simply create more containers running the same Docker image. This horizontal scaling is efficient and readily adaptable to fluctuating demands.
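
In its simplest form, scaling out by hand might look like the sketch below, which starts several containers from one image on consecutive host ports. The function name `scale_out`, the `DOCKER` override, and the `web-N` naming scheme are assumptions for the example; the sketch also assumes the application listens on port 80 inside the container, and a real setup would add a load balancer in front.

```bash
# Use the real docker CLI by default; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-docker}"

# scale_out IMAGE COUNT BASE_PORT: start COUNT containers from one image,
# publishing container port 80 on consecutive host ports.
scale_out() {
  local image="$1" count="$2" base_port="$3" i
  i=0
  while [ "$i" -lt "$count" ]; do
    "$DOCKER" run -d --name "web-$i" -p "$((base_port + i)):80" "$image"
    i=$((i + 1))
  done
}
```

`scale_out nginx 3 8080` would start `web-0`, `web-1`, and `web-2` on host ports 8080 to 8082. Orchestrators automate exactly this loop, plus health checks and traffic distribution.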

Docker Security Best Practices

Docker, while incredibly useful for streamlining development, introduces a new layer of security considerations. Ignoring these can expose your applications and infrastructure to significant vulnerabilities. Understanding and implementing robust security practices is crucial for leveraging Docker’s benefits safely and effectively.

Securing your Docker environment involves a multi-faceted approach, encompassing image security, container runtime protection, and access control. A well-secured Docker setup isn’t just about protecting your applications; it’s about protecting your entire infrastructure and data.

Potential Security Risks in Docker

Using Docker containers introduces several potential security risks. These risks stem from both the nature of containerization and potential misconfigurations. Understanding these risks is the first step towards mitigating them.

For example, a compromised container could potentially grant access to the host machine’s resources or other containers on the same host. Similarly, vulnerabilities within the base image used for a container could be exploited. Furthermore, insufficient access control can lead to unauthorized access and manipulation of containers and their data.

Securing Docker Images

Building secure Docker images is paramount. This starts with selecting a minimal base image, containing only the necessary dependencies. Avoid using images with known vulnerabilities, and always update your base images to the latest versions with security patches.

Employing multi-stage builds allows you to separate the build environment from the runtime environment, minimizing the attack surface of your final image. Furthermore, regularly scanning your images for vulnerabilities using tools like Clair or Trivy is essential. These tools analyze your image’s contents, identifying known security flaws and providing remediation guidance.
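
To show what a multi-stage build looks like in practice, the sketch below writes a two-stage Dockerfile into a directory. It assumes a hypothetical Go program in the build context; the helper name `write_multistage_dockerfile`, the image tags, and the binary path are illustrative choices, not recommendations.

```bash
# write_multistage_dockerfile DIR: write a two-stage Dockerfile into DIR.
write_multistage_dockerfile() {
  mkdir -p "$1"
  cat > "$1/Dockerfile" <<'EOF'
# Stage 1: full toolchain, used only to compile.
FROM golang:1.22 AS build
WORKDIR /src
COPY . .
RUN CGO_ENABLED=0 go build -o /out/app .

# Stage 2: small runtime image that receives only the binary.
FROM alpine:3.20
COPY --from=build /out/app /usr/local/bin/app
# Run as an unprivileged user rather than root.
USER nobody
ENTRYPOINT ["/usr/local/bin/app"]
EOF
}
```

The compiler, source code, and build cache stay behind in the first stage; only the compiled binary is copied into the final image, which is what shrinks the attack surface.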

Container Runtime Security

Beyond image security, securing the container runtime environment is critical. Restricting container access to only the necessary resources on the host system using capabilities and resource limits is a vital step. This limits the potential damage a compromised container could inflict.

Linux security modules such as AppArmor and SELinux, which Docker integrates with, provide additional layers of protection. They enforce mandatory access control policies that limit the actions containers can perform. Regularly auditing your Docker daemon configuration and access logs is also essential for identifying and addressing potential security breaches.
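
As a starting point, several of these runtime restrictions can be applied directly on the `docker run` command line. The wrapper below is a sketch under assumptions: `run_hardened` and the `DOCKER` override are hypothetical names, and the specific limits (256 MB, 100 processes) are arbitrary example values that you would tune per workload.

```bash
# Use the real docker CLI by default; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-docker}"

# run_hardened IMAGE [ARGS...]: start a container with a reduced blast radius.
run_hardened() {
  # --cap-drop ALL            drop every Linux capability
  # --security-opt ...        block privilege escalation via setuid binaries
  # --read-only / --tmpfs     read-only root filesystem, writable /tmp only
  # --memory / --pids-limit   bound memory use and process count
  "$DOCKER" run --rm \
    --cap-drop ALL \
    --security-opt no-new-privileges \
    --read-only --tmpfs /tmp \
    --memory 256m --pids-limit 100 \
    "$@"
}
```

For instance, `run_hardened alpine id` runs a throwaway container with these restrictions. Some images need specific capabilities or writable paths, so expect to add back only what a given application actually requires.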

Access Control and User Permissions

Proper access control is crucial for securing your Docker environment. Docker Engine has no user accounts of its own: anyone who can reach the Docker daemon (for example, members of the `docker` group on Linux) effectively has root-level control of the host, so grant that access sparingly. Avoid running containers as root whenever possible; instead, use dedicated non-root users with limited privileges.

Role-based access control (RBAC) can further enhance security by granting different users and groups varying levels of access to Docker resources. Docker Engine does not provide fine-grained RBAC out of the box, so this usually comes from authorization plugins or from the platform that sits on top of Docker, such as an orchestrator or a registry with its own access controls. This prevents unauthorized access and modification of containers and images.

Image Vulnerability Scanning

Regularly scanning your Docker images for vulnerabilities is a critical aspect of maintaining a secure environment. Several tools, including Clair and Trivy, automate this process, analyzing your images for known vulnerabilities based on publicly available databases.

These scanners identify potential security weaknesses in your base images and any installed packages, providing detailed reports and remediation advice. Integrating these scans into your CI/CD pipeline ensures that security checks are performed automatically before images are deployed to production.
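
A scan can act as a pipeline gate by making the scanner’s exit code fail the build. The sketch below uses Trivy for this; `scan_gate` and the `TRIVY` override variable are hypothetical names, and the choice to block only on HIGH and CRITICAL findings is an example policy, not a rule.

```bash
# Use the real trivy CLI by default; set TRIVY=echo for a dry run.
TRIVY="${TRIVY:-trivy}"

# scan_gate IMAGE: return non-zero if the image has HIGH or CRITICAL
# vulnerabilities, so a CI job calling this will fail.
scan_gate() {
  "$TRIVY" image --exit-code 1 --severity HIGH,CRITICAL "$1"
}
```

In a pipeline, something like `scan_gate my-hello-world && docker push my-hello-world` would only publish images that pass the scan.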

Advanced Docker Concepts (Optional)

Stepping beyond the basics, let’s delve into some more advanced Docker features that can significantly enhance your containerization workflow and unlock greater potential for your projects. Mastering these concepts will transform you from a Docker user into a Docker ninja. These techniques are often crucial for managing complex applications and ensuring robust, scalable deployments.

This section explores Docker volumes for persistent data, different Docker network types, Docker Swarm for container orchestration, and the vital role of Docker secrets management.

Docker Volumes

Docker volumes provide a mechanism for persisting data generated and used by containers. Unlike bind mounts, which directly link a directory on your host machine to a container, volumes are managed by Docker itself. This offers several advantages, including improved data management, portability, and backup capabilities. Data stored in a volume persists even if the container is deleted. For example, imagine a database container. If the data lived only in the container’s own writable layer, deleting the container would also delete your database data. With a volume, your data remains safe and sound, ready to be used by a new container instance. You can also back up and restore Docker volumes, which helps with data security and resilience.
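
Here is a rough sketch of creating a named volume for a database and backing it up with a throwaway container. The helper names `start_db` and `backup_volume`, the `DOCKER` override, the container name, and the placeholder password are assumptions for illustration; the destination directory is assumed to be an absolute path on the host.

```bash
# Use the real docker CLI by default; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-docker}"

# start_db VOLUME: run MySQL with its data directory on a named volume.
start_db() {
  "$DOCKER" volume create "$1"
  "$DOCKER" run -d --name db -v "$1":/var/lib/mysql \
    -e MYSQL_ROOT_PASSWORD=change-me mysql:8
}

# backup_volume VOLUME DEST_DIR: archive the volume's contents into
# DEST_DIR/VOLUME.tar.gz. The volume is mounted read-only for safety.
backup_volume() {
  local volume="$1" dest="$2"
  "$DOCKER" run --rm \
    -v "$volume":/data:ro \
    -v "$dest":/backup \
    alpine tar czf "/backup/$volume.tar.gz" -C /data .
}
```

Removing the `db` container with `docker rm` leaves the volume in place; a new container started with the same `-v` option picks up the existing data. For a consistent database backup, stop the database or use its own dump tool before archiving.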

Docker Networks

Docker networks define how containers communicate with each other and with the outside world. Docker offers several network types, each suited to different needs. On the default bridge network, containers are separated from the host network and can reach each other by IP address, but they get no automatic name resolution. For applications requiring inter-container communication, you can create custom (user-defined) bridge networks, which let containers find each other by name and keep unrelated containers apart. A common use case is using a custom network to allow several microservices to interact efficiently. Alternatively, the host network allows containers to share the host’s network namespace, providing direct access to the host’s network interfaces and IP addresses. This can be convenient for debugging but sacrifices isolation. Finally, overlay networks enable communication between containers across multiple Docker hosts and are the networking layer used by Docker Swarm.

Docker Swarm

Docker Swarm is a native container orchestration tool built into Docker Engine. It allows you to manage a cluster of Docker hosts as a single entity, enabling you to deploy and scale applications across multiple machines. Swarm simplifies the process of managing container deployments, scaling, and high availability. Imagine a scenario where you need to run hundreds of instances of your application to handle peak traffic. Manually managing all these containers across multiple servers would be a nightmare. Docker Swarm automates this process, allowing you to easily scale your application up or down based on demand. Swarm uses one or more manager nodes to coordinate the worker nodes, distributing workloads and keeping the desired number of replicas running.
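
A minimal sketch of deploying and rescaling a replicated service is shown below. It assumes the host has already joined a swarm (for a single-node test, `docker swarm init`); the helper names `deploy_replicated` and `rescale`, the `DOCKER` override, and the published port 8080 are illustrative assumptions.

```bash
# Use the real docker CLI by default; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-docker}"

# deploy_replicated NAME IMAGE REPLICAS: create a service whose replicas
# Swarm spreads across the cluster, published on port 8080.
deploy_replicated() {
  local name="$1" image="$2" replicas="$3"
  "$DOCKER" service create --name "$name" --replicas "$replicas" \
    --publish 8080:80 "$image"
}

# rescale NAME REPLICAS: change the desired replica count.
rescale() {
  "$DOCKER" service scale "$1=$2"
}
```

For example, `deploy_replicated web nginx 3` followed later by `rescale web 5` grows the service from three to five replicas, and Swarm decides which nodes run them.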

Docker Secrets Management

Storing sensitive information like API keys, database passwords, and certificates directly within your Docker images is a major security risk. Docker secrets, a feature available to Swarm services, provide a secure way to handle such sensitive data. Instead of hardcoding secrets into your application code or configuration files, you store them outside your images, and Docker makes them available only to the services that have been granted access, as files under `/run/secrets/` inside the container. This reduces the risk of exposing sensitive information and simplifies the management of secrets across multiple environments. For example, you might use Docker secrets to store your database credentials, allowing your application container to access the database without embedding the credentials within the container image itself.
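
The database-credentials example might be sketched like this. It assumes swarm mode is active and relies on the official MySQL image’s support for reading the root password from a file via `MYSQL_ROOT_PASSWORD_FILE`; the helper names `create_secret` and `start_db_with_secret`, the `DOCKER` override, and the service name `db` are hypothetical.

```bash
# Use the real docker CLI by default; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-docker}"

# create_secret NAME: store a secret whose value is read from stdin,
# so it never appears in the command line or shell history.
create_secret() {
  "$DOCKER" secret create "$1" -
}

# start_db_with_secret NAME: start MySQL as a service that reads its root
# password from the mounted secret file instead of an environment value.
start_db_with_secret() {
  "$DOCKER" service create --name db --secret "$1" \
    -e "MYSQL_ROOT_PASSWORD_FILE=/run/secrets/$1" mysql:8
}
```

A typical sequence would be `printf '%s' "$DB_PASSWORD" | create_secret db_password` followed by `start_db_with_secret db_password`; the password then exists only in Swarm’s encrypted store and in the running container’s `/run/secrets/db_password` file.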

Final Wrap-Up

Mastering Docker is no longer a luxury—it’s a necessity for modern developers. From simplifying development environments to streamlining deployments, Docker offers a game-changing solution for building and shipping robust, scalable applications. By understanding its architecture, commands, and security best practices, you’ll unlock a world of efficiency and consistency. So, ditch the environment headaches and embrace the power of Docker; your future self (and your team) will thank you.