The Role of Testing in Software Development: Imagine launching a rocket to the moon without checking the fuel levels. Sounds crazy, right? Software is no different. Testing isn’t just about finding bugs; it’s about building robust, reliable applications that actually work. From preventing embarrassing public failures to saving your company millions, testing is the unsung hero of the tech world. Let’s dive into why it matters.

This exploration covers everything from the various types of testing – think unit tests, integration tests, the whole shebang – to how testing integrates seamlessly into agile methodologies like Scrum. We’ll unpack the importance of test automation, the skills of a top-notch software tester, and how to navigate the often-tricky world of managing test environments. Get ready for a deep dive into the world of software testing – because let’s face it, a buggy app is a broken promise.

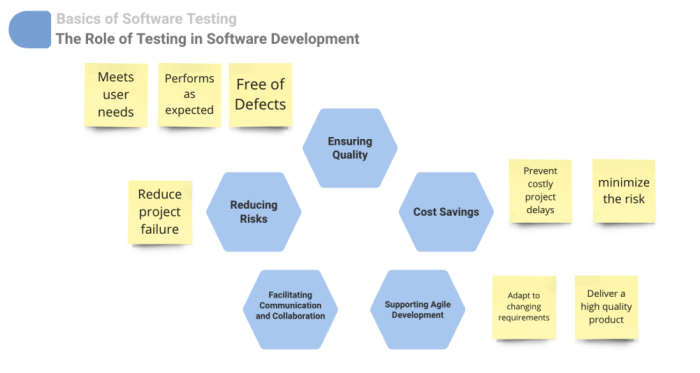

The Importance of Testing in Software Development

Software testing isn’t just a nice-to-have; it’s the bedrock of reliable and successful software. Think of it as the quality gate your digital creation must pass before it reaches the world: a crucial step that can save your reputation, your budget, and even lives. Without robust testing, you’re essentially launching a rocket without checking the fuel levels.

Reasons for the Crucial Role of Software Testing

Effective software testing is paramount for several reasons. First, it identifies bugs and defects early in the development lifecycle, significantly reducing the cost and effort of fixing them later. Imagine finding a typo in a single line of code: easy to fix. Now imagine that same typo causing a system-wide crash after release: not so easy (or cheap). Second, testing ensures the software meets the specified requirements and functions as intended. This prevents costly rework and ensures user satisfaction. Finally, thorough testing boosts user confidence and trust in the product, leading to better market adoption and increased revenue. Ignoring testing can lead to reputational damage, financial losses, and legal repercussions.

Consequences of Inadequate or Absent Testing

The absence of proper testing can result in a cascade of negative consequences. Poorly tested software is prone to crashes, security vulnerabilities, and performance issues. These problems can lead to frustrated users, lost revenue, and even legal action. Imagine a banking app that allows unauthorized access to accounts – the consequences are severe. In addition, inadequate testing can lead to missed deadlines, increased development costs due to late bug fixes, and a damaged reputation for the development team. The ripple effect of releasing untested software can be far-reaching and damaging.

Examples of Real-World Software Failures Due to Insufficient Testing

Numerous examples highlight the disastrous consequences of neglecting software testing. The infamous Therac-25 radiation therapy machine is a chilling reminder: in the 1980s it delivered massive radiation overdoses to patients, several of them fatal, because of software race conditions that testing had not caught. Similarly, the 1996 explosion of the first Ariane 5 rocket, which cost hundreds of millions of dollars, was traced to an unhandled overflow when flight software converted a 64-bit floating-point value to a 16-bit integer. These catastrophic failures underscore the critical importance of rigorous testing procedures. They aren’t isolated incidents; many less dramatic but still costly software failures occur daily due to insufficient testing.

Hypothetical Scenario Demonstrating the Benefits of Thorough Testing

Imagine a new e-commerce platform launching without thorough testing. During the initial surge of Black Friday traffic, the website crashes due to an unhandled exception in the payment processing module. This leads to lost sales, frustrated customers, and negative media coverage. However, if the developers had implemented comprehensive testing, including load testing to simulate high traffic, this critical failure could have been prevented. The cost of fixing the problem after the launch far exceeds the cost of preventative testing.
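To make the load-testing idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `PaymentProcessor`, `checkout`, and `run_load` are hypothetical names, and an in-process thread pool only approximates real traffic. A production load test would normally drive a deployed system with a dedicated tool.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class PaymentProcessor:
    """Hypothetical stand-in for a payment module with limited stock."""

    def __init__(self, stock: int) -> None:
        self._stock = stock
        self._lock = threading.Lock()

    def checkout(self, order_id: int) -> bool:
        # The lock keeps the stock check and the decrement atomic under load.
        with self._lock:
            if self._stock == 0:
                return False
            self._stock -= 1
            return True


def run_load(processor: PaymentProcessor, requests: int, workers: int) -> list:
    """Fire `requests` checkouts from `workers` threads; any exception propagates."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(processor.checkout, range(requests)))


def test_checkout_survives_traffic_spike():
    outcomes = run_load(PaymentProcessor(stock=50), requests=500, workers=32)
    assert len(outcomes) == 500  # every request received an answer
    assert sum(outcomes) == 50   # no overselling under contention
```

The test fails loudly if any request raises or if more orders succeed than there is stock, which is the kind of defect that tends to surface only under concurrent traffic.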

Types of Software Testing Methods and Their Applications

Understanding the different types of software testing is crucial for building robust applications. Different testing methods address specific aspects of software quality.

| Testing Type | Description | Application | Example |
|---|---|---|---|
| Unit Testing | Testing individual components or modules of the software. | Early bug detection, improved code maintainability. | Testing a single function that calculates the total price of items in a shopping cart. |
| Integration Testing | Testing the interaction between different modules or components. | Ensuring seamless communication between different parts of the software. | Testing the interaction between the shopping cart and the payment gateway. |
| System Testing | Testing the entire system as a whole. | Verifying that the system meets all requirements and functions as expected. | Testing the entire e-commerce platform, including user accounts, product catalog, shopping cart, and payment processing. |
| User Acceptance Testing (UAT) | Testing by end-users to ensure the software meets their needs. | Validating the software’s usability and functionality from the end-user perspective. | Having a group of potential customers test the e-commerce platform to provide feedback on its usability and functionality. |

Types of Software Testing

Software testing isn’t a one-size-fits-all affair; it’s a multifaceted process crucial for delivering high-quality software. Different testing methodologies cater to various needs and stages of the software development lifecycle. Understanding these differences is key to building robust and reliable applications. Let’s dive into the diverse world of software testing.

Software testing methodologies are categorized based on the scope and level of testing performed. Each type plays a vital role in ensuring the overall quality and functionality of the software. Choosing the right testing method depends on factors like project size, complexity, budget, and the chosen software development lifecycle model.

Unit Testing

Unit testing focuses on verifying the smallest testable parts of an application, typically individual functions or modules. Developers write unit tests to ensure each component works correctly in isolation. This approach allows for early detection of bugs, making them easier and cheaper to fix. Strengths include early bug detection and improved code maintainability. However, it can be time-consuming and may not reveal integration issues. For example, in developing a shopping cart application, a unit test might verify that the “add to cart” function correctly updates the cart total.
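As an illustration, the “add to cart” check might look like the sketch below. The `Cart` class and its methods are hypothetical, invented only for this example, and prices are kept in integer cents to sidestep floating-point rounding. The test functions use plain `assert` statements, so they can be collected by a runner such as pytest or simply called directly.

```python
from dataclasses import dataclass, field


@dataclass
class Cart:
    """Hypothetical in-memory cart; prices are integer cents."""

    items: list = field(default_factory=list)

    def add(self, name: str, price_cents: int, quantity: int = 1) -> None:
        if quantity < 1:
            raise ValueError("quantity must be at least 1")
        self.items.append((name, price_cents, quantity))

    def total_cents(self) -> int:
        return sum(price * qty for _, price, qty in self.items)


def test_add_updates_total():
    cart = Cart()
    cart.add("mug", 1250)
    cart.add("pen", 199, quantity=3)
    assert cart.total_cents() == 1250 + 3 * 199


def test_add_rejects_zero_quantity():
    cart = Cart()
    try:
        cart.add("mug", 1250, quantity=0)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for quantity=0")
    assert cart.total_cents() == 0  # the rejected item must not change the total
```

Each test exercises the cart in isolation, with no database or network involved, which is what keeps unit tests fast and their failures easy to localize.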

Integration Testing

Integration testing builds upon unit testing, verifying the interaction between different modules or components. It aims to identify defects arising from the interplay of various units. This is crucial because a perfectly functioning unit might fail when integrated with others. Strengths include early detection of integration issues and improved system stability. A weakness is the increased complexity compared to unit testing, making it potentially more time-consuming. In our shopping cart example, integration testing would check if the “add to cart” function correctly interacts with the database to store the item and update the user’s cart.
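A rough sketch of that integration check follows, using Python’s built-in `sqlite3` module with an in-memory database as a stand-in for the real one. The table layout and the `add_to_cart` function are assumptions made for this example, not a prescribed schema.

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    # Hypothetical schema: one row per (user, product) pair.
    conn.execute(
        "CREATE TABLE cart_items ("
        "user_id INTEGER, sku TEXT, quantity INTEGER, "
        "PRIMARY KEY (user_id, sku))"
    )


def add_to_cart(conn: sqlite3.Connection, user_id: int, sku: str, quantity: int = 1) -> None:
    """Insert the item, or increase its quantity if it is already in the cart."""
    row = conn.execute(
        "SELECT quantity FROM cart_items WHERE user_id = ? AND sku = ?",
        (user_id, sku),
    ).fetchone()
    if row is None:
        conn.execute(
            "INSERT INTO cart_items VALUES (?, ?, ?)", (user_id, sku, quantity)
        )
    else:
        conn.execute(
            "UPDATE cart_items SET quantity = ? WHERE user_id = ? AND sku = ?",
            (row[0] + quantity, user_id, sku),
        )
    conn.commit()


def test_add_to_cart_persists_and_merges():
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    add_to_cart(conn, 1, "MUG-01")
    add_to_cart(conn, 1, "MUG-01", quantity=2)
    rows = conn.execute(
        "SELECT sku, quantity FROM cart_items WHERE user_id = 1"
    ).fetchall()
    assert rows == [("MUG-01", 3)]
    conn.close()
```

Unlike the unit test, this one exercises the function together with real SQL, so it would catch a mismatch between the code and the schema that a mocked database could hide.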

System Testing

System testing involves testing the entire software system as a whole, encompassing all integrated modules. It assesses the system’s functionality, performance, security, and usability against specified requirements. This is a high-level test, providing a comprehensive overview of the software’s behavior. Strengths include comprehensive evaluation of the system and detection of major defects before release. However, it’s often resource-intensive, and identifying the root cause of issues can be challenging. For our shopping cart application, system testing would simulate a complete user journey, from browsing products to checkout and payment processing.

Acceptance Testing

Acceptance testing is the final stage before software release, verifying if the software meets the client’s or user’s requirements. This often involves user acceptance testing (UAT), where end-users test the system in a real-world scenario. It ensures the software aligns with business needs and user expectations. Strengths include validation against user requirements and increased user confidence. However, it can be time-consuming and may not uncover all potential issues. In our example, acceptance testing would involve real shoppers testing the application to ensure it meets their needs and is user-friendly.

Testing Methodologies and Software Development Life Cycle Models

The choice of testing methodology often aligns with the chosen software development life cycle model. Waterfall models, with their sequential nature, typically employ a more structured testing approach, with each testing type performed sequentially. Agile methodologies, with their iterative nature, often integrate testing throughout the development process, utilizing techniques like continuous integration and continuous delivery (CI/CD) to facilitate frequent testing and feedback.

Example: A Software Project Testing Flow

Imagine developing a mobile banking application. Initially, unit tests would verify individual functions like login authentication or fund transfer calculations. Integration tests would then check the interaction between these functions, for example, ensuring the login correctly interacts with the account balance retrieval. System testing would test the entire application, simulating various user scenarios and checking for performance and security vulnerabilities. Finally, acceptance testing would involve real users testing the application to ensure it meets their needs and is user-friendly before its release.

Flowchart of Testing Activities

A flowchart depicting the typical flow of testing activities might show a sequence starting with unit testing, followed by integration testing, system testing, and finally acceptance testing. Each stage would have feedback loops allowing for iterative improvements based on test results. The flowchart would visually represent the sequential and iterative nature of the testing process, emphasizing the importance of feedback loops and continuous improvement.

Test Planning and Execution

Crafting a robust software testing plan is like designing a meticulous blueprint for a building – you wouldn’t start constructing without one, would you? A well-defined plan ensures that testing is thorough, efficient, and ultimately contributes to a higher-quality product. It’s the roadmap that guides your team through the testing process, ensuring nothing crucial gets overlooked.

Effective test planning and execution are pivotal to successful software development. They ensure that the right tests are performed at the right time, maximizing the chances of identifying and resolving defects before the software reaches end-users. This translates to reduced costs, improved user satisfaction, and a stronger reputation for the development team.

Key Elements of a Comprehensive Software Testing Plan

A comprehensive software testing plan should include several key elements. These elements provide a framework for the entire testing process, from initial planning to final reporting. Think of it as the detailed instruction manual for your testing team.

- Testing Objectives: Clearly defined goals of the testing process. For example, “Identify 90% of critical defects before release.”

- Scope and Out of Scope: What aspects of the software will be tested, and which will be excluded? This is crucial for managing expectations and resources. For instance, testing might focus on core functionalities while leaving less critical features for later testing cycles.

- Test Environment: Details of the hardware, software, and network configurations used for testing. This ensures consistency and reproducibility of test results. A specification detailing the operating system, browser versions, and database used for the testing would be included.

- Test Schedule: A timeline outlining the various testing phases and their durations. This helps maintain project momentum and track progress. This includes start and end dates for each phase, including unit, integration, system, and user acceptance testing.

- Test Data: The data sets used for testing. This should cover various scenarios, including boundary conditions and edge cases. Example data might include valid and invalid user inputs, different data volumes, and extreme values to test the software’s robustness.

- Risk Assessment: Identifying potential risks and developing mitigation strategies. This proactive approach minimizes disruptions and ensures smooth testing execution. This might involve identifying areas of the software with higher complexity and therefore higher risk of defects.

- Resource Allocation: Identifying the personnel, tools, and other resources required for testing. This includes assigning roles and responsibilities to team members.

- Reporting and Communication: Defining the methods and frequency of reporting on test progress and results. Regular updates keep stakeholders informed and allow for timely course correction.
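The “Test Data” element above mentions boundary conditions and extreme values. One common way to derive such data is boundary value analysis, sketched below. The rule being tested (an order quantity between 1 and 99) and both function names are assumptions chosen purely for illustration.

```python
def is_valid_quantity(value, minimum=1, maximum=99):
    """Hypothetical rule: a quantity must be an int within [minimum, maximum]."""
    if isinstance(value, bool) or not isinstance(value, int):
        return False
    return minimum <= value <= maximum


def boundary_values(minimum, maximum):
    """Values just outside, on, and just inside each boundary."""
    return [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]


def test_quantity_boundaries():
    expected = {0: False, 1: True, 2: True, 98: True, 99: True, 100: False}
    for value in boundary_values(1, 99):
        assert is_valid_quantity(value) is expected[value], value


def test_quantity_rejects_invalid_types():
    for bad_input in ("5", 2.5, None, True):
        assert is_valid_quantity(bad_input) is False, bad_input
```

Six values around the two limits plus a handful of wrong types cover the inputs most likely to expose off-by-one and type-handling mistakes, without testing every number in the range.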

Designing Effective Test Cases and Test Suites

A test case is a documented set of preconditions, inputs, steps, and expected results that verifies one specific function or behavior of the software. A test suite is a collection of related test cases. Careful design of both is critical for efficient and comprehensive testing.

Effective test case design involves clearly defining the objective, the steps to perform, the test data, and the expected results; the actual results are recorded when the test is executed and compared against the expected ones. A well-structured test case is repeatable, and its results are easy to verify. A good test case should also consider different input values and edge cases to thoroughly test the system’s behavior.

- Clear and Concise Steps: Each step should be unambiguous and easy to follow.

- Expected Results Defined: The expected outcome of each test case should be explicitly stated.

- Test Data Specification: The data required for each test case should be clearly identified.

- Test Environment Details: The necessary hardware and software configurations should be documented.

Prioritizing Test Cases Based on Risk and Criticality

Not all test cases are created equal. Some functionalities are more critical than others, and some are more prone to errors. Prioritization helps focus resources on the most important aspects first.

Prioritization often involves techniques like risk assessment and assigning severity levels to potential defects. Critical functionalities that impact the core usability of the software are prioritized higher than less important features. This allows for efficient allocation of testing time and resources, ensuring that the most critical areas are thoroughly tested first.

- Risk-Based Prioritization: Focus on areas with high potential impact and likelihood of failure.

- Criticality-Based Prioritization: Prioritize testing of core functionalities that are essential for the software’s operation.

- Severity Levels: Assign severity levels to potential defects (e.g., critical, major, minor) to guide prioritization.
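One simple way to turn these three ideas into an ordering is to rank by severity first and by a risk score (likelihood times impact) second. The sketch below assumes 1-to-5 scales and the hypothetical names `PlannedTest` and `prioritize`; real teams may weight the factors differently.

```python
from dataclasses import dataclass

# Assumed ranking of the severity levels named above.
SEVERITY_WEIGHT = {"critical": 3, "major": 2, "minor": 1}


@dataclass(frozen=True)
class PlannedTest:
    name: str
    likelihood: int  # 1 (failure unlikely) to 5 (failure very likely)
    impact: int      # 1 (cosmetic) to 5 (business-stopping)
    severity: str    # "critical", "major", or "minor"

    @property
    def risk_score(self) -> int:
        return self.likelihood * self.impact


def prioritize(cases):
    """Order test cases by severity first, then by risk score, highest first."""
    return sorted(
        cases,
        key=lambda case: (SEVERITY_WEIGHT[case.severity], case.risk_score),
        reverse=True,
    )


backlog = [
    PlannedTest("profile avatar upload", likelihood=4, impact=1, severity="minor"),
    PlannedTest("login", likelihood=2, impact=5, severity="critical"),
    PlannedTest("search filters", likelihood=3, impact=3, severity="major"),
    PlannedTest("checkout payment", likelihood=3, impact=5, severity="critical"),
]

for case in prioritize(backlog):
    print(f"{case.severity:<8} risk={case.risk_score:<2} {case.name}")
```

With this sample backlog, the two critical cases come first (checkout payment ahead of login because its risk score is higher), followed by search filters and then the avatar upload.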

Executing a Software Testing Plan: A Step-by-Step Guide

Executing the plan involves a structured approach to ensure thorough testing and efficient defect tracking. This process involves meticulous execution of test cases, careful documentation of results, and timely reporting.

- Test Environment Setup: Configure the testing environment according to the plan.

- Test Case Execution: Execute test cases systematically, documenting actual results.

- Defect Reporting: Report any discrepancies between expected and actual results, including detailed steps to reproduce the defect.

- Defect Tracking: Track the status of reported defects until they are resolved.

- Test Closure: Finalize testing activities, document results, and prepare a test summary report.

Documenting Test Results and Generating Reports

Thorough documentation is crucial for tracking progress, identifying areas for improvement, and providing stakeholders with a clear picture of the software’s quality.

Test reports provide a concise summary of the testing activities, highlighting key findings and recommendations. They are essential for decision-making and ensuring the software meets quality standards.

- Summary of Testing Activities: Overview of the testing scope, methods used, and timelines.

- Test Case Execution Status: Number of test cases executed, passed, failed, and not executed.

- Defect Statistics: Number of defects found, their severity levels, and resolution status.

- Test Coverage: Percentage of requirements or functionalities tested.

- Overall Assessment: An overall evaluation of the software’s quality and readiness for release.

- Recommendations: Suggestions for improvements or further testing.
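The numeric parts of such a report (execution status and coverage) are straightforward to compute from raw results. The following sketch assumes each test is recorded with one of three status strings and that coverage is measured as requirements covered over requirements total; the function name and the input shape are illustrative only.

```python
from collections import Counter


def summarize(results, requirements_total, requirements_covered):
    """Build the headline numbers for a test summary report.

    `results` maps a test case name to "passed", "failed", or "not_executed".
    """
    counts = Counter(results.values())
    executed = counts["passed"] + counts["failed"]
    pass_rate = round(100 * counts["passed"] / executed, 1) if executed else 0.0
    coverage = (
        round(100 * requirements_covered / requirements_total, 1)
        if requirements_total
        else 0.0
    )
    return {
        "executed": executed,
        "passed": counts["passed"],
        "failed": counts["failed"],
        "not_executed": counts["not_executed"],
        "pass_rate_pct": pass_rate,
        "coverage_pct": coverage,
    }


report = summarize(
    {
        "login": "passed",
        "checkout": "failed",
        "search": "passed",
        "export": "not_executed",
    },
    requirements_total=10,
    requirements_covered=8,
)
print(report)
```

Note that the pass rate is computed over executed tests only, so a large “not executed” count cannot hide behind a high pass rate; both figures should be read together.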

Test Automation

Automating software testing is no longer a luxury; it’s a necessity in today’s fast-paced development cycles. The ability to rapidly execute tests, receive immediate feedback, and ensure consistent quality is crucial for delivering successful software projects. However, the journey to automated testing isn’t without its hurdles. This section delves into the advantages and challenges, exploring various frameworks and best practices to help you navigate this essential aspect of software development.

Benefits and Challenges of Automating Software Testing

Automating software testing offers significant benefits, primarily increased efficiency and reduced costs. Automated tests can be run repeatedly and quickly, identifying bugs early in the development process. This reduces the overall time and resources spent on manual testing, leading to faster release cycles and improved product quality. However, the initial investment in setting up an automated testing framework can be substantial. Maintaining and updating automated test scripts as the software evolves requires ongoing effort and expertise. Furthermore, not all types of testing are suitable for automation; some require human judgment and intuition. The complexity of the software itself can also pose challenges in creating effective automated tests.

Comparison of Test Automation Frameworks and Tools

Several test automation frameworks cater to different needs and programming languages. Selenium, a popular open-source framework, is widely used for web application testing, allowing testers to automate browser interactions. Appium is another strong contender, focusing on mobile application testing across various platforms. Cypress, known for its ease of use and developer-friendly features, is gaining popularity for front-end testing. Each framework offers unique capabilities and strengths, with the choice often depending on the specific project requirements and team expertise. For example, Selenium’s extensive community support and cross-browser compatibility make it a robust choice for large-scale web projects, while Cypress’s real-time feedback and debugging capabilities are advantageous for rapid development cycles. Other tools like JUnit (for Java) and pytest (for Python) provide unit testing capabilities, forming the backbone of many automation strategies.

Scenarios Where Test Automation Is Most Effective

Test automation shines brightest in scenarios involving repetitive tasks, regression testing, and performance testing. Regression testing, where existing functionalities are tested after code changes, is significantly expedited through automation. Similarly, performance testing, which involves assessing the software’s response under various load conditions, is best handled through automated tools capable of simulating numerous concurrent users. Automated tests are also ideal for situations requiring consistent execution across multiple environments and configurations. For example, testing a web application across different browsers and operating systems is far more efficient with automated tests than manual testing. Conversely, scenarios requiring subjective judgment or exploratory testing are less suitable for automation.

Designing and Implementing Automated Test Scripts

Designing effective automated test scripts involves a structured approach. First, identify the test cases suitable for automation. Then, select an appropriate test automation framework and tools based on the project’s technology stack and testing needs. Next, create clear and concise test scripts, following best practices for code readability and maintainability. Each script should focus on a specific aspect of the software’s functionality, with clear steps and expected outcomes. The scripts should be designed to handle potential errors and exceptions gracefully, providing informative error messages. Finally, integrate the automated tests into the continuous integration/continuous delivery (CI/CD) pipeline for seamless execution and feedback.

Best Practices for Maintaining and Improving Automated Test Suites

Maintaining and improving automated test suites is crucial for their long-term effectiveness. Regularly review and update the test scripts to reflect changes in the software. Implement a robust framework for managing test data and configurations. Use version control systems to track changes and facilitate collaboration among team members. Regularly run the automated tests and analyze the results to identify areas for improvement. Employ techniques like code reviews and static analysis to ensure the quality of the test scripts. A well-maintained automated test suite is a valuable asset, providing continuous feedback and contributing significantly to the overall software quality.

Testing and Agile Development

Agile methodologies, with their emphasis on iterative development and collaboration, have revolutionized software development. Testing, far from being an afterthought, is deeply integrated into the Agile process, ensuring quality is built in from the very beginning, rather than tacked on at the end. This approach leads to faster feedback loops, reduced risks, and ultimately, happier clients.

Testing’s role in Agile isn’t just about finding bugs; it’s about validating assumptions, guiding development, and ensuring the software meets the evolving needs of the project. This seamless integration is crucial for the success of any Agile project.

Testing Integration in Agile Methodologies

Agile methodologies like Scrum and Kanban incorporate testing throughout the development lifecycle. In Scrum, for example, testing is a core part of each sprint, with dedicated testing activities planned and executed alongside development tasks. Daily stand-ups include discussions on testing progress and any roadblocks encountered. Kanban, with its focus on visualizing workflow, often uses swim lanes to clearly delineate testing tasks and their progress within the overall development process. This constant feedback loop ensures issues are identified and addressed early, preventing them from snowballing into larger problems later in the development cycle. The goal is to deliver working software incrementally, with each increment thoroughly tested.

Continuous Integration and Continuous Delivery (CI/CD) in Testing

CI/CD pipelines are the backbone of modern Agile testing. CI involves regularly integrating code changes into a shared repository, followed by automated builds and tests. This allows for early detection of integration issues and ensures the codebase remains stable. CD extends this by automating the release process, making it possible to deploy new versions of the software frequently and reliably. Automated tests, including unit, integration, and system tests, are crucial components of CI/CD pipelines, providing immediate feedback on the quality of each code change. A well-designed CI/CD pipeline minimizes the risk of introducing bugs and significantly speeds up the development process. For example, imagine a team deploying a new feature every day – CI/CD ensures each deployment is tested rigorously before reaching users.

Test-Driven Development (TDD)

TDD flips the traditional development process on its head. Instead of writing code first and then testing it, TDD advocates writing tests *before* writing the code itself. This ensures that the code is written to meet specific requirements, and that those requirements are testable. The cycle involves writing a failing test, writing the minimum amount of code necessary to pass the test, and then refactoring the code to improve its design. This iterative approach helps developers focus on writing clean, maintainable code that meets the defined requirements. A simple example is developing a function to calculate the area of a circle. Before writing the function, a test would be written to verify its output for various inputs.
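The circle example could play out roughly as follows. The tests are listed first because, in TDD, they are written first and fail until `circle_area` exists. The function name and the decision to reject negative radii are assumptions made for this sketch.

```python
import math


# Step 1 (red): these tests are written before the implementation exists.
def test_area_of_unit_circle():
    assert math.isclose(circle_area(1), math.pi)


def test_area_scales_with_square_of_radius():
    assert math.isclose(circle_area(2), 4 * math.pi)


def test_zero_radius_has_zero_area():
    assert circle_area(0) == 0


def test_negative_radius_is_rejected():
    try:
        circle_area(-1)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for a negative radius")


# Step 2 (green): the minimum code needed to make the tests pass.
def circle_area(radius):
    if radius < 0:
        raise ValueError("radius must be non-negative")
    return math.pi * radius ** 2
```

Step 3 (refactor) would then clean up the implementation while the tests keep guarding its behavior; here the function is already small enough that nothing needs to change.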

Agile Principles Influencing Testing Strategies

Agile principles directly impact testing strategies. The emphasis on collaboration leads to testers working closely with developers throughout the development process. The iterative nature of Agile means testing is continuous, with frequent feedback loops ensuring early detection and resolution of issues. The focus on delivering value quickly means that testing is prioritized based on risk and business value. For instance, critical functionalities will be tested more thoroughly than less important features. This prioritization ensures that the most important aspects of the software are always functioning correctly.

Key Differences Between Testing in Agile and Waterfall Methodologies

The differences between testing in Agile and Waterfall methodologies are significant:

- Testing Timing: In Agile, testing is integrated throughout the development lifecycle; in Waterfall, testing is typically a separate phase at the end.

- Testing Approach: Agile favors continuous testing and rapid feedback loops; Waterfall employs a more sequential approach with distinct testing phases.

- Test Automation: Agile strongly encourages test automation; Waterfall may have less emphasis on automation.

- Collaboration: Agile promotes close collaboration between developers and testers; Waterfall often has more separation between these roles.

- Feedback: Agile relies on frequent feedback loops; Waterfall feedback is typically less frequent and happens at the end of phases.

Managing Test Environments

Think of your software like a chameleon – it needs to adapt to different environments to truly shine. A well-defined test environment is crucial for ensuring your software performs flawlessly across various platforms and conditions, mirroring real-world usage as closely as possible. Without it, you risk releasing buggy software that fails spectacularly in production, leading to frustrated users and costly fixes.

Managing multiple test environments, however, presents its own set of challenges. It’s a balancing act between resources, time, and the need for accurate simulations. Inconsistencies between these environments can lead to unreliable test results, masking critical bugs and delaying releases. This section delves into the importance of managing test environments effectively and efficiently.

Importance of Well-Defined Test Environments

Well-defined test environments are essential for accurate and reliable software testing. They provide a controlled setting that closely resembles the production environment, allowing testers to identify and resolve issues before they reach end-users. This minimizes the risk of unexpected failures and improves overall software quality. A well-defined environment includes specifications for hardware, software, network configurations, and data, ensuring consistent test results and facilitating efficient debugging. For example, a mobile app might require testing on various Android and iOS versions, each with its own specific configuration, emulating different user scenarios and network conditions. Without a well-defined environment, inconsistencies could lead to bugs appearing only in certain environments, making them difficult to track down.

Challenges of Managing Multiple Test Environments

Maintaining multiple test environments presents several significant challenges. The primary challenge is the sheer cost and complexity involved in setting up and maintaining these environments. This includes the cost of hardware, software licenses, and the time investment required for configuration and maintenance. Another significant hurdle is ensuring consistency across all environments. Slight variations in configuration can lead to different test results, making it difficult to pinpoint the root cause of issues. Furthermore, managing access and permissions for multiple testers across different environments can be a logistical nightmare, potentially leading to conflicts and errors. Finally, keeping these environments synchronized and up-to-date with the latest software versions and patches is a continuous and demanding process.

Best Practices for Setting Up and Maintaining Test Environments

Effective test environment management requires a well-defined strategy. This begins with creating a detailed plan outlining the specific needs of each environment, including hardware and software specifications, network configurations, and data requirements. Automation plays a vital role; scripting the setup and configuration process ensures consistency and reduces manual errors. Version control for environment configurations is crucial for tracking changes and reverting to previous states if necessary. Regular maintenance, including updates, patches, and backups, is essential for preventing issues and ensuring the stability of the environments. Furthermore, establishing clear access control and permissions prevents unauthorized modifications and maintains data integrity. Finally, regularly reviewing and updating the test environment plan to reflect changing requirements ensures the environments remain relevant and effective.

Ensuring Consistency Across Different Test Environments

Maintaining consistency across test environments is paramount for reliable testing. This involves using standardized procedures for setting up and configuring each environment. Employing configuration management tools can automate the process and ensure consistency across all environments. Regular audits and comparisons of environment configurations can identify and rectify any inconsistencies. Using virtualization or containerization technologies can create consistent, reproducible environments. By carefully controlling and monitoring the environments, and implementing robust version control, the consistency and reliability of test results are dramatically improved. A consistent environment ensures that bugs found in one environment are likely to be reproducible in others, greatly aiding in debugging and improving the overall quality of the software.
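The “regular audits and comparisons” mentioned above can be partly automated. The sketch below compares two environment descriptions and reports every setting that differs. The dictionaries, their keys, and the `config_drift` function are hypothetical; in practice the values would be collected from the environments themselves rather than typed by hand.

```python
MISSING = "<missing>"  # marker for a setting that one environment lacks


def config_drift(reference: dict, candidate: dict) -> dict:
    """Return {setting: (reference_value, candidate_value)} for each mismatch."""
    drift = {}
    for key in sorted(reference.keys() | candidate.keys()):
        ref_value = reference.get(key, MISSING)
        cand_value = candidate.get(key, MISSING)
        if ref_value != cand_value:
            drift[key] = (ref_value, cand_value)
    return drift


# Hypothetical environment descriptions.
production = {"os": "ubuntu-22.04", "python": "3.11", "postgres": "15", "tls": True}
staging = {"os": "ubuntu-22.04", "python": "3.10", "postgres": "15"}

for setting, (expected, actual) in config_drift(production, staging).items():
    print(f"{setting}: production={expected!r} staging={actual!r}")
```

For the sample data, the audit flags two differences: the Python version and a `tls` setting that staging does not define at all.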

Test Environment Readiness Checklist

Before commencing testing, a thorough verification of the test environment’s readiness is crucial. This involves a comprehensive checklist covering several critical aspects.

- Hardware Verification: Confirm all hardware components (servers, network devices, etc.) are functioning correctly and meet the specified requirements.

- Software Verification: Verify all necessary software (operating systems, databases, applications) are installed, configured correctly, and at the required versions.

- Network Verification: Ensure network connectivity, bandwidth, and security settings are appropriately configured and meet the test requirements.

- Data Verification: Confirm the test data is accurate, complete, and consistent across all environments. This includes checking data integrity, volume, and structure.

- Security Verification: Verify security measures, such as firewalls and access controls, are correctly implemented and functioning as expected.

- Backup Verification: Ensure that backups of the test environment are regularly performed and readily available in case of failure.

- Documentation Verification: Verify that all necessary documentation, including configuration details and troubleshooting guides, is readily accessible.
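Parts of a checklist like this can be scripted so that readiness is verified the same way before every test run. The sketch below is only a skeleton: the two checks shown are placeholder assumptions, and a real environment would plug in its own hardware, network, data, and security checks.

```python
import sys
import tempfile


def run_checklist(checks):
    """Run each named check; a check passes only if it returns a truthy value."""
    results = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            # A check that crashes is treated as "not ready".
            results[name] = False
    return results


def environment_ready(results):
    """The environment is ready only when every check has passed."""
    return all(results.values())


def scratch_storage_is_writable():
    with tempfile.TemporaryFile() as handle:
        handle.write(b"ok")
    return True


# Placeholder checks, keyed by the checklist item they belong to.
CHECKS = {
    "software: Python 3.8 or newer": lambda: sys.version_info >= (3, 8),
    "data: scratch storage is writable": scratch_storage_is_writable,
}

if __name__ == "__main__":
    outcome = run_checklist(CHECKS)
    for name, passed in outcome.items():
        print(f"[{'ok' if passed else 'FAIL'}] {name}")
    sys.exit(0 if environment_ready(outcome) else 1)
```

Exiting with a non-zero status on failure lets a CI job stop before any tests run against an environment that is not ready.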

Ending Remarks

So, there you have it – the crucial role of testing in software development. It’s not just about catching errors; it’s about building confidence, reducing risk, and ultimately, delivering a product users will love. From meticulous planning and execution to the power of automation and the collaborative spirit of the testing team, every step contributes to creating software that’s not just functional, but truly exceptional. Ignoring testing? Think again. Your users (and your bank account) will thank you.